Introducing garbage collection, metrics, and trade-offs

The component of the HotSpot JVM that manages your application's heap is called the garbage collector (GC). A GC governs the whole lifecycle of application heap objects, beginning when the application allocates memory and continuing through reclaiming that memory for eventual reuse.

At a very high level, the most basic functions of garbage collection algorithms in the JVM are the following:

◉ Upon an allocation request for memory from the application, the GC provides memory. Providing that memory should be as quick as possible.

◉ The GC detects memory that the application is never going to use again. Again, this mechanism should be efficient and not take an undue amount of time. This unreachable memory is also commonly called garbage.

◉ The GC then provides that memory again to the application, preferably “in time,” that is, quickly.

There are many more requirements for a good garbage collection algorithm, but these three are the most basic ones and are sufficient for this discussion.

There are many ways to satisfy all these requirements, but unfortunately there is no silver bullet and no one-size-fits-all algorithm. For this reason, the JDK provides a few garbage collection algorithms to choose from, and each is optimized for different use cases. Their implementation roughly dictates behavior for one or more of the three main performance metrics of throughput, latency, and memory footprint and how they impact Java applications.

◉ Throughput represents the amount of work that can be done in a given time unit. In terms of this discussion, a garbage collection algorithm that performs more collection work per time unit is preferable, allowing higher throughput of the Java application.

◉ Latency gives an indication of how long a single operation of the application takes. A garbage collection algorithm focused on latency tries to minimize its impact on latency. In the context of a GC, the key concerns are whether its operation induces pauses, the extent of any pauses, and how long the pauses may be.

◉ Memory footprint in the context of a GC means how much extra memory beyond the application's Java heap memory usage the GC needs for proper operation. Data used purely for the management of the Java heap takes away from the application; if the amount of memory the GC (or, more generally, the JVM) uses is less, more memory can be provided to the application's Java heap.

These three metrics are connected: A high-throughput collector may significantly impact latency (but minimizes impact on the application) and the other way around. Lower memory consumption may require the use of algorithms that are less optimal in the other metrics. Lower-latency collectors may do more work concurrently or in small steps as part of the execution of the application, taking away more processor resources.

This relationship is often graphed in a triangle with one metric in each corner, as shown in Figure 1. Every garbage collection algorithm occupies a part of that triangle based on where it is targeted and what it is best at.

Figure 1. The GC performance metrics triangle

Trying to improve a GC in one or more of the metrics often penalizes the others.

The OpenJDK GCs in JDK 18

OpenJDK provides a diverse set of five GCs that focus on different performance metrics. Table 1 lists their names, their area of focus, and some of the core concepts used to achieve the desired properties.

Table 1. OpenJDK's five GCs

| Garbage collector | Focus area | Concepts |
| --- | --- | --- |
| Parallel | Throughput | Multithreaded stop-the-world (STW) compaction and generational collection |
| Garbage First (G1) | Balanced performance | Multithreaded STW compaction, concurrent liveness, and generational collection |
| Z Garbage Collector (ZGC) (since JDK 15) | Latency | Everything concurrent to the application |
| Shenandoah (since JDK 12) | Latency | Everything concurrent to the application |
| Serial | Footprint and startup time | Single-threaded STW compaction and generational collection |

The Parallel GC is the default collector for JDK 8 and earlier. It focuses on throughput by trying to get work done as quickly as possible with minimal regard to latency (pauses).

The Parallel GC frees memory by evacuating (that is, copying) the in-use memory to other locations in the heap in more compact form, leaving large areas of then-free memory within STW pauses. STW pauses occur when an allocation request cannot be satisfied; then the JVM stops the application completely, lets the garbage collection algorithm perform its memory compaction work with as many processor threads as available, allocates the memory requested in the allocation, and finally continues execution of the application.

The Parallel GC is also a generational collector, which maximizes garbage collection efficiency. More on the idea of generational collection is discussed later.

G1, the default collector since JDK 9, performs lengthy work concurrently with the application, that is, while the application is running, using multiple threads. This decreases maximum pause times significantly, at the cost of some overall throughput.

The ZGC and Shenandoah GCs focus on latency at the cost of throughput. They attempt to do all garbage collection work without noticeable pauses. Currently neither is generational. They were first introduced in JDK 15 and JDK 12, respectively, as nonexperimental versions.

The Serial GC focuses on footprint and startup time. This GC is like a simpler and slower version of the Parallel GC, as it uses only a single thread for all work within STW pauses. The heap is also organized in generations. However, the Serial GC excels at footprint and startup time, making it particularly suitable for small, short-running applications due to its reduced complexity.

OpenJDK provides another GC, Epsilon, which I omitted from Table 1. Why? Because Epsilon only allows memory allocation and never performs any reclamation, it does not meet all the requirements for a GC. However, Epsilon can be useful for some very narrow and special-niche applications.
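To check which of these collectors a given JVM is actually running, one option is to ask the standard `java.lang.management` API for its registered GC beans. The following is a minimal sketch; the class name `ActiveCollectors` is made up for illustration, and the bean names printed depend on the collector and JDK version (with G1 they are typically along the lines of "G1 Young Generation" and "G1 Old Generation").

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

// Sketch: report which collector the running JVM selected.
// Common HotSpot selection flags: -XX:+UseSerialGC, -XX:+UseParallelGC,
// -XX:+UseG1GC, -XX:+UseZGC, -XX:+UseShenandoahGC (availability depends on the JDK build).
final class ActiveCollectors {

    // Returns the names of the GC management beans registered by this JVM.
    static List<String> collectorNames() {
        List<String> names = new ArrayList<>();
        for (GarbageCollectorMXBean bean : ManagementFactory.getGarbageCollectorMXBeans()) {
            names.add(bean.getName());
        }
        return names;
    }

    public static void main(String[] args) {
        for (String name : collectorNames()) {
            System.out.println(name);
        }
    }
}
```

Running it once with `-XX:+UseParallelGC` and once with `-XX:+UseG1GC` should print different bean names, which is a quick way to confirm that a flag took effect.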

Quick introduction to the G1 GC

How does G1 achieve this balance between throughput and latency?

One key technique is generational garbage collection. It exploits the observation that the most recently allocated objects are the most likely ones that can be reclaimed almost immediately (they “die” quickly). So G1, and any other generational GC, splits the Java heap into two areas: a so-called young generation into which objects are initially allocated and an old generation where objects that live longer than a few garbage collection cycles for the young generation are placed so they can be reclaimed with less effort.
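The split into generations is visible from inside a running application through the heap memory pools that the JVM exposes. The sketch below lists them with their current usage; the class name `HeapPools` is hypothetical, and the pool names are JVM-specific (with G1 they are usually "G1 Eden Space", "G1 Survivor Space", and "G1 Old Gen", where eden and survivor together form the young generation).

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch: show how the Java heap is divided into pools (generations).
final class HeapPools {

    // Maps each heap memory pool name to the number of bytes currently used.
    static Map<String, Long> heapPoolUsage() {
        Map<String, Long> usage = new LinkedHashMap<>();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if (pool.getType() != MemoryType.HEAP) {
                continue; // skip metaspace, code cache, and other non-heap pools
            }
            MemoryUsage current = pool.getUsage(); // null if the pool is no longer valid
            if (current != null) {
                usage.put(pool.getName(), current.getUsed());
            }
        }
        return usage;
    }

    public static void main(String[] args) {
        heapPoolUsage().forEach((name, used) ->
                System.out.println(name + ": " + (used / 1024) + " KiB used"));
    }
}
```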

The young generation is typically much smaller than the old generation. Therefore, the effort for collecting it, plus the fact that a tracing GC such as G1 processes only reachable (live) objects during young-generation collections, means the time spent garbage collecting the young generation generally is short, and a lot of memory is reclaimed at the same time.

At some point, longer-living objects are moved into the old generation.

Therefore, from time to time, there is a need to collect garbage and reclaim memory from the old generation as it fills up. Since the old generation is typically large, and it often contains a significant number of live objects, this can take quite some time. (For example, the Parallel GC's full collections often take many times longer than its young-generation collections.)

For this reason, G1 splits old-generation garbage collection work into two phases.

◉ G1 first traces through the live objects concurrently to the Java application. This moves a large part of the work needed for reclaiming memory from the old generation out of the garbage collection pauses, thus reducing latency. The actual memory reclamation, if done all at once, would still be very time consuming on large application heaps.

◉ Therefore, G1 incrementally reclaims memory from the old generation. After the tracing of live objects, for every one of the next few regular young-generation collections, G1 compacts a small part of the old generation in addition to the whole young generation, reclaiming memory there as well over time.

Reclaiming the old generation incrementally is a bit less efficient than doing all this work at once (as the Parallel GC does) due to inaccuracies in tracing through the object graph as well as the time and space overhead for managing support data structures for incremental garbage collections, but it significantly decreases the maximum time spent in pauses. As a rough guide, incremental garbage collection pauses take around the same time as the ones reclaiming only memory from the young generation.

In addition, you can set the pause time goal for both of these types of garbage collection pauses via the MaxGCPauseMillis command-line option; G1 tries to keep the time spent below this value. The default value for this duration is 200 ms. That might or might not be appropriate for your application, but it is only a guide for the maximum. G1 will keep pause times lower than that value if possible. Therefore, a good first attempt to improve pause times is trying to decrease the value of MaxGCPauseMillis.
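When experimenting with the pause time goal, it can help to print the configured value next to what the JVM has observed so far. The following is a rough sketch, not a measurement tool: the class name `PauseGoalReport` is hypothetical, reading the flag relies on the HotSpot-specific `HotSpotDiagnosticMXBean`, and the per-collector average is derived from accumulated collection time, which only approximates pause time (especially for collectors that work concurrently).

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

// Example launch: java -XX:+UseG1GC -XX:MaxGCPauseMillis=100 PauseGoalReport
final class PauseGoalReport {

    // Mean time per collection in milliseconds; 0 when the values are missing or undefined.
    static double averageMillis(long totalMillis, long collections) {
        if (collections <= 0 || totalMillis < 0) {
            return 0.0;
        }
        return (double) totalMillis / collections;
    }

    // Reads the configured pause time goal; falls back to "unknown" outside HotSpot.
    static String pauseGoalMillis() {
        try {
            HotSpotDiagnosticMXBean hotspot =
                    ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
            return hotspot.getVMOption("MaxGCPauseMillis").getValue();
        } catch (RuntimeException e) {
            return "unknown";
        }
    }

    public static void main(String[] args) {
        System.out.println("MaxGCPauseMillis = " + pauseGoalMillis());
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long count = gc.getCollectionCount(); // -1 if undefined
            long time = gc.getCollectionTime();   // -1 if undefined
            System.out.printf("%s: %d collections, %.1f ms average%n",
                    gc.getName(), count, averageMillis(time, count));
        }
    }
}
```

For precise per-pause numbers, GC logging (for example `-Xlog:gc` on JDK 9 and later) is the more reliable source; the beans above only give running totals.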

Progress from JDK 8 to JDK 18

Now that I've introduced OpenJDK's GCs, I'll detail improvements that have been made to the three metrics (throughput, latency, and memory footprint) for the GCs over the last 10 JDK releases.

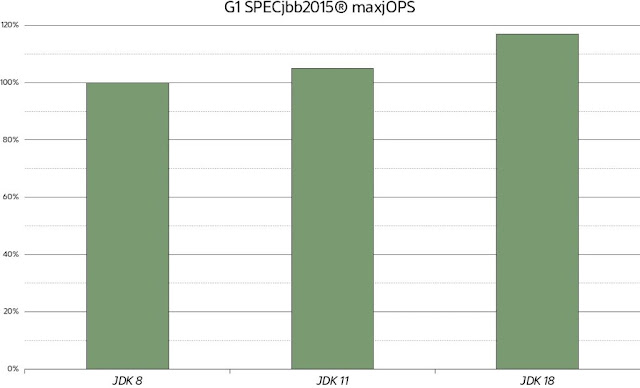

Throughput gains for G1. To demonstrate the throughput and latency improvements, this article uses the SPECjbb2015 benchmark. SPECjbb2015 is a common industry benchmark that measures Java server performance by simulating a mix of operations within a supermarket company. The benchmark provides two metrics.

◉ maxjOPS corresponds to the maximum number of transactions the system can provide. This is a throughput metric.

◉ criticaljOPS measures throughput under several service-level agreements (SLAs), such as response times, from 10 ms to 100 ms.

This article uses maxjOPS as a base for comparing the throughput for JDK releases and the actual pause time improvements for latency. While criticaljOPS values are representative of latency induced by pause time, there are other sources that contribute to that score. Directly comparing pause times avoids this problem.

Figure 2 shows maxjOPS results for G1 in composite mode on a 16 GB Java heap, graphed relative to JDK 8 for JDK 11 and JDK 18. As you can see, the throughput scores increase significantly simply by moving to later JDK releases. JDK 11 improves by around 5% and JDK 18 by around 18%, respectively, compared to JDK 8. Simply put, with later JDKs, more resources are available and used for actual work in the application.

Figure 2. G1 throughput gains measured with SPECjbb2015 maxjOPS

The discussion below attempts to attribute these throughput improvements to particular garbage collection changes. However, garbage collection performance, particularly throughput, also benefits from other generic improvements such as code compilation, so the garbage collection changes are not responsible for all the uplift.

In JDK 8 the user had to manually set the time when G1 started concurrent tracing of live objects for old-generation collection. If the time was set too early, the JVM did not use all the application heap assigned to the old generation before starting the reclamation work. One drawback was that this did not give the objects in the old generation as much time to become reclaimable. So G1 would not only take more processor resources to analyze liveness because more data was still live, but also G1 would do more work than necessary freeing memory for the old generation.

Another problem was that if the time to start old-generation collection was set too late, the JVM might run out of memory, causing a very slow full collection. Beginning with JDK 9, G1 automatically determines an optimal point at which to start old-generation tracing, and it even adapts to the current application's behavior.

In general, every release includes optimizations that make garbage collection pauses shorter while performing the same work. This leads to a natural improvement in throughput. There are many optimizations that could be listed in this article, and the following section about latency improvements points out some of them.

When NUMA awareness applies, the G1 GC assumes that objects allocated on one memory node (by a single thread or thread group) will be mostly referenced from other objects on the same node. Therefore, while an object stays in the young generation, G1 keeps objects on the same node, and it evenly distributes the longer-living objects across nodes in the old generation to minimize access-time variation. This is similar to what the Parallel GC implements.

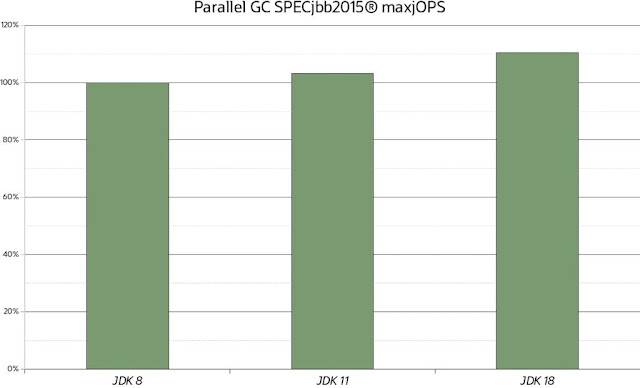

Throughput gains for the Parallel GC. Speaking of the Parallel GC, Figure 3 shows maxjOPS score improvements from JDK 8 to JDK 18 on the same heap configuration used earlier. Again, only by substituting the JVM, even with the Parallel GC, you can get a modest 2% to around a nice 10% improvement in throughput. The improvements are smaller than with G1 because the Parallel GC started off from a higher absolute value, and there has been less to gain.

Figure 3. Throughput gains for the Parallel GC measured with SPECjbb2015 maxjOPS

Latency improvements on G1. To demonstrate latency improvements for HotSpot JVM GCs, this section uses the SPECjbb2015 benchmark with a fixed load and then measures pause times. The Java heap size is set to 16 GB. Table 2 summarizes average and 99th percentile (P99) pause times and relative total pause times within the same interval for different JDK versions at the default pause time goal of 200 ms.

Table 2. Latency improvements with the default pause time of 200 ms

| | JDK 8, 200 ms | JDK 11, 200 ms | JDK 18, 200 ms |
| --- | --- | --- | --- |
| Average (ms) | 124 | 111 | 89 |
| P99 (ms) | 176 | 134 | 104 |
| Relative collection time (%) | n/a | -15.8 | -34.4 |

JDK 8 pauses take 124 ms on average, and P99 pauses are 176 ms. JDK 11 improves average pause time to 111 ms and P99 pauses to 134 ms, in total spending 15.8% less time in pauses. JDK 18 significantly improves on that once more, resulting in pauses taking 89 ms on average and P99 pause times taking 104 ms, for 34.4% less time in garbage collection pauses.
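The three kinds of figures reported here (average, P99, and relative total pause time) can be derived from a list of recorded pause durations. The sketch below shows one way to do so. The article does not state how its P99 values were computed, so the nearest-rank percentile used here is an assumption, and the class name `PauseStats` and the sample values are made up for illustration.

```java
import java.util.Arrays;

// Sketch: summary statistics over recorded GC pause durations (milliseconds).
final class PauseStats {

    // Arithmetic mean of all pauses; 0 for an empty sample.
    static double average(double[] pausesMs) {
        return Arrays.stream(pausesMs).average().orElse(0.0);
    }

    // Nearest-rank percentile: the smallest sample with at least p percent
    // of all samples less than or equal to it.
    static double percentile(double[] pausesMs, double p) {
        if (pausesMs.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        double[] sorted = pausesMs.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p / 100.0 * sorted.length);
        return sorted[Math.max(rank, 1) - 1];
    }

    // Change of total pause time relative to a baseline, in percent
    // (negative means less time spent in pauses).
    static double relativeChangePercent(double baselineTotalMs, double totalMs) {
        return (totalMs - baselineTotalMs) / baselineTotalMs * 100.0;
    }

    public static void main(String[] args) {
        double[] hypotheticalPauses = {10, 20, 30, 40, 50, 60, 70, 80, 90, 100};
        System.out.println("average = " + average(hypotheticalPauses));
        System.out.println("P99     = " + percentile(hypotheticalPauses, 99));
    }
}
```

With only ten samples, P99 under the nearest-rank definition is simply the largest pause; meaningful percentiles need far more samples than that, as in a full benchmark run.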

I extended the experiment to add a JDK 18 run with a pause time goal set to 50 ms, because I arbitrarily decided that the default for -XX:MaxGCPauseMillis of 200 ms was too long. G1, on average, met the pause time goal, with P99 garbage collection pauses taking 56 ms (see Table 3). Overall, total time spent in pauses did not increase much (0.06%) compared to JDK 8.

In other words, by substituting a JDK 8 JVM with a JDK 18 JVM, you either get significantly decreased average pauses at potentially increased throughput for the same pause time goal, or you can have G1 keep a much smaller pause time goal (50 ms) at the same total time spent in pauses, which roughly corresponds to the same throughput.

Table 3. Latency improvements by setting the pause time goal to 50 ms

| | JDK 8, 200 ms | JDK 11, 200 ms | JDK 18, 200 ms | JDK 18, 50 ms |
| --- | --- | --- | --- | --- |
| Average (ms) | 124 | 111 | 89 | 44 |
| P99 (ms) | 176 | 134 | 104 | 56 |
| Relative collection time (%) | n/a | -15.8 | -34.4 | +0.06 |

The results in Table 3 were made possible by many improvements since JDK 8. Here are the most notable ones.

The processing of remembered-set entries has been examined very thoroughly to trim unnecessary code and optimize for the common paths.

Another focus in JDKs later than JDK 8 has been improving the actual parallelization of tasks within a pause: Changes have tried to improve parallelization either by making phases parallel or by creating larger parallel phases out of smaller serial ones to avoid unnecessary synchronization points. Significant resources have been spent to improve work balancing within parallel phases so that a thread that runs out of work is cleverer when looking for work to steal from other threads.

Later JDKs also started addressing more uncommon situations, one of them being evacuation failure. Evacuation failure occurs during garbage collection if there is no more space to copy objects into.

Garbage collection pauses on ZGC. In case your application requires even shorter garbage collection pause times, Table 4 shows a comparison with one of the latency-focused collectors, ZGC, on the same workload used earlier. It shows the pause-time durations presented earlier for G1 plus an additional rightmost column showing ZGC.

Table 4. ZGC latency compared to G1 latency

| | JDK 8, 200 ms, G1 | JDK 18, 200 ms, G1 | JDK 18, 50 ms, G1 | JDK 18, ZGC |
| --- | --- | --- | --- | --- |
| Average (ms) | 124 | 89 | 44 | 0.01 |
| P99 (ms) | 176 | 104 | 56 | 0.031 |

ZGC delivers on its promise of submillisecond pause time goals, moving all reclamation work concurrent to the application. Only some minor work to provide closure of garbage collection phases still needs pauses. As expected, these pauses are very small: in this case, even far below the suggested millisecond range that ZGC aims to provide.

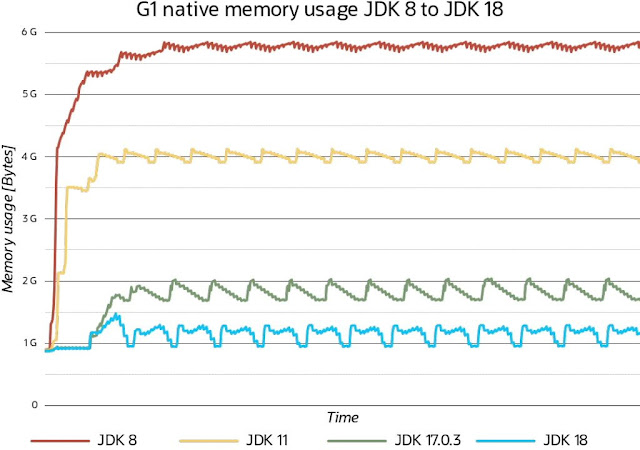

Footprint improvements for G1. The last metric this article will examine is progress in the memory footprint of the G1 garbage collection algorithm. Here, the footprint of the algorithm is defined as the amount of extra memory outside of the Java heap that it needs to provide its functionality.

In G1, in addition to static data dependent on the Java heap size, which takes up approximately 3.2% of the size of the Java heap, often the other main consumer of additional memory is remembered sets that enable generational garbage collection and, in particular, incremental garbage collection of the old generation.

One class of applications that stresses G1's remembered sets is object caches: They frequently generate references between areas within the old generation of the heap as they add and remove newly cached entries.

Figure 4. The G1 GC's native memory footprint

With JDK 8, after a short warmup period, G1 native memory usage settles at around 5.8 GB of native memory. JDK 11 improved on that, reducing the native memory footprint to around 4 GB; JDK 17 improved it to around 1.8 GB; and JDK 18 settles at around 1.25 GB of garbage collection native memory usage. This is a reduction of extra memory usage from almost 30% of the Java heap in JDK 8 to around 6% of extra memory usage in JDK 18.
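To put these absolute numbers into proportion, the short sketch below computes how much less native memory each later release uses relative to JDK 8, using only the approximate figures quoted above. The class name `FootprintReduction` is made up for illustration.

```java
import java.util.Locale;

// Sketch: relative native-memory savings versus the JDK 8 baseline.
final class FootprintReduction {

    // Percentage by which currentGb is smaller than baselineGb.
    static double reductionPercent(double baselineGb, double currentGb) {
        return (baselineGb - currentGb) / baselineGb * 100.0;
    }

    public static void main(String[] args) {
        double jdk8Gb = 5.8; // approximate steady-state value quoted in the text
        String[] releases = {"JDK 11", "JDK 17", "JDK 18"};
        double[] nativeGb = {4.0, 1.8, 1.25};
        for (int i = 0; i < releases.length; i++) {
            System.out.println(String.format(Locale.ROOT,
                    "%s: %.1f%% less GC native memory than JDK 8",
                    releases[i], reductionPercent(jdk8Gb, nativeGb[i])));
        }
    }
}
```

With the rounded inputs from the text, this works out to roughly 31%, 69%, and 78% less GC native memory for JDK 11, JDK 17, and JDK 18, respectively.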

There is no particular cost in throughput or latency associated with these changes, as earlier sections showed. Indeed, reducing the metadata the G1 GC maintains has generally improved the other metrics so far.

The main principle for these changes from JDK 8 through JDK 18 has been to maintain garbage collection metadata only on a very strict as-needed basis, maintaining only what is expected to be needed when it is needed. For this reason, G1 re-creates and manages this memory concurrently, freeing data as quickly as possible. In JDK 18, enhancements to the representation of this metadata and storing it more densely contributed significantly to the improvement of the memory footprint.

Figure 4 also shows that in later JDK releases G1 increased its aggressiveness, step by step, in giving back memory to the operating system by looking at the difference between peaks and valleys in steady-state operations; in the last release, G1 even does this process concurrently.

The future of garbage collection

Although it is hard to predict what the future holds and what the many projects to improve garbage collection and, in particular, G1, will provide, some of the following developments are more likely to end up in the HotSpot JVM in the future.

Another known disadvantage of using G1 compared to the throughput collector, Parallel GC, is its impact on throughput: users report differences in the range of 10% to 20% in extreme cases. The cause of this problem is known, and there have been a few suggestions on how to improve this drawback without compromising other qualities of the G1 GC.

Fairly recently, it has been determined that pause times and, in particular, work distribution efficiency in the garbage collection pauses are still less than optimal.

There is a lot of ongoing activity to change the ZGC and Shenandoah GCs to be generational. In many applications, the current single-generational design of these GCs has too many disadvantages regarding throughput and timeliness of reclamation, often requiring much larger heap sizes to compensate.

Source: oracle.com