This blog post continues the series of examples through which we want to show various technologies that can be used as a data source to power a Lightstreamer Data Adapter and, in turn, dispatch the data to multiple clients connected to the Lightstreamer server across the internet.

In this post, we will show a basic integration with Apache Kafka.

Kafka and Lightstreamer

Apache Kafka is an open-source, distributed event streaming platform that handles real-time data feeds. It is designed to manage high-volume, high-throughput, low-latency data streams and can be used for a variety of messaging, log aggregation, and data pipeline use cases. Kafka is highly scalable and fault-tolerant and is often used as a backbone for large-scale data architectures. It is written in Scala and Java and uses a publish-subscribe model to handle and process streaming data.

Lightstreamer is a real-time streaming server that can be used to push data to a wide variety of clients, including web browsers, mobile applications, and smart devices. Lightstreamer’s adaptive streaming capabilities help reduce bandwidth and latency, as well as traverse any kind of proxies, firewalls, and other network intermediaries. Its massive fanout capabilities allow Lightstreamer to scale to millions of concurrent clients. It can connect to various data sources, including databases, message queues, and web services. Lightstreamer can also consume data from Apache Kafka topics and then deliver it to remote clients in real time, making it a good option for streaming data from a Kafka platform to multiple clients worldwide over the internet with low latency and high reliability.

For the demo presented in this post, we have used Amazon Managed Streaming for Apache Kafka (MSK). It is a fully managed service provided by AWS that makes it easy to build and run applications that use Apache Kafka. The service handles the heavy lifting of managing, scaling, and patching Apache Kafka clusters, so you can focus on building and running your applications.

AWS MSK provides a high-performance, highly available, and secure Kafka environment that can be easily integrated with other AWS services. It lets you create and manage your Kafka clusters, and provides options for data backup and recovery, encryption, and access control. Additionally, it provides monitoring and logging capabilities that allow you to troubleshoot and debug your Kafka clusters. It is a pay-as-you-go service: you only pay for the resources you consume, and you can scale the number of broker nodes and the storage capacity as needed.

The demo

The Demo simulates a very simple departure board with a few rows showing real-time flight information to passengers of a hypothetical airport. The data are simulated with a random generator and sent to a Kafka topic. The client of the demo is a web page identical to the one developed for the demo retrieving data from DynamoDB.

The Demo Structure

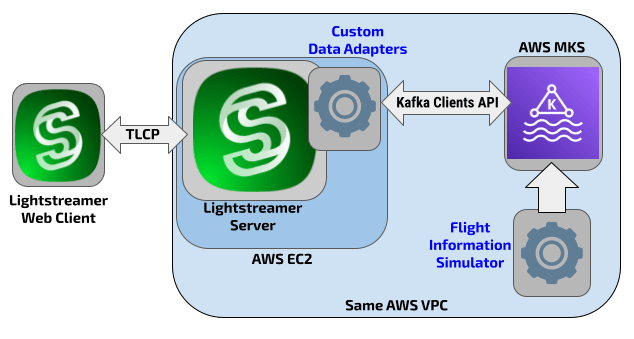

The general structure of the demo contains the component under:

- An online web page utilizing the Lightstreamer Internet Shopper SDK to connect with the Lightstreamer server and subscribe to the flight data gadgets.

- A Lightstreamer server deployed on an AWS EC2 occasion alongside the customized metadata and knowledge adapters.

- The adapters use the Java In-Course of Adapter SDK; specifically, the Information Adapter retrieves knowledge from the MSK knowledge supply via the Kafka shoppers API for Java.

- An MSK cluster with a subject named

departuresboard-001. - A simulator, additionally in-built Java language, pushing knowledge into the Kafka subject.

Adapter Details

The source code of the adapters is developed in the package: com.lightstreamer.examples.kafkademo.adapters.

The Data Adapter consists essentially of two source files:

- KafkaDataAdapter.java implements the DataProvider interface based on the Java In-Process Adapter API and deals with publishing the simulated flight information into the Lightstreamer server;

- ConsumerLoop.java implements a consumer loop for the Kafka service, retrieving the messages to be pushed into the Lightstreamer server.

As for the Metadata Adapter, the demo relies on the basic functionalities provided by the ready-made LiteralBasedProvider Metadata Adapter.

Polling data from Kafka

In order to receive data from a Kafka messaging platform, a Java application must have a Kafka Consumer. The following steps outline the basic process for receiving data from a Kafka topic:

- Set up a Kafka Consumer

- Configure the Consumer

- Subscribe to a Topic

- Poll for data

- Deserialize and process the data

Below is a snippet of code from the ConsumerLoop class implementing the steps mentioned above.
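The sketch below is not the demo's ConsumerLoop source; it is a minimal illustration of the configuration step only, written against the JDK alone. The property keys are standard Kafka consumer settings, while the class name, the group id, and the broker address are hypothetical.

```java
import java.util.Properties;

// Hypothetical helper, not part of the demo: builds the settings a Kafka consumer needs.
final class ConsumerConfigSketch {

    // Returns consumer properties for String keys and String values.
    static Properties consumerProperties(String bootstrapServers, String groupId) {
        Properties props = new Properties();
        // Where to find the cluster (for MSK, the plaintext connection string).
        props.setProperty("bootstrap.servers", bootstrapServers);
        // Consumer group used for offset tracking; the value is an assumption.
        props.setProperty("group.id", groupId);
        // Record keys (flight codes) and values (pipe-separated text) are plain strings.
        props.setProperty("key.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        props.setProperty("value.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        // Only real-time events matter here, so start from the newest records.
        props.setProperty("auto.offset.reset", "latest");
        return props;
    }

    public static void main(String[] args) {
        Properties props = consumerProperties("localhost:9092", "departuresboard-demo");
        props.forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

With the kafka-clients library on the classpath, these properties would typically be passed to `new KafkaConsumer<String, String>(props)`, followed by a `subscribe` call on the topic and a loop around `poll(Duration)`.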

Once deserialized, a message from Kafka is passed to the Data Adapter and eventually pushed into the Lightstreamer server to be dispatched to the web clients in the form of Add, Delete, or Update messages, as required by the COMMAND subscription mode. The code below implements the processing of the message.
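The following is an illustrative sketch of that mapping rather than the demo's own code. In COMMAND mode, each update carries a `key` field and a `command` field set to ADD, UPDATE, or DELETE. The class name and the rule that a missing value (null) means DELETE are assumptions made for this example.

```java
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical helper: turns (flight code, fields) pairs into COMMAND-mode updates.
final class CommandModeSketch {

    // Flight codes that have been added and not yet deleted.
    private final Set<String> knownKeys = new HashSet<>();

    // Builds the field map for one update.
    // Assumption: fields == null signals that the flight must be removed.
    Map<String, String> toUpdate(String key, Map<String, String> fields) {
        Map<String, String> update = new LinkedHashMap<>();
        update.put("key", key);
        if (fields == null) {
            knownKeys.remove(key);
            update.put("command", "DELETE");
            return update;
        }
        // Set.add returns true only the first time a key is seen.
        update.put("command", knownKeys.add(key) ? "ADD" : "UPDATE");
        update.putAll(fields);
        return update;
    }

    public static void main(String[] args) {
        CommandModeSketch sketch = new CommandModeSketch();
        Map<String, String> fields = new LinkedHashMap<>();
        fields.put("status", "Boarding");
        System.out.println(sketch.toUpdate("LS101", fields));
        System.out.println(sketch.toUpdate("LS101", fields));
        System.out.println(sketch.toUpdate("LS101", null));
    }
}
```

In an adapter, a map like this would then be handed to the listener supplied by the Lightstreamer server, so that the row identified by `key` is added, updated, or removed on every subscribed client.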

The data reception from Kafka is triggered by the subscribe function invoked in the Data Adapter when a client subscribes for the first time to the items of the demo; specifically, in the KafkaDataAdapter.java class, we have the following code:

The Simulator

The data shown by the demo are randomly generated by a simulator and published into a Kafka topic. The simulator generates flight data randomly, creating new flights, updating their data, and then deleting them once they depart. To be precise, the message sent to the topic is a string with the following pipe-separated fields: “destination”, “departure”, “terminal”, “status”, and “airline”. The key of the record is the flight code with the format “LS999”.
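To make the message format concrete, here is a minimal sketch of a parser for the record value. The field order follows the description above; the class name and the sample values are hypothetical and are not taken from the demo.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: splits a pipe-separated flight message into named fields.
final class FlightMessageParser {

    // Field names in the order in which they appear in the message.
    static final String[] FIELDS = {"destination", "departure", "terminal", "status", "airline"};

    static Map<String, String> parse(String value) {
        // The pipe is a regex metacharacter, so it must be escaped;
        // the -1 limit keeps trailing empty fields instead of dropping them.
        String[] parts = value.split("\\|", -1);
        if (parts.length != FIELDS.length) {
            throw new IllegalArgumentException(
                    "Expected " + FIELDS.length + " fields but got " + parts.length);
        }
        Map<String, String> fields = new LinkedHashMap<>();
        for (int i = 0; i < FIELDS.length; i++) {
            fields.put(FIELDS[i], parts[i]);
        }
        return fields;
    }

    public static void main(String[] args) {
        // Sample value invented for illustration.
        System.out.println(parse("Rome|10:45|2|Boarding|Example Air"));
    }
}
```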

Putting things together

The demo needs a Kafka cluster where a topic named departuresboard-001 is defined. You can use a Kafka server installed locally or any of the services offered in the cloud; for this demo, we used AWS MSK, which is what the following steps refer to.

AWS MSK

- Sign in to the AWS Console in the account you want to create your cluster in.

- Browse to the MSK create cluster wizard to start the creation.

- Given the limited needs of the demo, you can choose options for a cluster with only 2 brokers, one per availability zone, and of small size (kafka.t3.small).

- Choose the Unauthenticated access option and allow Plaintext connection.

- We choose a cluster configuration equal to the MSK default configuration with a single addition; since the demo handles only real-time events, we choose a very short retention time for messages: log.retention.ms = 2000

- Create a topic named departuresboard-001.

Lightstreamer Server

- Download Lightstreamer Server (Lightstreamer Server comes with a free non-expiring demo license for 20 connected users) from the Lightstreamer Download page, and install it, as explained in the GETTING_STARTED.TXT file in the installation home directory.

- Make sure that Lightstreamer Server is not running.

- Get the deploy.zip file from the latest release of the Demo GitHub project, unzip it, and copy the kafkademo folder into the adapters folder of your Lightstreamer Server installation.

- Update the adapters.xml file, setting the “kafka_bootstrap_servers” parameter to the connection string of the cluster created in the previous section; to retrieve this information, use the steps below:

- Open the Amazon MSK console at https://console.aws.amazon.com/msk/.

- Wait for the status of your cluster to become Active. This might take several minutes. After the status becomes Active, choose the cluster name. This takes you to a page containing the cluster summary.

- Choose View client information.

- Copy the connection string for plaintext authentication.

- [Optional] Customize the logging settings in the log4j configuration file kafkademo/classes/log4j2.xml.

- To avoid dealing with authentication, the machine running the Lightstreamer server must be in the same VPC as the MSK cluster.

- Start Lightstreamer Server.

Simulator Producer

From the LS_HOME/adapters/kafkademo/lib folder, you can start the simulator producer loop with this command:

Where bootstrap_server is the same information retrieved in the previous section, and the topic name is departuresboard-001.

Web Client

As a client for this demo, you can use the Lightstreamer - DynamoDB Demo - Web Client; you can follow the instructions in the Install section with one addition: in the src/js/const.js file, change the LS_ADAPTER_SET to KAFKADEMO.