Written by Harry Roberts on CSS Wizardry.

A few years ago, my first few days on a new web performance project were
always slow going. So many false starts, tedious workflows, and a complete lack
of efficiency really made it difficult for me to find momentum. All through no
fault of the client or the project, but through huge flaws in my own approach.
In a bid to address this, I introduced a new tool into my arsenal so that
I could hit the ground running much faster, and get answers to my clients much
sooner.

When first working on a new site-speed engagement, you need to work out quickly
where the slowdowns, blindspots, and inefficiencies lie. Unless a client hires
you to specifically improve the performance of one page, you'll need a broad
view of the whole site or application. Historically, I'd maybe look at Google
Analytics (or a RUM solution if the client had one already), but this is only
useful for showing me particular outliers, and not necessarily any patterns
across the whole project.

This kind of data doesn't necessarily help us build a bigger picture of the site as a whole.

Not only that, but Google Analytics, by default, only reports load times, which
are now almost entirely redundant in the field of performance optimisation.
I needed a more holistic way to visualise performance across an entire site,
and, ideally, something more useful than load times.

Identify Page Types

Visualising a whole site is a little overkill, especially considering
many sites may run to the tens of thousands of pages. Instead, what we can do
for almost every site in the world is split the project into page types. These
page types would also typically correspond to different templates in the
codebase. For example, my site has a homepage, content pages (e.g. my about
page), and article pages, like this one you're reading right now; an
ecommerce site would have a homepage, a product listings page (PLP), a product
details page (PDP), and a search results page (SRP).

By capturing and comparing the behaviour of these pages alone, we can
immediately begin to build up a representative picture of the state of the whole
site. And while we may not capture outliers this way, we can still audit those
in isolation as a separate body of work if required.

For the sake of the rest of the post, I'll be auditing an anonymised ecommerce
client of mine, but you'll be able to swap out my page types for your own with
ease.

Gathering Data

If a picture is worth a thousand words, a waterfall is worth a thousand
pictures. Let's start with the gold standard: WebPageTest.

Any time you run a test with WebPageTest, you'll get a table of different
milestones and metrics. We're interested in the first seven technical timings,
First Byte through Total Blocking Time.

We don't need to go any deeper than this.

All we need to do now is grab this information for each of our pages and paste
it into a spreadsheet. If you'd like a copy of the exact spreadsheet I use, you
can get hold of it here.

Larger variance means a less stable metric across pages.
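
If you'd rather script this step than use a spreadsheet, here is a minimal sketch of the same idea. It uses the coefficient of variation (standard deviation divided by the mean) rather than raw variance so that metrics on different scales can be compared; that choice is my assumption, not necessarily what the spreadsheet does. The numbers are made up for illustration and are not the client's data.

```python
from statistics import mean, pstdev

# Hypothetical timings in seconds, one value per page type
# (homepage, PLP, PDP, SRP). Illustrative only, not real results.
metrics = {
    "TTFB": [0.40, 0.42, 0.41, 0.39],
    "LCP": [4.10, 6.80, 2.90, 2.20],
}


def coefficient_of_variation(values):
    """Population standard deviation as a fraction of the mean."""
    return pstdev(values) / mean(values)


def rank_by_stability(table):
    """Metric names ordered from most stable to least stable."""
    return sorted(table, key=lambda name: coefficient_of_variation(table[name]))


for name in rank_by_stability(metrics):
    print(f"{name}: {coefficient_of_variation(metrics[name]):.1%}")
```

A metric near the top of this ranking behaves the same on every page type; one near the bottom deserves a closer look page by page.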

With this suite of numbers, I can now start to make assessments.

I can see from the screenshot above that TTFB is my most stable metric: no one
page appears to have particularly expensive database queries or API calls on the
back-end. Conversely, LCP is far more erratic, meaning that we're likely to
have highly different content page to page (the metric isn't bad, per se, it's
highly variable), and that it's potentially not equally optimised or delivered.
Everything else is a shade in between, and at this stage offers no real
insights. It's when we begin to graph the data that useful patterns emerge.

Visualising the Data

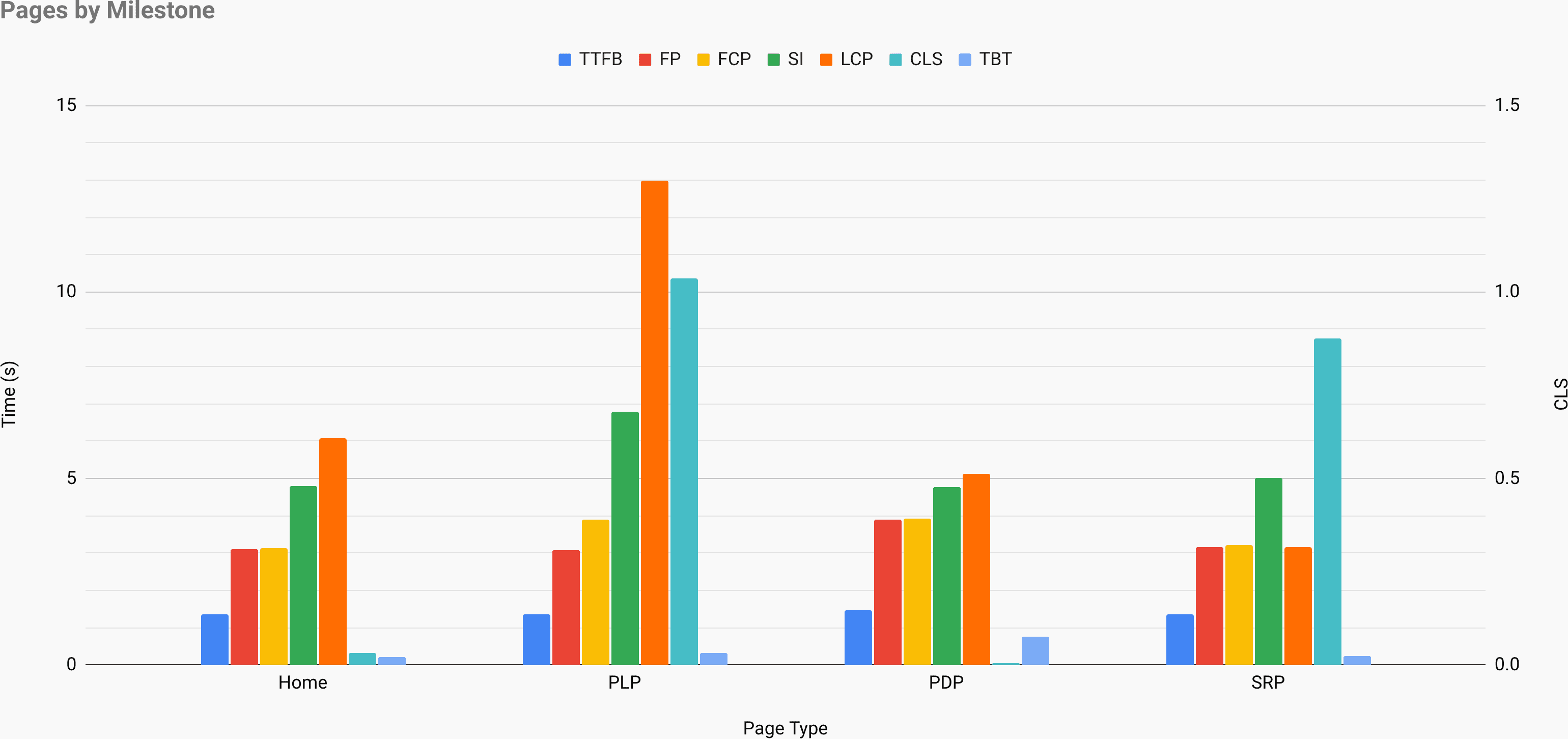

In a separate sheet, available at the same link as before, I've simply graphed
the data by page type.

CLS is not measured in seconds, and thus appears plotted separately against the right Y-axis.

It might be a good idea to open the above graph image up in a new tab and keep it
handy during the rest of this post.

The whole point of the exercise that follows is to allow me to move quickly,
spotting patterns from afar, and not having to do any slow or meticulous work
yet. I want to be able to form hypotheses and draw conclusions without viewing
a single URL or a line of source code. That work is what comes next.

For now, I'm usually sat with a coffee, some tunes on, and an old-school pen and
paper making notes. What I'm hoping to work out is where I need to look first
when I get into the audit proper. I want to rule out any dead ends or false
starts. I want to maximise the work not done.

Let’s dig in!

Building the Map

Firstly, I want to compare whole pages at a time. Immediately, I can see
that the PLP is by far the worst offender. Almost all of its bars are higher
than other pages. This means that I'll likely end up giving it a little more
attention than the others. Next, the other three pages are pretty similar, with
the homepage being marginally worse.

Next, I'll look for any individual outliers or extremities. Immediately I can
see that we have an outlying Largest Contentful Paint on the homepage and an
outlying Cumulative Layout Shift on the search results page. With my pen and
paper, I'll make a note of investigating these specifically in my testing.

After extremities, I'd like to actually find consistencies. Once again
noting that TTFB is very consistent (as seen in the table view), I can draw the
conclusion that back-end time (and whatever else might be happening in there)
is uniformly consistent across each page type. This means that I likely don't
need to do any specific work on any individual pages, but also suggests that any
general back-end improvements I make in area A will also be felt in areas
B and C, too. But perhaps most importantly, the stability of TTFB means that
all subsequent milestones are measured from a very reliable baseline: I don't
need to make any on the fly adjustments to other metrics in order to cross
reference them. Put another way, if one page's TTFB was an entire second later,
I'd need to account for that second when comparing deltas between any of the
bars.
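
To make that last point concrete, here is a small sketch of what "accounting for that second" could look like if TTFB were not stable: re-express every milestone as time elapsed since TTFB. The function name and the two pages are hypothetical, invented purely for illustration.

```python
def rebase_to_ttfb(page):
    """Return each later milestone as seconds elapsed since TTFB."""
    ttfb = page["TTFB"]
    return {
        name: round(value - ttfb, 3)
        for name, value in page.items()
        if name != "TTFB"
    }


# Two hypothetical pages with identical front-end behaviour,
# except that page_b's server responds a full second later.
page_a = {"TTFB": 0.4, "FP": 1.6, "FCP": 1.6}
page_b = {"TTFB": 1.4, "FP": 2.6, "FCP": 2.6}

print(rebase_to_ttfb(page_a))
print(rebase_to_ttfb(page_b))
```

Once rebased, the two pages look the same, which is exactly the comparison a stable TTFB gives you for free.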

Which brings me onto the next bit: deltas. This is where you'll find some really
fascinating insights that can run surprisingly deep.

Gaps between TTFB and First Paint can, by and large, be considered your
critical path. This is basically how many render blocking resources each page
has. Of course, it's a little more complex than that, but for this exercise it's
an incredibly reliable proxy. Now here's where it gets really interesting:

If all of our TTFBs are pretty much identical, then any outlying FPs are
indicative of a different amount of render blocking resources. Given that render
blocking resources live in the head of the document, this implies differing
head tags on that page. Note that the PDP's FP is almost a second slower than
other pages? I think the PDP has something different about it. Is it simply an
additional render-blocking resource or two? Or, perhaps a more extreme scenario
is that it has entirely different head tags? Maybe this is an entirely
different application? It's not unheard of.
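
As a rough sketch of that reasoning, the snippet below computes the TTFB to First Paint delta for each page type and flags any page whose delta sits well above the median. The 0.5 second tolerance, the function names, and all of the timings are assumptions for illustration only.

```python
from statistics import median


def critical_path_deltas(pages):
    """Seconds between TTFB and First Paint for each page type."""
    return {name: round(p["FP"] - p["TTFB"], 3) for name, p in pages.items()}


def flag_slow_critical_paths(pages, tolerance=0.5):
    """Pages whose TTFB-to-FP delta exceeds the median by more than tolerance."""
    deltas = critical_path_deltas(pages)
    typical = median(deltas.values())
    return [name for name, delta in deltas.items() if delta - typical > tolerance]


# Hypothetical timings in seconds; TTFB is deliberately uniform.
pages = {
    "home": {"TTFB": 0.4, "FP": 1.5},
    "plp": {"TTFB": 0.4, "FP": 1.6},
    "pdp": {"TTFB": 0.4, "FP": 2.5},
    "srp": {"TTFB": 0.4, "FP": 1.5},
}

print(flag_slow_critical_paths(pages))
```

Any page this flags is a candidate for a different set of head tags, and so a good first place to look once the audit proper begins.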

The next thing I want to focus on is deltas between First Paint and First
Contentful Paint. FP is defined as the first pixel, no matter what it is,
being put on the screen. FCP, on the other hand, is the first image- or
text-pixel rendered to the screen. Note that most pages share near-identical FP
and FCP? This is a strong suggestion that a page is either:

- embedding its logo in the HTML or CSS rather than using an external image, or;
- making use of `font-display` for its web fonts, or;
- not using web fonts at all.

Basically, the first- and first-contentful paints being identical tells us that
some text or imagery was rendered in the very first pass. This also means that
if we manage to improve FP, we're likely to improve FCP for free!

The PLP, however, does show a difference between the two. I'll make a note in my
pad to investigate font-loading strategies on this page (font-based issues are,
in my experience, more common than image-based ones, though I won't rule them out
completely).
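
The FP versus FCP check is simple enough to express in a few lines. This is only a sketch: the 0.1 second tolerance for "near-identical" is my assumption, and the timings are invented.

```python
def paints_together(fp, fcp, tolerance=0.1):
    """True when FCP lands within `tolerance` seconds of FP."""
    return (fcp - fp) <= tolerance


# Hypothetical (FP, FCP) pairs in seconds.
pages = {"home": (1.5, 1.5), "plp": (1.6, 2.3)}

for name, (fp, fcp) in pages.items():
    if paints_together(fp, fcp):
        print(f"{name}: content arrives with the first paint")
    else:
        print(f"{name}: content lags the first paint, check fonts and images")
```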

Now, let's move on to gaps between First Contentful Paint and Speed
Index. SI is a measure of the visual completeness of above the fold content
over time. It's a little more complex than that, but it's
basically dealing with visual progress. The larger the gap between FCP and SI,
the longer the tail of visual completeness is. That's to say, you may have many
different regions of content in the viewport that all render at different times,
as opposed to all popping in at once. This may or may not be desirable depending on
how you've designed the ATF experience: you shouldn't lazy-load anything ATF,
but skeleton screens might negatively impact your SI despite being an ultimately
better experience. Generally all I'm looking out for here are any long tails
that suggest I'm under-prioritising above the fold content.
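
To see why a long tail pushes Speed Index away from FCP, it helps to sketch how the metric is calculated: it accumulates the fraction of the viewport that is still incomplete over time. The function below is a simplified illustration working from hypothetical (time, completeness) samples; real tools derive those samples from video frames.

```python
def speed_index(frames):
    """Accumulate the incomplete fraction of the viewport over time.

    frames: (time_ms, completeness) pairs sorted by time, where
    completeness runs from 0.0 to 1.0 and the last frame is 1.0.
    """
    total = 0.0
    last_time, last_complete = 0, 0.0
    for time_ms, complete in frames:
        # Each interval counts for however incomplete the page was during it.
        total += (time_ms - last_time) * (1.0 - last_complete)
        last_time, last_complete = time_ms, complete
    return total


# Both hypothetical pages first paint content at 1000 ms.
all_at_once = [(1000, 1.0)]
long_tail = [(1000, 0.5), (3000, 1.0)]

print(speed_index(all_at_once))  # 1000.0
print(speed_index(long_tail))    # 2000.0
```

The first page has no gap between FCP and SI; the second, where half the viewport arrives two seconds later, has a gap of a full second.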

More interestingly, let's take a look at Speed Index vs. Largest Contentful
Paint. This is a little harder to explain, but for the most part, a large gap
between SI and LCP suggests that your largest contentful paint was one of the
last above the fold paints: there was a large region missing for a long while,
and its late arrival is apparent in the large gap. On the other hand, a small
gap suggests that your largest contentful paint was relatively early, but
a smaller ATF paint is pushing your SI out, e.g. a late-loaded chat client, or
a small cookie banner, etc. Ultimately, this gap might be telling me about how
the ATF content came together: was it a single large region that
painted late, or was it a long tail of smaller regions pushing metrics the other
way?

This means we can infer from the graph that a large but late-rendered region on
the PLP is pulling out our Speed Index, but a high SI and low LCP on the SRP
means we're likely dealing with a long-tail rendering problem which is less
associated with a large region.
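
That rule of thumb can be written down as a tiny classifier. Treat it as a note-taking aid rather than a diagnosis: the one second threshold and the wording of the labels are my assumptions, and the sample values are invented.

```python
def describe_si_lcp_gap(si, lcp, threshold=1.0):
    """Guess how above-the-fold content came together.

    si and lcp are in seconds. A late LCP relative to SI points to one
    large region painting late; otherwise smaller regions are the suspect.
    """
    if lcp - si >= threshold:
        return "single large region painted late"
    return "long tail of smaller regions"


# Hypothetical values in seconds.
print("PLP:", describe_si_lcp_gap(si=3.0, lcp=6.8))
print("SRP:", describe_si_lcp_gap(si=3.5, lcp=2.2))
```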

Next up, let's look at Cumulative Layout Shift and other milestones. While
CLS is a Core Web Vital, it's not a milestone measured in timings. Instead, it
focuses on layout stability, which is actually not much at all about speed. That
said, we can still join some dots. It's quite obvious to me that the large LCP on the
PLP is pushing out our CLS: whatever content is late to render doesn't have any
placeholder or skeleton. However, and slightly more obscure, the high CLS on the
SRP is not joined by a high LCP. This suggests two possible scenarios:

- Perhaps the search results page is loading its individual images without
  `width` or `height` attributes, meaning that while they aren't part of one
  LCP, lots of small images are nudging the layout around. This would support the
  long-tail rendering suspicion we came up with before, and is certainly a
  behaviour that would contribute to a high CLS.
- Less likely, but remember that SI is only measuring above the fold
  completeness, so there's a chance that a huge initial CLS pushed a lot of
  render-regions off-screen, improving the SI score in the process. However,
  I would expect to see this also reflected in a more severe LCP, too.
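
The first scenario is easier to believe once you see how layout shifts add up. Each individual shift is scored as its impact fraction (how much of the viewport was affected) multiplied by its distance fraction (how far things moved relative to the viewport). The sketch below assumes every shift falls inside a single session window, so the scores simply sum; the fractions are invented for illustration.

```python
def layout_shift_score(impact_fraction, distance_fraction):
    """Score for one shift: affected viewport share times distance moved."""
    return impact_fraction * distance_fraction


def window_score(shifts):
    """Total score, assuming all shifts land in one session window."""
    return sum(layout_shift_score(i, d) for i, d in shifts)


# Hypothetical: twelve thumbnails each nudging 30% of the viewport by 3%,
# versus one hero region moving 60% of the viewport by 15%.
many_small_shifts = [(0.30, 0.03)] * 12
one_big_shift = [(0.60, 0.15)]

print(round(window_score(many_small_shifts), 3))
print(round(window_score(one_big_shift), 3))
```

In this made-up case the dozen small nudges outscore the single large shift, without any one of them being a candidate for LCP.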

Lastly, Total Blocking Time can't be reliably or meaningfully compared
against many other metrics, as other milestones (except CLS) are network-bound
and TBT is CPU-bound. The only correlation one might draw would be between
a large TTFB to First Paint delta and a heightened TBT metric. Remember, a large
gap between TTFB and FP is indicative of more synchronous assets in the
head; if TBT goes up as well, it's more likely that those assets are JS and not
CSS. More often than not, I would simply view TBT in isolation.
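
For reference, TBT is built from long main-thread tasks: any task over 50 ms contributes the portion beyond 50 ms. A minimal sketch, assuming the list already contains only the tasks that ran between First Contentful Paint and Time to Interactive (the durations themselves are hypothetical):

```python
LONG_TASK_MS = 50  # tasks longer than this count as blocking


def total_blocking_time(task_durations_ms):
    """Sum the blocking portion (time beyond 50 ms) of every long task."""
    return sum(d - LONG_TASK_MS for d in task_durations_ms if d > LONG_TASK_MS)


# Hypothetical main-thread task durations in milliseconds.
tasks = [30, 120, 250, 45]
print(total_blocking_time(tasks))  # 270
```

Because this is driven entirely by how long scripts occupy the CPU, it says little about when bytes arrived, which is why it sits apart from the network-bound milestones.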

Final Word

All of this information was gleaned without having to visit a single page or view
a single line of source code. I didn't need to pick through a single bit of
DevTools! This is the approach I take on every new audit project so that I can
quickly identify what issues might need investigation first, what liabilities
might be where, and more importantly, what work I can avoid doing.

Being able to gather this many clues so quickly sets me up incredibly well ahead
of digging into the project fully; it tells me exactly where to look first. This
entire exercise is a great way of knowing where to start, which was always one
of the most challenging parts of the process for me.