Chances are high that if you're writing Ruby code, you're using Sidekiq to handle background processing. If you are coming from ActiveJob or another background, stay tuned: some of the tips covered here can be applied there as well.

Folks use (Sidekiq) background jobs for different cases. Some crunch numbers, some dispatch welcome emails to users, and some schedule data syncing. Whatever your case may be, you might eventually run into a requirement to avoid duplicate jobs. By duplicate jobs, I mean two jobs that do the exact same thing. Let's dig into that a bit.

Why De-Duplicate Jobs?

Imagine a scenario where your job looks like the following:

```ruby
class BookSalesWorker
  include Sidekiq::Worker

  def perform(book_id)
    crunch_some_numbers(book_id)

    upload_to_s3
  end

  # ...
end
```

The BookSalesWorker always does the same thing: it queries the DB for a book based on the book_id and fetches the latest sales data to calculate some numbers. Then, it uploads them to a storage service. Keep in mind that every time a book is sold on your website, you will have this job enqueued.

Now, what if you got 100 sales at once? You'd have 100 of these jobs doing the exact same thing. Maybe you are fine with that. You don't care about S3 writes that much, and your queues aren't that congested, so you can handle the load.

But, "does it scale?"™️

Well, definitely not. If you start receiving more sales for more books, your queue will quickly pile up with unnecessary work. If you have 100 jobs that do the same thing for a single book, and you have 10 books selling in parallel, you are now 1000 jobs deep in your queue, when in reality you could just have 10 jobs, one for each book.
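To put numbers on that, here is a tiny plain-Ruby sketch (no Sidekiq involved) that models every sale as an enqueue request carrying a book ID. The `book_ids` and `requests` arrays are purely illustrative; collapsing identical requests is the whole idea behind de-duplication.

```ruby
# 10 books, each selling 100 copies at roughly the same time.
book_ids = (1..10).to_a
requests = book_ids.flat_map { |id| Array.new(100, id) }

# Without de-duplication, every sale becomes its own job.
naive_job_count = requests.size

# With de-duplication, one job per distinct book ID is enough.
unique_job_count = requests.uniq.size

puts "naive: #{naive_job_count}, de-duplicated: #{unique_job_count}"
# prints "naive: 1000, de-duplicated: 10"
```

In practice it is harder than calling `uniq`, because requests arrive over time and from many processes, which is exactly what the approaches below have to deal with.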

Now, let's go through a couple of options for preventing duplicate jobs from piling up in your queues.

1. DIY Method

If you are not a fan of external dependencies and complex logic, you can go ahead and add some custom solutions to your codebase. I created a sample repo so you can try out our examples first-hand. There will be a link to the example in each approach.

1.1 One Flag Strategy

You can add one flag that decides whether to enqueue a job or not. One might add a sales_enqueued_at column to their Book table and maintain that one. For example:

```ruby
module BookSalesService
  module_function

  def schedule_with_one_flag(book)
    # A book that has never been enqueued is always eligible.
    if book.sales_enqueued_at.nil? || book.sales_enqueued_at < 10.minutes.ago
      book.update(sales_enqueued_at: Time.current)

      BookSalesWorker.perform_async(book.id)
    end
  end
end
```

That means no new jobs will be enqueued until 10 minutes have passed since the last job got enqueued. After 10 minutes have passed, we update sales_enqueued_at and enqueue a new job.
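Here is a minimal sketch of the same 10-minute window that you can run without Rails or a database. It assumes a hypothetical `Book` struct in place of the ActiveRecord model, passes the current time in explicitly instead of calling `Time.current`, and uses a plain array as a stand-in for the Sidekiq queue. A book with no `sales_enqueued_at` yet is treated as eligible.

```ruby
# Hypothetical stand-in for the ActiveRecord Book model.
Book = Struct.new(:id, :sales_enqueued_at, keyword_init: true)

WINDOW = 10 * 60 # 10 minutes, in seconds

# Enqueues (appends to `queue`) only if no job was enqueued for this book
# within the last WINDOW seconds. Returns true when a job was enqueued.
def schedule_with_one_flag(book, queue, now: Time.now)
  last = book.sales_enqueued_at
  return false if last && last >= now - WINDOW

  book.sales_enqueued_at = now
  queue << book.id # stands in for BookSalesWorker.perform_async(book.id)
  true
end

queue = []
book = Book.new(id: 42)
t0 = Time.at(0)

schedule_with_one_flag(book, queue, now: t0)           # first sale: enqueued
schedule_with_one_flag(book, queue, now: t0 + 5 * 60)  # 5 min later: skipped
schedule_with_one_flag(book, queue, now: t0 + 11 * 60) # 11 min later: enqueued
p queue # => [42, 42]
```

Note that the skipped sale is simply dropped, which is the trade-off discussed in the sum-up below.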

Another thing you can do is set one flag that is a boolean, e.g. crunching_sales. You set crunching_sales to true before the first job is enqueued. Then, you set it to false once the job is complete. All other jobs that try to get scheduled will be rejected until crunching_sales is false.

You can try this approach in the example repo I created.
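One caveat with the boolean flag: if you read crunching_sales and then write it in two separate steps, two sales arriving at the same moment can both see false and both enqueue a job. The check and the update need to happen atomically. In a Rails app, one way to get that is a conditional update such as `Book.where(id: book.id, crunching_sales: false).update_all(crunching_sales: true)`, which returns the number of rows it changed, so a result of 1 means you won the flag. The sketch below is a plain-Ruby model of that idea, with a hypothetical `FlagStore` class and a `Mutex` standing in for the database's atomic update.

```ruby
# Hypothetical in-memory stand-in for the books table. The Mutex plays the
# role of the database applying "check the flag, then set it" atomically.
class FlagStore
  def initialize
    @flags = Hash.new(false) # book_id => crunching_sales
    @lock = Mutex.new
  end

  # Sets crunching_sales to true and returns true only if it was false before.
  def claim(book_id)
    @lock.synchronize do
      return false if @flags[book_id]

      @flags[book_id] = true
    end
  end

  # What the worker does once it has finished crunching.
  def release(book_id)
    @lock.synchronize { @flags[book_id] = false }
  end
end

store = FlagStore.new
queue = Queue.new # thread-safe stand-in for the Sidekiq queue

# 50 concurrent "sales" of the same book: only one of them enqueues a job.
threads = Array.new(50) { Thread.new { queue << 7 if store.claim(7) } }
threads.each(&:join)
puts queue.size # => 1

store.release(7)           # the job finished, so the flag is cleared
reclaimed = store.claim(7) # the next sale may enqueue again
puts reclaimed # => true
```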

1.2 Two Flags Strategy

If "locking" a job from being enqueued for 10 minutes sounds too scary, but you are still fine with extra flags in your code, then the next suggestion might interest you.

You can add another flag next to the existing sales_enqueued_at: sales_calculated_at. Then our code will look something like this:

```ruby
module BookSalesService
  module_function

  def schedule_with_two_flags(book)
    # Enqueue only if nothing was enqueued yet, or the last run has finished.
    if book.sales_enqueued_at.nil? ||
       (book.sales_calculated_at && book.sales_enqueued_at <= book.sales_calculated_at)
      book.update(sales_enqueued_at: Time.current)

      BookSalesWorker.perform_async(book.id)
    end
  end
end

class BookSalesWorker
  include Sidekiq::Worker

  def perform(book_id)
    book = Book.find(book_id)

    crunch_some_numbers(book_id)

    upload_to_s3

    # Mark the calculation as done so a new job can be enqueued.
    book.update(sales_calculated_at: Time.current)
  end

  # ...
end
```

To try it out, check out the instructions in the example repo.

Now we control the window of time between when a job is enqueued and when it finishes. During that window, no new job can be enqueued. While the job is running, sales_enqueued_at will be later than sales_calculated_at. When the job finishes running, sales_calculated_at will be later (more recent) than sales_enqueued_at, and a new job can be enqueued.
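The comparison can be sketched in plain Ruby as follows. This is a model under my own assumptions: a hypothetical `Book` struct with both timestamps, an explicit `now:` argument instead of `Time.current`, an array standing in for the queue, and a hypothetical `finish_job` helper in place of the worker's final update. It also spells out the nil cases the comparison alone doesn't cover: a book that was never enqueued is eligible, and a book whose first job hasn't finished yet is not.

```ruby
# Hypothetical stand-in for the Book model with both timestamps.
Book = Struct.new(:id, :sales_enqueued_at, :sales_calculated_at, keyword_init: true)

# A job may be enqueued when nothing was ever enqueued, or when the last
# calculation finished at or after the last enqueue (no job in flight).
def can_enqueue?(book)
  return true if book.sales_enqueued_at.nil?
  return false if book.sales_calculated_at.nil?

  book.sales_enqueued_at <= book.sales_calculated_at
end

def schedule_with_two_flags(book, queue, now:)
  return false unless can_enqueue?(book)

  book.sales_enqueued_at = now
  queue << book.id # stands in for BookSalesWorker.perform_async(book.id)
  true
end

# Stands in for the last line of BookSalesWorker#perform.
def finish_job(book, now:)
  book.sales_calculated_at = now
end

queue = []
book = Book.new(id: 1)
t = Time.at(0)

schedule_with_two_flags(book, queue, now: t)     # enqueued
schedule_with_two_flags(book, queue, now: t + 1) # skipped: job still running
finish_job(book, now: t + 2)
schedule_with_two_flags(book, queue, now: t + 3) # enqueued again
p queue # => [1, 1]
```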

Using two flags has a bonus: you could show the last time those sales numbers got updated in the UI. Then the users who read them will have an idea of how recent the data is. A win-win situation.

Flag Sum Up

It might be tempting to create solutions like these in times of need, but to me, they look a bit clumsy, and they add some overhead. I would recommend using them if your use case is simple, but as soon as it proves complex or insufficient, I'd urge you to try out other options.

A big con with the flag approach is that you will lose all the jobs that tried to enqueue during those 10 minutes. A big pro is that you are not bringing in dependencies, and it will reduce the number of jobs in your queues pretty quickly.

1.3 Traversing The Queue

Another approach you can take is to create a custom locking mechanism to prevent the same jobs from being enqueued. We will check the Sidekiq queue we are interested in and see if the job (worker) is already there. The code will look something like this:

```ruby
require 'sidekiq/api' # provides Sidekiq::Queue

module BookSalesService
  module_function

  def schedule_unique_across_queue(book)
    queue = Sidekiq::Queue.new('default')

    # Bail out if an identical BookSalesWorker job is already waiting.
    queue.each do |job|
      return if job.klass == BookSalesWorker.to_s &&
                job.args == [book.id]
    end

    BookSalesWorker.perform_async(book.id)
  end
end

class BookSalesWorker
  include Sidekiq::Worker

  def perform(book_id)
    crunch_some_numbers(book_id)

    upload_to_s3
  end

  # ...
end
```

In the example above, we are checking whether the 'default' queue has a job with the class name BookSalesWorker. We are also checking whether the job arguments match the book ID. If a BookSalesWorker job with the same book ID is in the queue, we return early and don't schedule another one.

Note that some duplicates might still get scheduled if you schedule jobs very quickly. The check only sees jobs that are still waiting in the queue, so if the queue is empty (for instance, because workers already picked up the earlier jobs), nothing stops another copy from being scheduled. That exact thing happened to me when testing it locally with:

```ruby
100.times { BookSalesService.schedule_unique_across_queue(book) }
```

You can try it out in the example repo.

The good thing about this approach is that you can traverse all queues to search for an existing job if you need to. The con is that you can still have duplicate jobs if your queue is empty and you schedule a lot of them at once. Also, you are potentially traversing all the jobs in the queue before scheduling one, which might be costly depending on the size of your queue.
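If you do decide to search every queue, the scan can at least stop at the first match. The sketch below is a plain-Ruby model: each queue is any Enumerable of job-like objects responding to `klass` and `args`, and a hypothetical `FakeJob` struct stands in for Sidekiq's job records so it runs without Redis. With real Sidekiq you would presumably pass `Sidekiq::Queue.all` (after `require 'sidekiq/api'`), since each `Sidekiq::Queue` is enumerable; verify that wiring against your Sidekiq version before relying on it.

```ruby
# Hypothetical stand-in for Sidekiq's job records, which expose #klass and #args.
FakeJob = Struct.new(:klass, :args)

# Returns true as soon as any queue holds a job of `klass` with exactly
# these `args`. Both `any?` calls short-circuit on the first match.
def duplicate_enqueued?(queues, klass, args)
  queues.any? do |queue|
    queue.any? { |job| job.klass == klass && job.args == args }
  end
end

default_queue = [FakeJob.new("BookSalesWorker", [1]), FakeJob.new("MailerWorker", [2])]
low_queue     = [FakeJob.new("BookSalesWorker", [2])]
queues = [default_queue, low_queue]

p duplicate_enqueued?(queues, "BookSalesWorker", [2]) # => true (found in low_queue)
p duplicate_enqueued?(queues, "BookSalesWorker", [3]) # => false
p duplicate_enqueued?(queues, "MailerWorker", [1])    # => false (args differ)
```

Short-circuiting only helps when a duplicate exists. When there is none, every job in every queue is still visited, so the cost concern above still applies.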

2. Upgrading to Sidekiq Enterprise

If you or your organization has some money lying around, you can upgrade to the Enterprise version of Sidekiq. It starts at $179 per month, and it has a cool feature that helps you avoid duplicate jobs. Unfortunately, I don't have Sidekiq Enterprise, but I believe their documentation is good enough. You can easily have unique (non-duplicated) jobs with the following code:

```ruby
class BookSalesWorker
  include Sidekiq::Worker
  sidekiq_options unique_for: 10.minutes

  def perform(book_id)
    crunch_some_numbers(book_id)

    upload_to_s3
  end

  # ...
end
```

And that's it. You have a job implementation similar to what we described in the 'One Flag Strategy' section. The job will be unique for 10 minutes, meaning that no other job with the same arguments can be scheduled in that time period.
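To build some intuition for `unique_for`, here is a toy, in-memory model of a time-limited uniqueness lock keyed on the job class plus its arguments. It is not Sidekiq Enterprise's implementation (which I haven't seen). The `UniqueFor` class and `push?` method are hypothetical, and the model only covers the time limit, so check the Enterprise documentation for any other conditions under which the lock is released.

```ruby
# Toy model of a time-limited uniqueness lock keyed on class + args.
class UniqueFor
  def initialize(ttl_seconds)
    @ttl = ttl_seconds
    @locks = {} # [klass, args] => time at which the lock expires
  end

  # Returns true (the job may be pushed) and takes the lock if there is no
  # live lock for this class/args pair; returns false otherwise.
  def push?(klass, args, now:)
    key = [klass, args]
    expires_at = @locks[key]
    return false if expires_at && now < expires_at

    @locks[key] = now + @ttl
    true
  end
end

lock = UniqueFor.new(10 * 60)
t = Time.at(0)

results = [
  lock.push?("BookSalesWorker", [1], now: t),          # first push: allowed
  lock.push?("BookSalesWorker", [1], now: t + 60),     # same args, 1 min later: blocked
  lock.push?("BookSalesWorker", [2], now: t + 60),     # different args: allowed
  lock.push?("BookSalesWorker", [1], now: t + 10 * 60) # window elapsed: allowed
]
p results # => [true, false, true, true]
```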

Pretty cool one-liner, huh? Well, if you have Sidekiq Enterprise and you just found out about this feature, I'm truly glad I helped. Most of us are not going to use it, so let's jump into the next solution.

3. sidekiq-unique-jobs To The Rescue

Yes, I know we are about to mention a gem. And yes, it has some Lua files in it, which might put some people off. But bear with me, it's a really sweet deal you are getting with it. The sidekiq-unique-jobs gem comes with a lot of locking and other configuration options, probably more than you need.

To get started quickly, add the sidekiq-unique-jobs gem to your Gemfile, run bundle, and configure your worker as shown:

```ruby
class UniqueBookSalesWorker
  include Sidekiq::Worker

  sidekiq_options lock: :until_executed,
                  on_conflict: :reject

  def perform(book_id)
    book = Book.find(book_id)

    logger.info "I am a Sidekiq Book Sales worker - I started"
    sleep 2 # simulate some work
    logger.info "I am a Sidekiq Book Sales worker - I finished"

    book.update(sales_calculated_at: Time.current, crunching_sales: false)
  end
end
```

There are a lot of options, but I decided to keep things simple and use this one:

```ruby
sidekiq_options lock: :until_executed, on_conflict: :reject
```

The lock: :until_executed option will lock the first UniqueBookSalesWorker job until it's executed. With on_conflict: :reject, we are saying that we want all other jobs that try to get executed to be rejected into the dead queue. What we achieved here is similar to what we did in our DIY examples above.

A slight improvement over those DIY examples is that we now have a kind of log of what happened. To get a sense of how it looks, let's try the following:

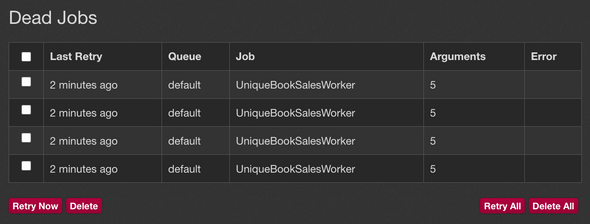

```ruby
5.times { UniqueBookSalesWorker.perform_async(Book.last.id) }
```

Only one job will fully execute, and the other four jobs will be sent off to the dead queue, where you can retry them. This approach differs from our DIY examples, where duplicate jobs were simply ignored.
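The behavior above can be modeled in a few lines of plain Ruby. This is a toy under my own assumptions, not how sidekiq-unique-jobs works internally (the gem keeps its locks in Redis and manipulates them with Lua scripts). The `ToyUniqueQueue` class and its `push`/`drain` methods are hypothetical, and for simplicity rejection happens at push time. The lock is taken when a job is pushed and released once the job has executed, and conflicting pushes land in a `dead` array.

```ruby
# Toy model of an "until executed" lock with a "reject" conflict strategy.
class ToyUniqueQueue
  attr_reader :enqueued, :dead, :executed

  def initialize
    @locks = {}    # lock key => true while a job holds it
    @enqueued = [] # jobs waiting to run
    @dead = []     # rejected duplicates (think: Sidekiq's dead set)
    @executed = [] # jobs that actually ran
  end

  # Takes the lock when pushing; a duplicate push is rejected to `dead`.
  def push(klass, args)
    key = [klass, args]
    if @locks[key]
      @dead << key
    else
      @locks[key] = true
      @enqueued << key
    end
  end

  # Runs every waiting job, then releases its lock ("until executed").
  def drain
    until @enqueued.empty?
      key = @enqueued.shift
      @executed << key
      @locks.delete(key)
    end
  end
end

queue = ToyUniqueQueue.new
5.times { queue.push("UniqueBookSalesWorker", [1]) }
queue.drain

p queue.executed.size # => 1
p queue.dead.size     # => 4

queue.push("UniqueBookSalesWorker", [1]) # lock was released, so this is accepted
p queue.enqueued.size # => 1
```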

There are a lot of options to choose from when it comes to locking and conflict resolution. I suggest you consult the gem's documentation for your specific use case.

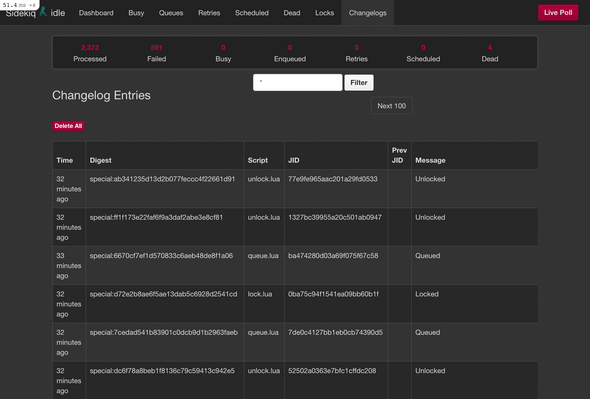

Nice Insights

The great thing about this gem is that you can view the locks and the history of what went down in your queues. All you need to do is add the following lines to your config/routes.rb:

```ruby
# config/routes.rb
require 'sidekiq/web'              # Sidekiq's web UI
require 'sidekiq_unique_jobs/web'  # adds the lock and changelog pages

Rails.application.routes.draw do
  mount Sidekiq::Web, at: '/sidekiq'
end
```

It will include the original Sidekiq web UI, but it will also give you two more pages: one for job locks and the other for the changelog. This is how it looks:

Notice how we have two new pages, "Locks" and "Changelogs". Pretty cool feature.

You can try all of this in the example project, where the gem is installed and ready to go.

Why Lua?

First of all, I'm not the author of the gem, so I'm just assuming things here. The first time I saw the gem, I wondered: why use Lua inside a Ruby gem? It might appear odd at first, but Redis supports running Lua scripts. I guess the author of the gem had this in mind and wanted to do more nimble logic in Lua.

If you look at the Lua files in the gem's repo, they aren't that complicated. All of the Lua scripts are called from the Ruby code in SidekiqUniqueJobs::Script::Caller. Please take a look at the source code; it is interesting to read and figure out how things work.
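A likely reason Lua fits here is that Redis runs each script atomically: no other client's command executes in the middle of it, so multi-step logic such as "check who owns this lock, then delete it" can't be interleaved with another process. The Lua below is the well-known unlock script from the Redis distributed-locks documentation; with redis-rb you would run it via `redis.eval(UNLOCK_SCRIPT, keys: [key], argv: [token])`. So that the sketch runs without a Redis server, a hypothetical `FakeRedis` class models the same logic, with a `Mutex` standing in for Redis's atomic script execution. None of this is taken from sidekiq-unique-jobs itself.

```ruby
require "securerandom"

# Lua that Redis would run atomically: delete the key only if it still
# holds our token. Shown for reference; the model below does not execute it.
UNLOCK_SCRIPT = <<~LUA
  if redis.call("get", KEYS[1]) == ARGV[1] then
    return redis.call("del", KEYS[1])
  else
    return 0
  end
LUA

# Hypothetical in-memory stand-in for Redis. The Mutex models the fact that
# Redis runs a whole script without interleaving other commands.
class FakeRedis
  def initialize
    @data = {}
    @mutex = Mutex.new
  end

  # Like SET key value NX: only sets the key if it does not exist yet.
  def set_nx(key, value)
    @mutex.synchronize do
      return false if @data.key?(key)

      @data[key] = value
      true
    end
  end

  # Same logic as UNLOCK_SCRIPT, executed as one atomic step.
  def unlock(key, token)
    @mutex.synchronize do
      return 0 unless @data[key] == token

      @data.delete(key)
      1
    end
  end
end

redis = FakeRedis.new
key = "lock:BookSalesWorker:1"
mine = SecureRandom.hex(8)
theirs = SecureRandom.hex(8)

redis.set_nx(key, mine)     # => true, we hold the lock
redis.set_nx(key, theirs)   # => false, nobody else can take it
p redis.unlock(key, theirs) # => 0, a non-owner cannot release it
p redis.unlock(key, mine)   # => 1, the owner releases it
```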

Alternative gem

If you use ActiveJob extensively, you can try the active-job-uniqueness gem. The idea is similar, but instead of custom Lua scripts, it uses Redlock to lock items in Redis.

To have a unique job using this gem, you can imagine a job like this one:

```ruby
class BookSalesJob < ActiveJob::Base
  unique :until_executed

  def perform
    # ...
  end
end
```

The syntax is less verbose but very similar to the sidekiq-unique-jobs gem. It might solve your case if you rely heavily on ActiveJob.

Final Thoughts

I hope you gained some knowledge about how to deal with duplicate jobs in your app. I definitely had fun researching and playing around with different solutions. If you didn't end up finding what you were looking for, I hope that some of the examples inspired you to create something of your own.

Here's the example project with all the code snippets.

I'll see you in the next one, cheers.