Transfer Learning

Transfer learning is a deep learning approach in which a model that has been trained for one task is used as a starting point for a model that performs a similar task. Transfer learning is useful for tasks for which a variety of pretrained models exist, such as computer vision and natural language processing.

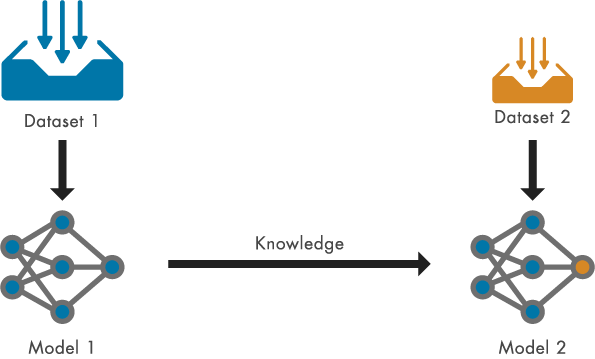

For computer vision, many popular convolutional neural networks (CNNs) are pretrained on the ImageNet dataset, which contains over 14 million images and a thousand classes of images. Updating and retraining a network with transfer learning is usually much faster and easier than training a network from scratch.

Figure: Transfer knowledge from a pretrained model to another model that can be trained faster with less training data.

To get a pretrained model, you can explore the MATLAB Deep Learning Model Hub to access models by category and get tips on choosing a model. You can also get pretrained networks from external platforms. You can convert a model from TensorFlow™, PyTorch®, or ONNX™ to a MATLAB model by using an import function, such as the importNetworkFromTensorFlow function.
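As a rough illustration of the import step, the sketch below tries to import a model from disk. The file and folder names are hypothetical placeholders, and each import function needs its corresponding converter support package installed, so treat this as a starting point rather than a complete workflow.

```matlab
% Hypothetical paths: replace them with the location of your own exported model.
onnxFile = "myModel.onnx";      % assumed ONNX model file
tfFolder = "mySavedModel";      % assumed TensorFlow SavedModel folder

net = [];
if isfile(onnxFile)
    % Needs the Deep Learning Toolbox Converter for ONNX Model Format.
    net = importNetworkFromONNX(onnxFile);
elseif isfolder(tfFolder)
    % Needs the Deep Learning Toolbox Converter for TensorFlow Models.
    net = importNetworkFromTensorFlow(tfFolder);
else
    disp("No model found at the placeholder paths; nothing was imported.")
end

if ~isempty(net)
    % Review the imported network before training; some imported layers
    % may need attention (for example, custom or placeholder layers).
    summary(net)
end
```

If neither placeholder path exists, the script only prints a message, so it is safe to run as-is before pointing it at a real model.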

In R2024a, the imagePretrainedNetwork function was introduced and you can use it to load most pretrained image models. This function loads a pretrained neural network and optionally adapts the network architecture for transfer learning and fine-tuning workflows. For an example, see Retrain Neural Network to Classify New Images.

net = imagePretrainedNetwork("squeezenet",NumClasses=5);
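To check that the network was adapted as expected, you can pass a dummy image through it and count the output scores. The following is a minimal sketch, assuming R2024a or later with Deep Learning Toolbox; the number of classes and the random input are placeholders, not part of the original example.

```matlab
% Assumes Deep Learning Toolbox R2024a or later.
numClasses = 5;   % placeholder: number of classes in the new task
net = imagePretrainedNetwork("squeezenet",NumClasses=numClasses);

% Make sure every learnable parameter has a value before calling predict.
% Parameters that already contain pretrained values are left unchanged.
if ~net.Initialized
    net = initialize(net);
end

% Read the expected image size from the input layer.
inputSize = net.Layers(1).InputSize;

% Pass one random image through the network (spatial, spatial, channel, batch).
X = dlarray(rand([inputSize 1],"single"),"SSCB");
scores = predict(net,X);

fprintf("Input size: %s, number of output scores: %d\n", ...
    mat2str(inputSize),numel(scores));
```

The number of output scores should match the number of classes you requested, which is a quick sanity check before you start retraining.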

You can also prepare networks for transfer learning interactively using the Deep Network Designer app. Load a pretrained built-in model or import a model from PyTorch or TensorFlow, and then modify the last learnable layer of the model with the app. This workflow is shown in the following animated figure and described in detail in the example Prepare Network for Transfer Learning Using Deep Network Designer.

Animated Figure: Interactive transfer learning using the Deep Network Designer app.

GPUs and Parallel Computing

High-performance GPUs have a parallel architecture that is efficient for deep learning, as deep learning algorithms are inherently parallel. When GPUs are combined with clusters or cloud computing, training time for deep neural networks can be significantly reduced. Use the trainnet function to train networks, which uses a GPU if one is available. For more information on how to take advantage of GPUs for training, see Scale Up Deep Learning in Parallel, on GPUs, and in the Cloud.
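By default the hardware choice is automatic, but you can also state it explicitly in the training options. The sketch below is one way to do that, assuming Deep Learning Toolbox is installed; the solver and mini-batch size are arbitrary placeholders.

```matlab
% canUseGPU returns true when a supported GPU is available for computation.
if canUseGPU
    env = "gpu";
else
    env = "cpu";
end

% "sgdm" and the mini-batch size are placeholder choices for illustration.
% Other ExecutionEnvironment values, such as "multi-gpu", need
% Parallel Computing Toolbox and suitable hardware.
options = trainingOptions("sgdm", ...
    ExecutionEnvironment=env, ...
    MiniBatchSize=128);

fprintf("Training would run on: %s\n",options.ExecutionEnvironment);
```

Leaving ExecutionEnvironment at its default value of "auto" gives the same GPU-if-available behavior; setting it explicitly is mainly useful when you want training to fail loudly if the expected hardware is missing.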

The following tips will help you time your code when working with GPUs:

- Consider “warming up” your GPUs. Your code will run slower in the first 3-4 runs. So, measure the training time (see gputimeit) after the initial runs.

- Don’t forget synchronization when working with GPUs. See the wait(gpudevice) function to learn how to synchronize GPUs.
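Both tips can be sketched together in a few lines. This example assumes Parallel Computing Toolbox and a supported GPU; the matrix multiplication is only a stand-in workload, not a training step.

```matlab
tAuto = NaN;      % time measured with gputimeit
tManual = NaN;    % time measured with tic/toc plus explicit synchronization

if canUseGPU
    gpu = gpuDevice;                       % currently selected GPU
    A = rand(4000,"single","gpuArray");    % stand-in data on the GPU
    f = @() A*A;                           % stand-in workload

    % Warm up: the first few runs include one-time setup costs.
    for k = 1:4
        C = f();
    end
    wait(gpu);    % block until the warm-up work has finished

    % gputimeit runs the function several times and handles synchronization.
    tAuto = gputimeit(f);

    % With tic/toc, synchronize before stopping the timer; otherwise toc
    % can return before the GPU has finished the computation.
    tic
    C = f();
    wait(gpu);
    tManual = toc;

    fprintf("gputimeit: %.4f s, tic/toc with wait: %.4f s\n",tAuto,tManual);
else
    disp("No supported GPU found; skipping the timing sketch.")
end
```

The two measurements should be of similar magnitude. If you remove the wait call before toc, the manual timing can look much faster than it really is.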

Code Profiling

Before trying to speed up training by improving your code, use code profiling to uncover the slowest parts of your code. Profiling is a way to measure the time it takes to run the code and identify which functions and lines of code are consuming the most time. You can profile your code interactively with the MATLAB Profiler or programmatically with the profile function.

Here, we have profiled the training in the example Train Network on Image and Feature Data by using the profile function.

profile on -historysize 9000000
net = trainnet(dsTrain,net,"crossentropy",options);
profile viewer

The profiling results are shown in the following figure. Note that this specific example trains a very small network and training is very fast. But we can still observe that a portion of the training time is taken by reading the training data from a datastore. When training much more complex networks with larger datasets, profiling can reveal more opportunities for code optimization. For example, you might observe that preprocessing the input data before training (rather than repeatedly preprocessing data at runtime) can save time, or that too much time is spent on validation.

Figure: Code profiler results for training a small neural network.
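If you prefer to inspect profiling results in code rather than in the viewer, you can query the collected data directly. The following is a small sketch using a placeholder workload in place of a training call; the workload is purely illustrative.

```matlab
profile clear
profile on

% Placeholder workload standing in for a call such as trainnet.
for k = 1:50
    M = magic(200);
    K = kron(M(1:20,1:20),eye(10));
    s = trapz(K,2);
end

profile off
p = profile("info");    % structure containing the collected statistics

% Sort the recorded functions by total time, slowest first.
[~,order] = sort([p.FunctionTable.TotalTime],"descend");

% Print up to five of the most time-consuming functions.
topN = min(5,numel(order));
for i = 1:topN
    entry = p.FunctionTable(order(i));
    fprintf("%-40s %8.4f s  (%d calls)\n", ...
        entry.FunctionName,entry.TotalTime,entry.NumCalls);
end
```

A listing like this is convenient for logging or for comparing runs before and after an optimization, while the Profiler viewer remains the better tool for line-by-line inspection.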

Monitoring Overhead

When you train a deep neural network, you can visualize the training progress. This is very helpful to assess the performance of your network and whether it meets your requirements. However, training visualizations add processing overhead to the training and increase training time. If you have completed your assessment, for example by plotting the training progress for a few epochs, consider turning off the visualizations and inspecting the plots after the training completion.

Figure: Training progress of a CNN for regression.

Another way to monitor the training progress is by using the verbose output of the trainnet function. Displaying the training progress on the command line has less negative impact on the training time than the visualization of the training progress. However, to decrease training time you can turn off the verbose output or decrease the frequency with which the progress is displayed.
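These monitoring choices map onto a few training options. The sketch below contrasts a short exploratory configuration with leaner ones for long runs; the solver, epoch counts, and frequencies are placeholder values, not recommendations.

```matlab
% Short exploratory run: full monitoring to check that training behaves.
optionsExplore = trainingOptions("adam", ...
    MaxEpochs=3, ...
    Plots="training-progress", ...
    Verbose=true, ...
    VerboseFrequency=50);

% Long run: no live plot and no command-line output.
optionsFast = trainingOptions("adam", ...
    MaxEpochs=30, ...
    Plots="none", ...
    Verbose=false);

% Middle ground: keep the command-line output but print it less often.
optionsSparse = trainingOptions("adam", ...
    MaxEpochs=30, ...
    Plots="none", ...
    Verbose=true, ...
    VerboseFrequency=500);
```

You would then pass one of these option sets to trainnet in place of your existing options, keeping the rest of the training call unchanged.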

Conclusion

In this blog post, we have provided a few tips on how to accelerate training time for deep neural networks. The Deep Learning Toolbox documentation provides many more resources. If you are not sure how to get started with training acceleration or need specific advice, comment below. Or leave a comment to share with the AI blog community your tips and tricks for accelerating deep learning training in MATLAB.