Hello, please help.

I'm building a chatbot with OpenAI and need to use streaming. The issue is that every time OpenAI responds with one more phrase, Go prints one more line. As a result, in my terminal I get this:

Stream response:

Good day

Good day!

Good day! How

Good day! How can

Good day! How can I

Good day! How can I help

Good day! How can I help you

Good day! How can I help you right now

Good day! How can I help you right now

Stream completed

This is my code:

// With streaming response (SSE)
func ChatWithBot() gin.HandlerFunc {
	return func(c *gin.Context) {
		var message MessageRequest

		// Get the message from the user
		err := c.BindJSON(&message)
		if err != nil {
			c.JSON(http.StatusBadRequest, gin.H{"error": err.Error()})
			return
		}
		userMessage := message.Message

		// Set up the model
		OpenAI_API_Key := os.Getenv("OPENAI_API_KEY")
		openAiClient := openai.NewClient(OpenAI_API_Key)
		ctx := context.Background()
		reqToOpenAI := openai.ChatCompletionRequest{
			Model:     openai.GPT3Dot5Turbo,
			MaxTokens: 8,
			Messages: []openai.ChatCompletionMessage{
				{
					Role:    openai.ChatMessageRoleUser,
					Content: userMessage,
				},
			},
			Stream: true,
		}

		stream, err := openAiClient.CreateChatCompletionStream(ctx, reqToOpenAI)
		if err != nil {
			fmt.Printf("Error creating completion")
			c.JSON(http.StatusInternalServerError, gin.H{"error": err.Error()})
			return
		}
		defer stream.Close()

		// w := c.Writer
		// w.Header().Set("Content-Type", "text/plain; charset=utf-8")
		// c.Header("Cache-Control", "no-cache")
		// c.Header("Connection", "keep-alive")

		fmt.Printf("Stream response: ")
		full := ""
		for {
			response, err := stream.Recv()
			if errors.Is(err, io.EOF) {
				fmt.Println("\nStream completed")
				break
			}
			if err != nil {
				fmt.Printf("\nStream error: %v\n", err)
				return
			}
			openAIReply := response.Choices[0].Delta.Content

			// Send each new reply as a separate SSE message
			// Remove any newline characters from the response
			openAIReply = strings.ReplaceAll(openAIReply, "\n", "")
			full += openAIReply
			fmt.Println(full)
			c.Writer.Write([]byte(full))
			c.Writer.(http.Flusher).Flush()
		}
	}
}

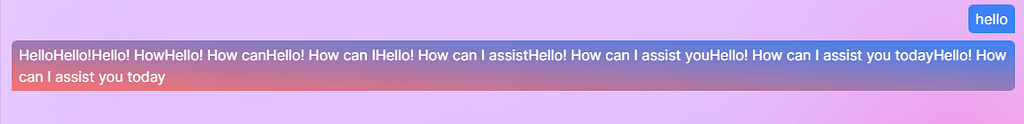

And in frontend i get this

For reference, this is the JS code I am trying to recreate:

async function main() {
  let full = "";
  const completion = await openai.chat.completions.create({
    messages: [{ role: "user", content: "Recipes for chicken fry" }],
    model: "gpt-3.5-turbo",
    stream: true,
  });
  for await (const part of completion) {
    // The final chunk may carry no content, so fall back to an empty string
    let text = part.choices[0].delta.content ?? "";
    full += text;
    console.clear();
    console.log(full);
  }
}
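The JS loop above can log the full text on every chunk because console.clear() wipes the previous output first. Plain fmt printing in Go does not clear anything, so printing the accumulated text with a newline produces one growing line per chunk. A rough sketch of the difference, using hypothetical chunks in place of real API deltas (printCumulative, printDeltas, and render are made-up helper names for illustration):

```go
package main

import (
	"fmt"
	"io"
	"os"
	"strings"
)

// printCumulative mimics fmt.Println(full) inside the loop: every chunk
// produces a new line containing everything received so far.
func printCumulative(w io.Writer, deltas []string) {
	full := ""
	for _, d := range deltas {
		full += d
		fmt.Fprintln(w, full)
	}
}

// printDeltas writes only the new text for each chunk, with no newline,
// so the output grows in place on a single line.
func printDeltas(w io.Writer, deltas []string) {
	for _, d := range deltas {
		fmt.Fprint(w, d)
	}
	fmt.Fprintln(w)
}

// render runs one of the printers against an in-memory buffer and
// returns what it wrote.
func render(printer func(io.Writer, []string), deltas []string) string {
	var sb strings.Builder
	printer(&sb, deltas)
	return sb.String()
}

func main() {
	// Hypothetical chunks standing in for streamed deltas.
	deltas := []string{"Good", " day", "!"}
	printCumulative(os.Stdout, deltas)
	printDeltas(os.Stdout, deltas)
}
```

With these three chunks, printCumulative writes three lines ("Good", "Good day", "Good day!"), while printDeltas writes the single line "Good day!".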

Thanks

After getting help from Drew and Tim (in Slack), I was finally able to solve the problem. Thanks.

This is the latest updated code:

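for {
	response, err := stream.Recv()
	if errors.Is(err, io.EOF) {
		fmt.Println("\nStream completed")
		return
	}
	if err != nil {
		fmt.Printf("\nStream error: %v\n", err)
		return
	}
	// Skip chunks that carry no choices to avoid indexing an empty slice
	if len(response.Choices) == 0 {
		continue
	}
	// Each chunk holds only the newly generated text (the delta)
	openAIReply := response.Choices[0].Delta.Content
	// Print without a format string so a "%" in the reply is not misread
	fmt.Print(openAIReply)
	// fmt.Fprint(openAIReply)
	// Send only the delta to the client and flush it immediately
	c.Writer.WriteString(openAIReply)
	c.Writer.Flush()
	// c.JSON(http.StatusOK, gin.H{"success": true, "message": openAIReply})
}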
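The idea behind the fix, writing only each new delta and flushing right away, can be tried without gin or an API key. This is a minimal sketch using only the standard library, assuming hypothetical deltas in place of the content of each streamed chunk. The names streamDeltas and collectBody are illustrative and not part of gin or go-openai.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// streamDeltas writes each delta exactly once and flushes after each write,
// so the client receives the text incrementally without repeated prefixes.
func streamDeltas(w http.ResponseWriter, deltas []string) error {
	flusher, ok := w.(http.Flusher)
	if !ok {
		return fmt.Errorf("response writer does not support flushing")
	}
	for _, d := range deltas {
		if _, err := io.WriteString(w, d); err != nil {
			return err
		}
		flusher.Flush()
	}
	return nil
}

// collectBody runs streamDeltas against an in-memory recorder and returns
// everything a client would have received.
func collectBody(deltas []string) string {
	rec := httptest.NewRecorder()
	if err := streamDeltas(rec, deltas); err != nil {
		return "error: " + err.Error()
	}
	return rec.Body.String()
}

func main() {
	// Hypothetical deltas standing in for the streamed chunk contents.
	deltas := []string{"Good", " day", "!", " How", " can", " I", " help"}
	fmt.Println(collectBody(deltas))
}
```

Because each delta is written once, the body the client sees is just the concatenation of the chunks. Writing the accumulated string on every iteration would instead repeat the earlier text each time.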