At this point, you may have heard about Explainable AI and want to explore XAI techniques within your work. But along the way you might also have wondered: Just how well do XAI techniques work? And what should you do if you find the results of XAI aren’t as compelling as you’d like? Below, we explore some of the questions surrounding XAI with a healthy dose of skepticism.

This is the second post in a 3-post series on Explainable AI (XAI). The first post highlighted examples and offered practical advice on how and when to use XAI techniques for computer vision tasks. In this post, we will expand the discussion by offering advice on some of the XAI limitations and challenges that you might find along the path of XAI adoption.

Are expectations too high?

XAI, like many subfields of AI, can be subject to some of the hype, fallacies, and misplaced hopes associated with AI at large, including the potential for unrealistic expectations.

Terms such as explainable and explanation risk falling under the fallacy of matching AI developments with human abilities (the 2021 paper Why AI is Harder Than We Think brilliantly describes 4 related fallacies in AI assumptions). This fallacy leads to the unrealistic expectation that we are approaching a point in time where AI solutions will not only achieve great feats and eventually surpass human intelligence but, on top of that, will be able to explain how and why they did what they did, thereby increasing the level of trust in AI decisions. Once we become aware of this fallacy, it is legitimate to ask ourselves: How much can we realistically expect from XAI?

In this post we will discuss whether our expectations for XAI (and the value XAI techniques might add to our work) are too high and focus on answering three main questions:

- Can XAI become a proxy for trust(worthiness)?

- What limitations of XAI techniques should we be aware of?

- Can consistency across XAI techniques improve user experience?

1. Can XAI become a proxy for trust(worthiness)?

It is natural to associate an AI solution’s “ability to explain itself” with the degree of trust humans place in that solution. However, should explainability translate to trustworthiness?

XAI, despite its usefulness, falls short of ensuring trust in AI for at least these three reasons:

A. The premise that AI algorithms must be able to explain their decisions to humans can be problematic. Humans cannot always explain their decision-making process, and even when they do, their explanations might not be reliable or consistent.

B. Having complete trust in AI involves trusting not only the model, but also the training data, the team that created the AI model, and the entire software ecosystem. To ensure parity between models and results, best practices and conventions for data standards, workflow standards, and technical subject matter expertise must be followed.

C. Trust is something that develops over time, but in an age where early adopters want models as fast as they can be produced, models are sometimes released to the public early with the expectation that they will be continuously updated and improved upon in later releases, which might not happen.

In many applications, adopting AI models that stand the test of time by showing consistently reliable performance and correct results might be good enough, even without any XAI capabilities.

2. What limitations of XAI techniques should we be aware of?

In the fields of image analysis and computer vision, a common interface for showing the results of XAI techniques consists of overlaying the “explanation” (usually in the form of a heatmap or saliency map) on top of the image. This can be helpful in determining which areas of the image the model deemed to be most relevant in its decision-making process. It can also assist in diagnosing potential blunders that the deep learning model might be making, where the results are seemingly correct but in reality the model was looking in the wrong place. A classic example is the husky vs. wolf image classification algorithm, which in fact was a snow detector (Figure 1).

Figure 1: Example of misclassification in a “husky vs. wolf” image classifier due to a spurious correlation between images of wolves and the presence of snow. The image on the right, which shows the result of the LIME post-hoc XAI technique, captures the classifier blunder. Source: https://arxiv.org/pdf/1602.04938.pdf
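To make the “snow detector” idea concrete, here is a minimal sketch of occlusion sensitivity, one of the post-hoc techniques discussed in this post (Figure 1 itself was produced with LIME, not with this method). Everything in it is an illustrative assumption: `toy_wolf_score` is a hypothetical stand-in for a classifier that only looks at the brightness of the bottom row of pixels, and the 3×3 “photo” is made up. The heatmap records how much the score drops when each pixel is blanked out.

```python
def toy_wolf_score(image):
    """Hypothetical stand-in for a classifier: the 'wolf' score is simply
    the mean brightness of the bottom row (a crude 'snow detector')."""
    bottom = image[-1]
    return sum(bottom) / len(bottom)


def occlusion_sensitivity(image, score_fn, patch=1, fill=0.0):
    """Return a heatmap in which each cell holds the drop in score observed
    when the patch covering that cell is replaced by `fill`."""
    rows, cols = len(image), len(image[0])
    base = score_fn(image)
    heat = [[0.0] * cols for _ in range(rows)]
    for r0 in range(0, rows, patch):
        for c0 in range(0, cols, patch):
            occluded = [row[:] for row in image]  # copy, then blank one patch
            cells = [(r, c)
                     for r in range(r0, min(r0 + patch, rows))
                     for c in range(c0, min(c0 + patch, cols))]
            for r, c in cells:
                occluded[r][c] = fill
            drop = base - score_fn(occluded)
            for r, c in cells:
                heat[r][c] = drop
    return heat


# Made-up 3x3 grayscale "photo": animal in the centre, bright snow below.
image = [
    [0.1, 0.1, 0.1],
    [0.1, 0.5, 0.1],
    [0.9, 0.9, 0.9],
]
heatmap = occlusion_sensitivity(image, toy_wolf_score)
```

In this toy setup the only non-zero entries of `heatmap` are in the bottom (snow) row, while the centre pixel representing the animal contributes nothing, which is the kind of blunder an overlaid heatmap can expose.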

The potential usefulness of post-hoc XAI results has led to a growing adoption of techniques such as imageLIME, occlusion sensitivity, and gradCAM for computer vision tasks. However, these XAI techniques sometimes fall short of delivering the desired explanation due to some well-known limitations. Below are 3 examples:

A. XAI techniques can sometimes use similar explanations for correct and incorrect decisions.

For example, in the previous post in this series, we showed that the heatmaps produced by the gradCAM function provided similarly convincing explanations (in this case, a focus on the head area of the dog) both when the prediction is correct (Figure 2, top) and when it is incorrect (Figure 2, bottom: a Labrador retriever was mistakenly identified as a beagle).

B. Even in cases where XAI techniques show that a model is not looking in the right place, that doesn’t necessarily mean that it is easy to know how to fix the underlying problem.

In some easier cases, such as the husky vs. wolf classification mentioned earlier, a quick visual inspection of model errors could have helped identify the spurious correlation between “presence of snow” and “images of wolves.” There is no guarantee that the same process would work for other (larger or more complex) tasks.

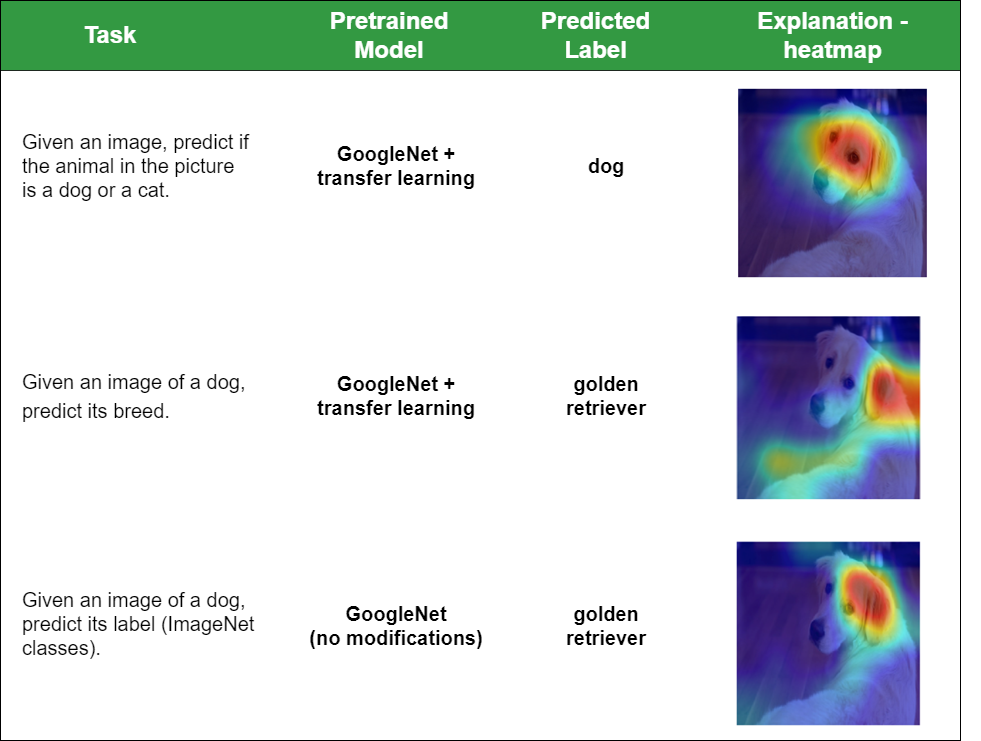

C. Results are model- and task-dependent.

In our previous post in this series, we also showed that the heatmaps produced by the gradCAM function in MATLAB provided different visual explanations for the same image (Figure 3) depending on the pretrained model and task. Figure 4 shows those two examples and adds a third example, in which the same network (GoogLeNet) was used without modification. A quick visual inspection of Figure 4 is enough to spot significant differences among the three heatmaps.

Figure 3: Test image for different image classification tasks and models (shown in Figure 4).

Figure 4: Using gradCAM on the same test image (Figure 3), but for different image classification tasks and models.

3. Can consistency across XAI techniques improve user experience?

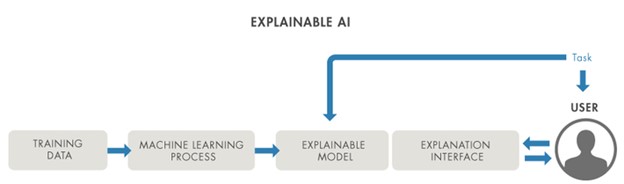

In order to understand the why and how behind an AI model’s decisions and get better insight into its successes and failures, an explainable model should be capable of explaining itself to a human user through some kind of explanation interface (Figure 5). Ideally this interface should be rich, interactive, intuitive, and appropriate for the user and task.

Figure 5: Usability (UI/UX) aspects of XAI: an explainable model requires a suitable interface.

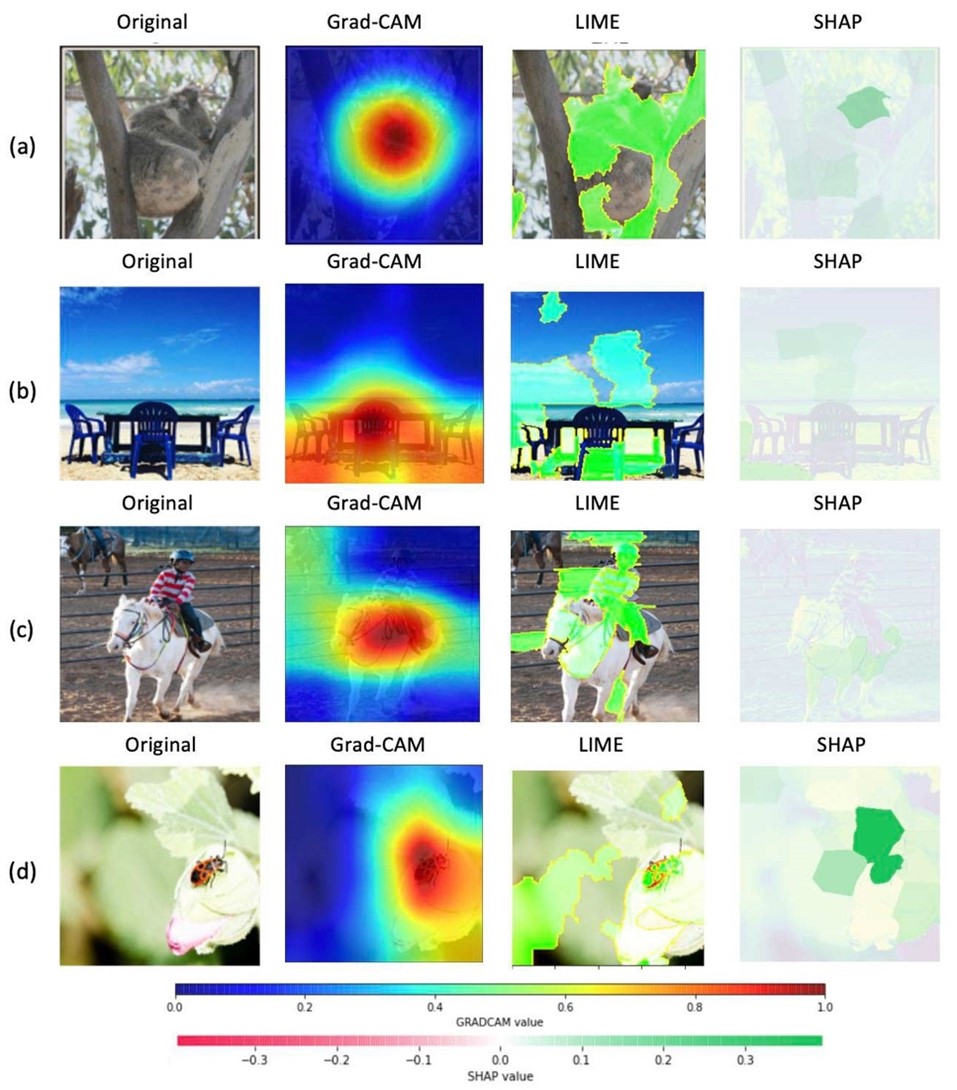

In visual AI tasks, comparing the results produced by two or more methods can be problematic, since the most commonly used post-hoc XAI methods use significantly different explanation interfaces (that is, visualization schemes) in their implementation (Figure 6):

- CAM (Class Activation Maps), including Grad-CAM, and occlusion sensitivity use a heatmap to correlate hot colors with salient/relevant portions of the image.

- LIME (Local Interpretable Model-Agnostic Explanations) generates superpixels, which are typically shown as highlighted pixels outlined in different pseudo-colors.

- SHAP (SHapley Additive exPlanations) values are used to divide pixels among those that increase or decrease the probability of a class being predicted.
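The difference between these display conventions can be sketched with a few lines of Python. The example below does not compute Grad-CAM, LIME, or SHAP values; it takes one made-up grid of relevance scores (`scores`, a hypothetical input) and renders it in three ways that loosely mimic the three interfaces listed above: a rescaled heatmap, a mask of the top-scoring regions, and a split into cells that push the prediction up or down. All function names are illustrative assumptions.

```python
def as_heatmap(scores):
    """Heatmap-style view: rescale scores to [0, 1] (hotter = more relevant)."""
    flat = [v for row in scores for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero on a constant grid
    return [[(v - lo) / span for v in row] for row in scores]


def as_region_mask(scores, keep=2):
    """Region-style view: highlight only the `keep` highest-scoring cells
    (ties at the threshold are all highlighted)."""
    flat = sorted((v for row in scores for v in row), reverse=True)
    threshold = flat[keep - 1]
    return [[v >= threshold for v in row] for row in scores]


def as_signed_split(scores):
    """Signed view: cells that raise vs. lower the class probability."""
    cells = [(r, c, v) for r, row in enumerate(scores) for c, v in enumerate(row)]
    up = [(r, c) for r, c, v in cells if v > 0]
    down = [(r, c) for r, c, v in cells if v < 0]
    return up, down


# Made-up 2x2 grid of relevance scores for a single image.
scores = [
    [-0.5, 0.0],
    [0.25, 1.5],
]
heat = as_heatmap(scores)
mask = as_region_mask(scores, keep=2)
up, down = as_signed_split(scores)
```

Note how the same neutral cell (score 0.0) appears mildly warm in the heatmap view, is not highlighted in the region view, and belongs to neither group in the signed view, which hints at why side-by-side comparisons across methods are hard to read.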

Figure 6: Three different XAI methods (Grad-CAM, LIME, and SHAP) for four different images. Notice how the structure (including border definition), choice of colors (and their meaning), ranges of values and, consequently, meaning of highlighted areas vary significantly between XAI methods. [Source: https://arxiv.org/abs/2006.11371]

Takeaway

In this blog post, we offered words of caution and discussed some limitations of existing XAI methods. Despite the downsides, there are still many reasons to be optimistic about the potential of XAI, as we will share in the next (and final) post in this series.