From chess to large language models, reinforcement learning is a critical artificial intelligence paradigm aimed at teaching computers through exploration and ‘play’. Let’s take a look at how it works and how you can get started.

It’s the early 90s, and as a child of that time, I’m wandering my sacred place, my temple: the local video store.

Long before the world of streaming and internet-delivered media content, the video store was the launchpad for a child’s imagination. Rows and rows of pure magic encased in chemically treated plastic boxes that together gave rise to a smell that was unique to your local video store.

The smell of action, adventure, and excitement, at the low low price of $2 a night, or $3 a week (new releases $5 overnight!).

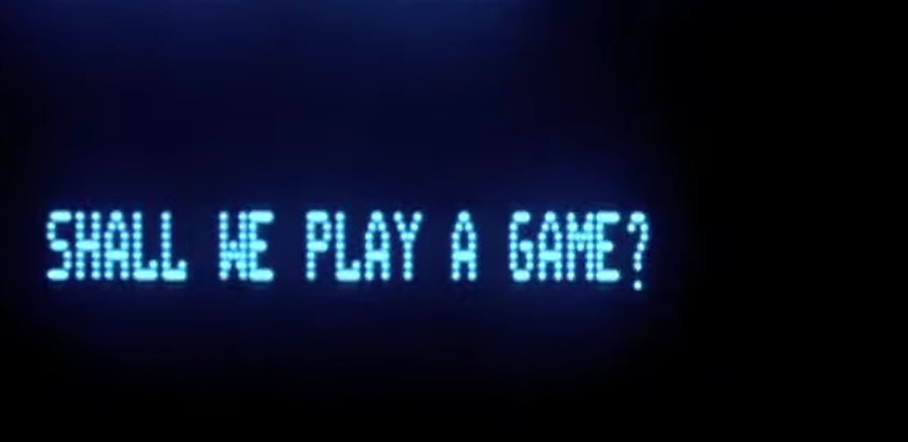

It was within that hallowed place that I first came across the 1983 classic movie ‘WarGames’. It stars Matthew Broderick as a tenacious, tech-savvy 80s teenager who hacks into equally 80s computer systems, only to stumble upon the WOPR (a.k.a. ‘Joshua’), a computer that could think, reason, talk and, most importantly, play games like a person.

If you haven’t seen the film I’ll leave the rest for you to discover, but suffice to say the fun begins when you realise that the WOPR not only plays games like chess and poker, but also global thermonuclear war.

The movie captured my imagination: a computer that could think, that learned by playing games and improving itself! I didn’t realise until many years later that ‘WarGames’ is a gentle, perhaps unintended, introduction to the concept of reinforcement learning (RL). In the following piece, we’re going to walk through the basic theory of reinforcement learning, run some of our own experiments using Python, and close out with some suggestions on how to enter the fun (and addictive) world of competitive reinforcement learning!

What is Reinforcement Learning?

“Games are the most elevated form of investigation.” - Albert Einstein

For those who have some familiarity with other forms of machine learning like linear regression or binary classification, the way you go about developing an ML model is fairly standard.

You start with a bunch of labelled data and a hypothesis, feed that data into your algorithm, train it, and then run inference on data that the model hasn’t previously seen.

Reinforcement learning, however, is an entirely different proposition.

Imagine that you’ve been dropped into a casino, sat in front of a series of poker machines, and told you must win as much money as possible over the next couple of hours.

The thing is, you don’t know how much any of these machines pays out, only that they all pay differently and within a certain range. So one machine might pay between $1 and $3 per go, another might pay between $2 and $20, and so on.

So what will you do to maximise your profits? Well, you’ll probably start by playing the machines randomly, just to suss out what each one’s pay range is. This will work well enough at first, but it’s probably not a viable long-term strategy. Instead, you’ll probably play the machines for a while, start to get a sense of which ones are paying out more, then gradually converge on playing only the machines that pay out the most, and stick with those until your time is up.

Congratulations, you’ve just performed the human equivalent of reinforcement learning! So let’s try to map our fictitious casino adventure onto the facets and terminology of RL.

Below is what can be considered the standard diagram of how this type of machine learning operates. It does a good job of showing the repetitive or ‘reinforcing’ nature of the paradigm.

Don’t worry too much about the symbols just yet; the diagram above boils down to one important point: reinforcement learning is about an agent trying to maximise its rewards by taking actions in its environment.

Now we’ll overlay our fantasy casino scenario on top of the same diagram to give it some context.

Let’s break this down.

The agent is the reinforcement learning model or process itself. It’s the thing that will take actions in order to maximise the reward or goal that you’ve set. In our casino scenario, the agent can be thought of as, well, you!

You are the agent.

It’s the agent’s job to find ways to do better at whatever task it’s being asked to do: play poker, beat someone at chess or Go, or any number of reinforcement learning activities!

The action(s) are things you can do in order to achieve your goal. In our case, the actions are playing the various slot machines in front of us, but in the case of a maze, the actions would be things like moving forward, left, right or backwards, or even doing nothing at all.

The environment in our scenario is the slot machines themselves, but this could just as easily be a maze, a chessboard or the road itself in the case of autonomous vehicles! The environment is the space within which actions can be taken.

The reward is an easy one in our case: it’s all the juicy profits from the slot machines! The agent (us) has the goal of maximising the reward (money) that it can get from the slot machines. This is a very simplistic example of a reward. When you look into more complex types of reinforcement learning, calculating rewards can become more involved, depending on all kinds of conditions and values in the current, historic and even future state.

And on that: state is almost the last thing in our diagram. State isn’t super important to our slot machine scenario, because it is very simple and there’s not a lot to keep track of, but if we were looking at a more complex RL environment, like, say, a chess board, state would be a key feature. The state is like a snapshot of where everything is at that moment. So when using reinforcement learning to play a game of chess, the state is the position of all the pieces on the board at each turn.

For autonomous driving, state might consist of the car’s current location, speed, orientation, and information about nearby objects and traffic conditions.

Knowing the state helps our RL algorithm (and us, as real agents) make decisions that better our chances of maximising our rewards over time.

Reinforcement learning has even become a critical factor in the fine-tuning of large language models (LLMs), with a mechanism known as reinforcement learning from human feedback (RLHF) putting humans in the loop to act as the reward function itself, manually highlighting more suitable responses by the model as part of the improvement process.

And that’s it! We’ve covered our ‘lap’ around the RL diagram. Except, we didn’t mention those little t’s and t+1’s, did we? Well, t just denotes time. Remember, the process of reinforcement learning isn’t a linear sequence like other machine learning. Reinforcement learning doesn’t start out with a stack of labelled data for training. Instead, the agent must traverse the environment, making choices and learning with each iteration to (hopefully) make better choices each time.

So where we have $R_{t+1}$, we’re just denoting the immediate reward (R) that the agent receives after acting at time step t. Similarly, $S_{t+1}$ denotes the state that the environment transitions to after the agent takes an action at that time step.
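To make the loop concrete, here is a minimal sketch of one agent and one environment taking turns. Everything in it is an assumption made for illustration: the `Corridor` environment, the `always_right` policy and the `run_episode` helper are hypothetical names, not part of any library, and the "agent" here follows a fixed rule rather than learning anything. The point is only to show where the state, action and next reward appear at each time step.

```python
class Corridor:
    """Hypothetical toy environment: walk from cell 0 to the goal cell."""

    def __init__(self, length=5):
        self.goal = length - 1
        self.state = 0

    def step(self, action):
        # Apply the action (-1 = left, +1 = right), staying inside the corridor.
        self.state = min(max(self.state + action, 0), self.goal)
        done = self.state == self.goal
        reward = 1 if done else 0  # reward only arrives on reaching the goal
        return self.state, reward, done


def always_right(state):
    """Hypothetical fixed policy: ignore the state and always move right."""
    return +1


def run_episode(env, policy, max_steps=100):
    trajectory = []  # one (S_t, A_t, R_t+1, S_t+1) tuple per time step
    total_reward = 0
    state = env.state
    for t in range(max_steps):
        action = policy(state)                       # agent picks A_t given S_t
        next_state, reward, done = env.step(action)  # environment answers with S_t+1 and R_t+1
        trajectory.append((state, action, reward, next_state))
        total_reward += reward
        state = next_state
        if done:
            break
    return trajectory, total_reward


trajectory, total_reward = run_episode(Corridor(), always_right)
for s, a, r, s_next in trajectory:
    print(f"S_t={s}  A_t={a:+d}  R_t+1={r}  S_t+1={s_next}")
print("total reward:", total_reward)
```

A learning agent would replace `always_right` with something that updates itself from the rewards it sees, which is what the bandit experiments later in this piece do.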

Phew! Okay, so now we’ve got some of the theory out of the way. Are you still there? Here are the important things to remember.

- Reinforcement learning is about agents that take actions in environments to maximise rewards.

- Reinforcement learning doesn’t start with a lot of labelled data to train from like other ML methods. Instead, information is learned through exploration of the environment.

- Reinforcement learning can be used to become great at games like chess and Go, as well as to solve real-world problems like controlling autonomous vehicles or managing supply chain replenishment.

Now that we’re all schooled up on the basics of theory, how about we dig into a little practical code? Grab your Jupyter notebook and Python, and let’s get to creating a reinforcement learning experiment!

The N-Armed Bandit Problem

It was no accident that I picked the concept of slot machines with varying payouts to illustrate reinforcement learning. The ‘N-armed bandit’ problem (so-called because old-school slot machines had an arm-like lever you could pull, and they were bandits because they took all your money) is actually a classic problem in the world of reinforcement learning.

It’s essentially a carbon copy of our earlier casino story: a bunch of slot machines with variable payouts and an agent that has no visibility of those payouts at the start of the process. The aim is to maximise payouts over a series of iterations, and this is exactly the problem we’re going to set up in our Python environment. So, let’s go!

As per usual when it comes to creating a Jupyter notebook, you’re really spoilt for choice. You can set one up locally in VS Code, or, as I’ll do here, you can create one online using a free service like Google Colab or SageMaker Studio Lab.

I’m going to use Google Colab for mine. Just head to https://colab.research.google.com/, sign in and start a basic CPU notebook.

To set up our experiment, we’re going to need NumPy for the random numbers and one plotting library, matplotlib, so that we can visually measure the success of our tinkering. Your environment may already have these installed, but just in case:

    !pip install numpy matplotlib

Now in order to work on our n-armed bandit problem we’re going to need some bandits (slot machines). In reinforcement learning terms, what we’re doing here is defining our environment. So let’s set up some ‘bandits’ and define their values randomly (but we won’t tell our agent anything about those values, so they’ll be “going in blind”).

    import numpy as np
    import matplotlib.pyplot as plt

    num_bandits = 5  # number of slot machines
    t = 2000         # number of turns (time steps)

    # True mean payout of each bandit, hidden from the agent
    q_true = np.random.normal(0, 1, num_bandits)

    def get_reward(mean):
        # Noisy payout centred on the bandit's true mean
        return np.random.normal(mean, 1)

    # Plot the true mean payout of every bandit
    plt.figure(figsize=(8, 6))
    plt.bar(range(num_bandits), q_true, align='center', alpha=0.7)
    plt.xlabel('Bandit')
    plt.ylabel('True Mean Value')
    plt.title('True Mean Values for Bandits')
    plt.xticks(range(num_bandits))
    plt.show()

We’ve created 5 bandits, and a ‘t’ of 2000 (essentially we’re going to have 2000 turns at our slot machines to try to maximise the average payout over time).

We’ve also set up a q_true array, which gives each machine a random true mean payout drawn from a normal distribution with mean 0 and standard deviation 1. Finally, our get_reward function provides the reward for each ‘go’ at a machine, adding random noise around that machine’s mean to make our process more interesting. Let’s plot out the true mean reward for each of our 5 slot machines:

So we can see some of our bandits actually pay out negative rewards, while #3 pays out a mean of 1.5. Okay, so to recap, we’ve set up our environment, our rewards and our reward function for accessing them. We’ve also set up our time steps (t = 2000), so we’re nearly ready to go.
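Before taking any actions, it can help to see why a single pull tells you so little about a machine. The sketch below is an illustration under stated assumptions: it uses Python’s standard `random` module instead of NumPy so it runs anywhere, its `get_reward` takes an extra `rng` argument that the notebook version does not have, and the bandit with a true mean of 1.5 is made up to resemble the best machine described above.

```python
import random
import statistics


def get_reward(mean, rng):
    # Same idea as the notebook's get_reward: a noisy payout centred on `mean`
    return rng.gauss(mean, 1)


rng = random.Random(0)  # fixed seed so the run is repeatable
true_mean = 1.5         # hypothetical bandit, similar to the best one described above
samples = [get_reward(true_mean, rng) for _ in range(20000)]

negative_share = sum(x < 0 for x in samples) / len(samples)
print("first five payouts:", [round(x, 2) for x in samples[:5]])
print("sample mean:", round(statistics.mean(samples), 3))
print("share of negative payouts:", round(negative_share, 3))
```

Individual payouts jump around, and even a good machine occasionally pays out a negative amount, but the average over many pulls settles close to the true mean. That is why the agent has to keep a running estimate per machine rather than trust any one result.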

Random selection

Now it’s time to take some actions! First up, to highlight the difference between using basic reinforcement versus a purely random approach, let’s define a function for simply playing bandits at random. This will be our baseline to see how our reinforcement learning methods stack up.

    # Use a completely random selection process as a baseline
    def random_selection():
        arm_count = {i: 0 for i in range(num_bandits)}  # pulls per bandit
        q_t = [0] * num_bandits                         # estimated mean reward per bandit
        step_vs_reward = {}                             # running average reward at each step
        average_reward = 0                              # cumulative reward so far

        for step in range(1, t + 1):
            # pick an action randomly
            picked = np.random.choice(num_bandits)
            arm_count[picked] += 1
            reward = get_reward(q_true[picked])
            average_reward += reward

            # update average reward for that action
            q_t[picked] = q_t[picked] + (reward - q_t[picked]) / arm_count[picked]
            step_vs_reward[step] = average_reward / step

        return step_vs_reward

Above, we’ve essentially created that random method. At each time step ‘t’, the method will choose a bandit at random, collect the reward, update the average and go around again! Let’s give it a go and plot the results.

    random_rewards = random_selection()

    plt.figure(figsize=(12, 8))

    # Plot the reward curves
    plt.subplot(2, 1, 1)
    plt.plot(list(random_rewards.keys()), list(random_rewards.values()), label='Random')
    plt.xlabel('Steps')
    plt.ylabel('Average Reward')
    plt.title('Random Bandit Strategies')
    plt.legend()
    plt.tight_layout()
    plt.show()

Okay, so our completely random process didn’t really seem to get a great score over 2000 turns, with an average reward that’s just barely higher than 0. What if we re-run the experiment, but this time put it up against a simple reinforcement process known as ‘greedy’ selection?

Greedy method

Greedy selection is a process in which our agent always chooses the bandit with the highest estimated payout. Each turn, the agent retains a ‘memory’ of the average reward it has seen from each bandit so far, and gets more and more ‘stuck’ to the highest-paying bandit that it’s aware of, the idea being that this will maximise the rewards over time, beating out our ‘let’s pull everything randomly’ mechanism.

It’s basically an all-out ‘exploitation’ strategy: exploit the known environment for maximum payout. It’s important to remember that fact, as we’ll explain in a little while why this might not be the best overall strategy.

For the equation lovers out there, the heart of our greedy method will use the following:

$$Q(a) \leftarrow Q(a) + \frac{1}{N(a)}\bigl(R - Q(a)\bigr)$$

- Q(a) is the estimated value of action a.

- N(a) represents the number of times action a has been selected.

- R is the reward received after our action.
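As a quick sanity check on this update rule, the sketch below nudges an estimate Q towards each new reward R by (R - Q) / N and compares the result with the plain average of all the rewards. The reward values are made up for illustration, and the standard `random` module stands in for NumPy so the snippet runs on its own.

```python
import random

rng = random.Random(42)
rewards = [rng.gauss(1.0, 1.0) for _ in range(500)]  # made-up payouts from a single bandit

q = 0.0  # Q(a): running estimate of the bandit's value
n = 0    # N(a): number of times the bandit has been pulled
for r in rewards:
    n += 1
    q = q + (r - q) / n  # move the estimate a fraction 1/N towards the new reward

batch_mean = sum(rewards) / len(rewards)
print(f"incremental estimate: {q:.6f}")
print(f"batch mean:           {batch_mean:.6f}")
```

The two numbers agree up to floating-point rounding, which shows the rule is just a running average that never needs to store past rewards.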

It’s a basic mechanism for learning from the previous turns, combined with the money you make each turn, to form better and better estimates of future rewards to help with the selection. Here’s our code:

    # define a greedy process
    def greedy():
        arm_count = {i: 0 for i in range(num_bandits)}  # pulls per bandit
        q_t = [0] * num_bandits                         # estimated mean reward per bandit
        step_vs_reward = {}                             # running average reward at each step
        average_reward = 0                              # cumulative reward so far

        for step in range(1, t + 1):
            # pick an action greedily: the bandit with the highest current estimate
            picked = np.argmax(q_t)
            arm_count[picked] += 1
            reward = get_reward(q_true[picked])
            average_reward += reward

            # update average reward for that action
            q_t[picked] = q_t[picked] + (reward - q_t[picked]) / arm_count[picked]
            step_vs_reward[step] = average_reward / step

        return step_vs_reward

The line picked = np.argmax(q_t) is where the method will attempt to select what it estimates to be the highest-paying bandit each turn. Let’s put all this together, generate a new set of bandits, and compare our methods!

    num_bandits = 5
    t = 2000

    # Generate a fresh set of bandits
    q_true = np.random.normal(0, 1, num_bandits)

    plt.figure(figsize=(8, 6))
    plt.bar(range(num_bandits), q_true, align='center', alpha=0.7)
    plt.xlabel('Bandit')
    plt.ylabel('True Mean Value')
    plt.title('True Mean Values for Bandits')
    plt.xticks(range(num_bandits))
    plt.show()

    greedy_rewards = greedy()
    random_rewards = random_selection()

    plt.figure(figsize=(12, 8))

    # Plot the reward curves
    plt.subplot(2, 1, 1)
    plt.plot(list(greedy_rewards.keys()), list(greedy_rewards.values()), label='Greedy')
    plt.plot(list(random_rewards.keys()), list(random_rewards.values()), label='Random')
    plt.xlabel('Steps')
    plt.ylabel('Average Reward')
    plt.title('Greedy vs Random Bandit Strategies')
    plt.legend()
    plt.tight_layout()
    plt.show()

The results are in, and it looks like the greedy method is gaining a far better average reward than random selection. Random selection is giving us almost no average reward after some initial randomness, while the greedy mechanism has averaged a much higher payout because it’s been able to converge on a high-paying bandit.

Now this is good progress, but there’s a flaw in our greedy-only approach. You see, the way the algorithm currently works, once it finds a high-paying bandit, it’s very likely to converge on it and stick to it like glue. This means that while it’ll outperform random selection, it won’t balance exploration (seeking out the chance of an even higher payout) against exploitation (maximising payout from what it already knows about the environment).
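One hedged way to see this stickiness in numbers is to count how often a purely greedy agent pulls each bandit. The sketch below is a standalone illustration, not the notebook’s code: `greedy_pull_counts` is a hypothetical helper, the payout means are invented, and the standard `random` module replaces NumPy. Ties are broken in favour of the lowest index, which is also how `np.argmax` behaves.

```python
import random


def greedy_pull_counts(true_means, steps, rng):
    n = len(true_means)
    counts = [0] * n   # how many times each bandit was pulled
    q = [0.0] * n      # estimated mean reward per bandit, all starting at 0
    for _ in range(steps):
        picked = max(range(n), key=lambda a: q[a])  # first index wins ties
        counts[picked] += 1
        reward = rng.gauss(true_means[picked], 1)
        q[picked] += (reward - q[picked]) / counts[picked]
    return counts, q


rng = random.Random(7)
true_means = [0.3, 1.5, -0.4, 0.9]  # hypothetical payouts; bandit 1 is the best
counts, estimates = greedy_pull_counts(true_means, 2000, rng)

print("pulls per bandit:", counts)
print("estimates:       ", [round(x, 2) for x in estimates])
```

Any bandit that was never pulled still has an estimate of exactly 0, so as long as the current favourite looks better than 0 the greedy agent has no reason to ever try it, even if it is the best machine in the room.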

It’s easy to highlight this. Look at our re-run of the experiment; this time we’ve increased the number of bandits to eight:

Our greedy method still outperforms randomness, but it seems to have settled on an average payout of about 0.6, even though we know that some of our bandits pay out 1.5 or higher per turn. So how can we improve our reinforcement learning? By introducing a little more chance that the agent will sometimes choose to explore its environment instead of always exploiting! To do that, we’ll introduce a third method: a greedy epsilon driven agent.

Greedy Epsilon

“Not all those who wander are lost.” - J.R.R. Tolkien

All this really means is that our third method uses a small positive number, our epsilon value, to determine whether the agent exploits its currently known environment for the maximum reward, or chooses to explore the environment for a potentially better maximum reward!

Let’s create a new method to encapsulate this change:

    def epsilon_greedy():
        arm_count = {i: 0 for i in range(num_bandits)}  # pulls per bandit
        q_t = [0] * num_bandits                         # estimated mean reward per bandit
        step_vs_reward = {}                             # running average reward at each step
        average_reward = 0                              # cumulative reward so far

        for step in range(1, t + 1):
            # Choose a random action with probability epsilon
            if np.random.rand() < epsilon:
                picked = np.random.choice(num_bandits)  # explore
            else:
                picked = np.argmax(q_t)                 # exploit

            arm_count[picked] += 1
            reward = get_reward(q_true[picked])
            average_reward += reward

            # update average reward for that action
            q_t[picked] = q_t[picked] + (reward - q_t[picked]) / arm_count[picked]
            step_vs_reward[step] = average_reward / step

        return step_vs_reward

Let’s also add an epsilon value where we’ve defined our bandits and turns:

    num_bandits = 10
    t = 2000
    epsilon = 0.1  # Lower value is less exploration, higher value is more exploration

Our epsilon is a value between 0 and 1. Each turn, our epsilon_greedy function will generate a random number between 0 and 1 and compare it to our epsilon. If the epsilon is higher, we’ll pick a random bandit (explore) rather than go with our greedy method. If it’s lower, we’ll just maximise rewards with what we already know. Let’s run the entire thing again, with all 3 strategies run against each other:
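A minimal, self-contained sketch of such a three-way comparison is shown below. It is an illustration under stated assumptions rather than the notebook’s exact code: `run_bandit` is a hypothetical helper that folds all three strategies into one function, on the observation that an epsilon of 1.0 behaves like random selection and an epsilon of 0.0 behaves like pure greedy. It uses the standard `random` module instead of NumPy and prints final averages instead of plotting. The `noise_sd` parameter is an extra knob added here so the payout noise can be switched off.

```python
import random


def run_bandit(true_means, steps, epsilon, rng, noise_sd=1.0):
    """Return the running average reward after each step.

    epsilon=1.0 behaves like random selection, epsilon=0.0 like pure greedy.
    """
    n = len(true_means)
    counts = [0] * n   # pulls per bandit
    q = [0.0] * n      # estimated mean reward per bandit
    total = 0.0
    history = []
    for step in range(1, steps + 1):
        if rng.random() < epsilon:
            picked = rng.randrange(n)                   # explore
        else:
            picked = max(range(n), key=lambda a: q[a])  # exploit (first index wins ties)
        counts[picked] += 1
        reward = rng.gauss(true_means[picked], noise_sd)
        total += reward
        q[picked] += (reward - q[picked]) / counts[picked]
        history.append(total / step)
    return history


rng = random.Random(1)
true_means = [rng.gauss(0, 1) for _ in range(10)]  # 10 hypothetical bandits
for name, eps in [("Random", 1.0), ("Greedy", 0.0), ("Epsilon greedy", 0.1)]:
    history = run_bandit(true_means, 2000, eps, rng)
    print(f"{name:15s} final average reward: {history[-1]:.3f}")
```

Each `history` list can be passed straight to `plt.plot` if you want the same kind of reward curves as in the earlier plots.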

Now things have gotten super interesting! Our random method is still the worst performing of the lot, but early on we can see that our greedy method was leading the way! However, at around the 200 step mark, it looks like our choice to sometimes explore the environment has actually paid off. Over the long run, our epsilon greedy method has come out ahead and produced a higher average reward.

Interestingly, if you repeat the experiment over and over, there are times when greedy will beat greedy epsilon, owing to the inherent risk and randomness in choosing to explore. Reducing the number of bandits also seems to favour the greedy method, as it’s quicker and easier to converge on the highest-paying bandit.

Try repeating the experiment with only 3 bandits and see what happens! Or try increasing the epsilon value to encourage your agent to do more exploration.
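Because a single run is so noisy, one way to approach these follow-up experiments is to repeat each setting many times and average the results. The sketch below does that for a handful of epsilon values on 3 bandits. It is a starting point under stated assumptions: `final_average` and `sweep` are hypothetical helpers, the epsilon values and the 50 repetitions are arbitrary choices, and the standard `random` module is used in place of NumPy.

```python
import random


def final_average(true_means, steps, epsilon, rng):
    """Average reward per step for one epsilon-greedy run."""
    n = len(true_means)
    counts, q, total = [0] * n, [0.0] * n, 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            picked = rng.randrange(n)                   # explore
        else:
            picked = max(range(n), key=lambda a: q[a])  # exploit
        counts[picked] += 1
        reward = rng.gauss(true_means[picked], 1)
        total += reward
        q[picked] += (reward - q[picked]) / counts[picked]
    return total / steps


def sweep(epsilons, num_bandits=3, steps=2000, runs=50, seed=0):
    """Average each epsilon's result over several freshly generated sets of bandits."""
    rng = random.Random(seed)
    totals = {eps: 0.0 for eps in epsilons}
    for _ in range(runs):
        true_means = [rng.gauss(0, 1) for _ in range(num_bandits)]  # new casino each run
        for eps in epsilons:
            totals[eps] += final_average(true_means, steps, eps, rng)
    return {eps: totals[eps] / runs for eps in epsilons}


results = sweep([0.0, 0.01, 0.1, 0.3, 1.0])
for eps, avg in results.items():
    print(f"epsilon={eps:<5} mean final average reward: {avg:.3f}")
```

Changing `num_bandits` or the list of epsilon values lets you test the hunches above about when pure greedy holds its own and when extra exploration pays off.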