Recap

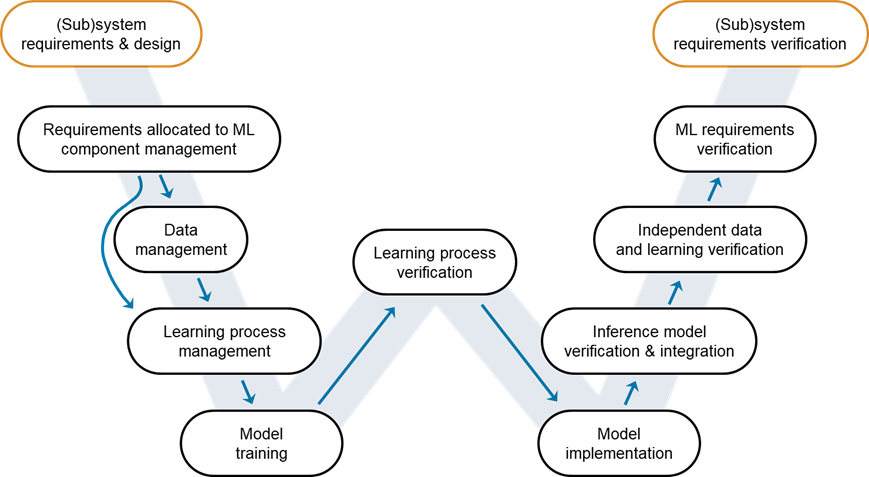

In the previous posts, we emphasised the importance of Verification and Validation (V&V) in the development of AI models, particularly for applications in safety-critical industries such as aerospace, automotive, and healthcare. Our discussion introduced the W-shaped development workflow, an adaptation of the traditional V-cycle for AI applications developed by EASA and Daedalean. Using the W-shaped workflow, we detailed the journey from setting AI requirements to training a robust pneumonia detection model with the MedMNIST v2 dataset. We covered testing the model's performance, strengthening its defenses against adversarial examples, and detecting out-of-distribution data. This process underscores the importance of comprehensive V&V in building trustworthy and secure AI systems for high-stakes applications.

Figure 1: W-shaped development process. Credit: EASA, Daedalean

It's now time to walk up the steps on the right-hand side of the W-diagram, starting with Model Implementation.

Model Implementation

The transition from the Learning Process Verification stage to the Model Implementation stage of the W-shaped development workflow marks a pivotal moment in the lifecycle of an AI project. At this point, the focus shifts from refining and verifying the AI model's learning capabilities to preparing the model for a real-world application. Successfully completing the Learning Process Verification stage gives confidence in the reliability and effectiveness of the trained model and sets the stage for adapting it into an inference model suitable for production environments. Model implementation is therefore a critical phase: it takes the AI model from a theoretical or experimental stage to a practical, operational application.

The unique code generation framework provided by MATLAB and Simulink is instrumental in this phase of the W-shaped development workflow. It enables a seamless transition of AI models from the development stage to deployment in production environments. By automatically converting models developed in MATLAB into deployable code (e.g., C/C++ and CUDA code), the framework eliminates the need to manually re-code the model in other programming languages. This automation significantly reduces the risk of introducing coding errors during translation, which is crucial for maintaining the integrity of the AI model in safety-critical applications.

Figure 2: MATLAB and Simulink code generation tools

You can check your robust deep learning model in the MATLAB workspace using the analyzeNetworkForCodegen function. It is a useful tool for assessing whether our network is ready for code generation.

```matlab
analyzeNetworkForCodegen(net)
```

```
                   Supported
                   _________

    none             "Yes"
    arm-compute      "Yes"
    mkldnn           "Yes"
    cudnn            "Yes"
    tensorrt         "Yes"
```

Confirming that the trained network is compatible with all target libraries opens up many possibilities for code generation. In scenarios where certification is a key goal, particularly in safety-critical applications, one might choose to generate code that does not use any third-party libraries (indicated by the 'none' value). This approach can simplify the certification process and also improves the model's portability and ease of integration into diverse computing environments, making it easier to deploy the AI model reliably across a range of platforms.
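As a minimal sketch of the library-free option, the configuration below targets a generic C++ static library with the target library set to "none". It assumes MATLAB Coder is installed and reuses the entry-point function `predictCodegen` and the `inputSize` variable from the GPU Coder example later in this post; the single-precision example input assumes the floating-point (unquantized) network.

```matlab
% Sketch: generic C++ static library without third-party deep learning libraries
% Assumes predictCodegen and inputSize are defined as in the GPU example
cfg = coder.config("lib");
cfg.TargetLang = "C++";
cfg.DeepLearningConfig = coder.DeepLearningConfig("TargetLibrary", "none");

% Example input for the floating-point network (assumption: single precision)
codegen -config cfg -args {ones(inputSize, "single")} predictCodegen -report
```

Because no vendor library is linked, the generated code is plain C++ that can be reviewed and analyzed like any other source in the project, at the possible cost of speed compared with cuDNN or TensorRT.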

If additional deployment requirements concerning memory footprint, fixed-point arithmetic, or other computational constraints come into play, the Deep Learning Toolbox Model Quantization Library becomes extremely useful. This support package addresses the challenges of deploying deep learning models in environments where resources are limited or where high efficiency is paramount. Through quantization, pruning, and projection techniques, the Deep Learning Toolbox Model Quantization Library significantly reduces the memory footprint and computational demands of deep neural networks.

Figure 3: Quantizing a deep neural network using the Deep Network Quantizer app

Using the Deep Network Quantizer app, we reduced our model's size by a factor of 4 by converting it from floating-point to int8 representations. Remarkably, this optimization reduced the model's accuracy on the test data by only 0.7%.
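The factor of 4 follows from storage sizes: Deep Learning Toolbox networks typically store learnable parameters in single precision (4 bytes per value), while int8 uses 1 byte. The base-MATLAB sketch below illustrates this with a simple symmetric, per-tensor int8 scheme applied to random placeholder weights. The scheme is an assumption for illustration and is not necessarily the scaling the Deep Network Quantizer applies.

```matlab
% Symmetric per-tensor int8 quantization of placeholder weights (illustration only,
% not necessarily the scheme used by the Deep Network Quantizer)
rng(0);                                   % reproducible placeholder data
w = single(randn(3, 3, 16, 32));          % hypothetical conv-layer weights

scale = max(abs(w(:))) / 127;             % map the largest magnitude to 127
wq = int8(w ./ scale);                    % int8() rounds to nearest and saturates
wDeq = single(wq) .* scale;               % dequantize to inspect the rounding error

infoSingle = whos('w');                   % memory used by the single array
infoInt8 = whos('wq');                    % memory used by the int8 array
compression = infoSingle.bytes / infoInt8.bytes;
maxAbsErr = max(abs(w(:) - wDeq(:)));     % at most scale/2, up to floating-point error

fprintf('Compression factor: %g\n', compression);
fprintf('Max abs error: %.4g (scale/2 = %.4g)\n', maxAbsErr, scale/2);
```

The per-weight rounding error is bounded by half the scale, but how that error affects classification can only be established by re-evaluating the quantized network on test data, which is how the 0.7% figure above was obtained.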

Using MATLAB Coder and GPU Coder, we can implement our AI model in C++ and CUDA to run efficiently on platforms that support these languages. This step is crucial for deploying AI models to real-time systems, where execution speed and low latency are critical. Generating this code involves setting up a configuration object, specifying the target language, and defining the deep learning configuration to use, in this case cuDNN for GPU acceleration. Because we generate code for the quantized network, the configuration also points to the saved calibration results and sets the data type to int8.

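```matlab
% Configure GPU Coder to generate a CUDA MEX function from C++ code
cfg = coder.gpuConfig("mex");
cfg.TargetLang = "C++";
cfg.GpuConfig.ComputeCapability = "6.1";   % int8 inference requires compute capability 6.1 or higher

% Use cuDNN for the deep learning layers and let it auto-tune its algorithms
cfg.DeepLearningConfig = coder.DeepLearningConfig("cudnn");
cfg.DeepLearningConfig.AutoTuning = true;

% Generate int8 code using the calibration results saved from the quantizer
cfg.DeepLearningConfig.CalibrationResultFile = "quantObj.mat";
cfg.DeepLearningConfig.DataType = "int8";

% Example input defining the size and type of the entry-point argument
% (inputSize is the network input size, e.g. net.Layers(1).InputSize)
input = ones(inputSize,"int8");

% Generate code for the entry-point function predictCodegen; -args takes a cell array
codegen -config cfg -args {input} predictCodegen -report
```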

Figure 4: GPU Coder code generation report

Inference Model Verification and Integration

The Inference Model Verification and Integration step comprises two critical, interconnected phases in deploying AI models, particularly in applications as critical as pneumonia detection. These phases are essential for turning a model from a theoretical construct into a practical, operational tool within a healthcare system.

Since the model has been transformed into an implementation (inference) form in C++ and CUDA, we need to verify that it still accurately identifies cases of pneumonia and normal conditions in new, unseen chest X-ray images, with the same level of accuracy and reliability it achieved in the development (learning) environment, where it was trained using Deep Learning Toolbox. Moreover, we must integrate the AI model into the larger system under design. This phase is pivotal because it ensures that the model not only functions in isolation but also performs as expected within the context of a complete system. This phase can often take place concurrently with the previous model implementation phase, especially when using the suite of tools provided by MathWorks.

In the Simulink harness shown in Figure 5, the deep learning model is easily integrated into the larger system using an Image Classifier block, which serves as the core component for making predictions. Surrounding this central block are subsystems dedicated to runtime monitoring, data acquisition, and visualization, creating a cohesive environment for deploying and evaluating the AI model. The runtime monitoring subsystem is crucial for assessing the model's real-time performance, ensuring that predictions are consistent with expected outcomes. It implements the out-of-distribution detector we developed in a previous post of this series. The data acquisition subsystem handles the collection and preprocessing of input data, ensuring that the model receives data in the correct format. Meanwhile, the visualization subsystem provides a graphical representation of the AI model's predictions and the system's overall performance, making it easier to interpret the model's results within the context of the broader system.

Figure 5: Simulink harness integrating the deep learning model

The output from the runtime monitor is particularly insightful. For instance, when the runtime monitor subsystem processes an image that matches the model's training data distribution, the visualization subsystem displays this outcome in green, signaling confidence in the output provided by the AI model. Conversely, when an image presents a scenario the model is less familiar with, the out-of-distribution detector highlights this anomaly in red. This distinction underscores a critical capability of a trustworthy AI system: not only producing accurate predictions within known contexts but also identifying and appropriately handling unknown examples.

In Figure 6, we can see a scenario where an example is flagged and rejected despite the model's fairly high confidence in its prediction. This illustrates the system's ability to detect and act on out-of-distribution data, thereby enhancing the overall safety and reliability of the AI application.
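The accept/reject behavior described above can be sketched in a few lines of base MATLAB. The sketch assumes, hypothetically, that the out-of-distribution detector produces one scalar score per image where larger means less familiar, and that a threshold was fixed beforehand on validation data; all scores are made-up placeholders. The gate uses only the OOD score, so a highly confident prediction can still be rejected.

```matlab
% Hypothetical runtime-monitor gate: accept a prediction only if the OOD score
% is at or below a threshold chosen offline (placeholder values throughout)
classNames = ["normal", "pneumonia"];
oodThreshold = 0.5;                        % hypothetical, tuned on validation data

% Placeholder classifier scores (rows = images) and OOD scores
softmaxScores = [0.08 0.92;                % image 1
                 0.03 0.97];               % image 2: even more confident
oodScores = [0.21; 0.83];                  % image 2 looks out-of-distribution

[confidence, idx] = max(softmaxScores, [], 2);
predicted = classNames(idx);
accepted = oodScores <= oodThreshold;      % classifier confidence is not used here

for k = 1:numel(accepted)
    if accepted(k)
        status = "ACCEPT (green)";
    else
        status = "REJECT (red)";
    end
    fprintf('Image %d: %s, confidence %.2f -> %s\n', ...
        k, predicted(k), confidence(k), status);
end
```

Keeping the monitor independent of the classifier's own confidence is the point of the design: softmax confidence can be high on unfamiliar inputs, which is exactly the case shown in Figure 6.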

Figure 6: Examples of the output of the runtime monitor subsystem: accepting predictions (left, data is considered in-distribution) and rejecting predictions (right, data is considered out-of-distribution).

At this stage, it is also crucial to consider implementing a comprehensive testing strategy, if one is not already in place. Using MATLAB Test or Simulink Test, we can develop a suite of automated tests designed to rigorously verify the functionality and performance of the AI model across various scenarios. This approach enables us to systematically validate every aspect of our work, from the accuracy of the model's predictions to its integration within the larger system.
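One automated check that fits this phase is a back-to-back comparison between the MATLAB model and the generated code. The sketch below is written as a MATLAB script-based test (each `%%` section becomes a test when the file is run with `runtests`), and it also runs top to bottom as a plain script. The score matrices and the tolerance are hypothetical placeholders; in practice `refScores` might come from `predict(net, X)` and `implScores` from the generated MEX function (named `predictCodegen_mex` by default), with the tolerance taken from your own requirements.

```matlab
% equivalenceTest.m: hypothetical script-based test comparing reference (MATLAB)
% class scores with scores produced by the generated code.

% Shared setup: placeholder scores for three images and two classes
refScores = single([0.90 0.10; 0.20 0.80; 0.60 0.40]);
implScores = refScores + single([2e-4 -2e-4; -1e-4 1e-4; 3e-4 -3e-4]);
tol = 1e-3;   % hypothetical acceptance tolerance on class scores

%% Scores agree within tolerance
maxDiff = max(abs(implScores(:) - refScores(:)));
assert(maxDiff <= tol, 'Generated code deviates from the MATLAB reference.');

%% Predicted classes are identical
[~, refClass] = max(refScores, [], 2);
[~, implClass] = max(implScores, [], 2);
assert(isequal(refClass, implClass), 'Predicted classes differ.');
```

Comparing both raw scores and final labels catches two different failure modes: numerical drift that stays within the decision margin, and drift large enough to flip a classification.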

Independent Data and Learning Verification

The Independent Data and Learning Verification phase aims to rigorously verify that the data sets have been managed appropriately throughout the data management life cycle, which becomes feasible only after the inference model has been thoroughly verified on the target platform. This phase involves an independent review to confirm that the training, validation, and test data sets comply with stringent data management requirements and are complete and representative of the application's input space.
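Parts of such a review can be automated. As one narrow, hypothetical example, the sketch below checks that every class appears in each split and that the class proportions of the validation and test sets stay within a tolerance of the training set. The label counts and the 0.05 tolerance are placeholders (not the MedMNIST v2 figures), and class balance is only one proxy for representativeness: it says nothing about, for example, acquisition conditions or patient demographics.

```matlab
% Hypothetical label vectors for three splits (placeholder counts)
classes = ["normal"; "pneumonia"];
trainY = [repmat("normal", 300, 1); repmat("pneumonia", 700, 1)];
valY   = [repmat("normal",  32, 1); repmat("pneumonia",  68, 1)];
testY  = [repmat("normal",  60, 1); repmat("pneumonia", 140, 1)];

% Fraction of samples per class, in the order given by 'classes'
classProportions = @(y) countcats(categorical(y, classes)) / numel(y);
pTrain = classProportions(trainY);
pVal   = classProportions(valY);
pTest  = classProportions(testY);

maxShift = 0.05;   % hypothetical tolerance from a data management requirement
allClassesPresent = all([pTrain pVal pTest] > 0, 'all');
balanced = all(abs([pVal pTest] - pTrain) <= maxShift, 'all');

fprintf('All classes present: %d, proportions within tolerance: %d\n', ...
    allClassesPresent, balanced);
```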

While the accessibility of the MedMNIST v2 dataset used in this example clearly helped accelerate the development process, it also highlights a fundamental challenge. Because the dataset is public, certain aspects of data verification, particularly those ensuring compliance with specific data management requirements and the complete representativeness of the application's input space, cannot be fully addressed in the traditional sense. The learning verification step is meant to confirm that the trained model has been satisfactorily verified, including the necessary coverage analyses. The data and learning requirements have been verified and will be highlighted together with the other remaining requirements in the following section.

Requirements Verification

The Requirements Verification phase concludes the W-shaped development process by verifying that the requirements have been met.

In the second post of this series, we highlighted the process of authoring requirements using Requirements Toolbox. As depicted in Figure 7, we have now reached a stage where the implemented functions and tests are directly linked to their corresponding requirements.

Figure 7: Linking of requirements with implementation and tests

This linkage is crucial for closing the loop in the development process. By running all implemented tests, we can verify that our requirements have been adequately implemented. Figure 8 illustrates the ability to run all tests directly from the Requirements Editor, confirming that every requirement has been implemented and successfully tested.
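The underlying idea can be illustrated, in simplified form, in base MATLAB: a requirement counts as verified only if it is linked to a test and that test passed. The sketch below uses hypothetical requirement IDs, test names, and results, assumes at most one test per requirement, and does not use the Requirements Toolbox API; it only shows the logic of flagging requirements that are unlinked or failing.

```matlab
% Hypothetical traceability data: each requirement links to at most one test
reqIDs = ["REQ-001"; "REQ-002"; "REQ-003"];
linkedTest = ["testAccuracy"; "testOODRejection"; ""];   % "" means not linked yet

% Hypothetical results from the last test run
testNames = ["testAccuracy"; "testOODRejection"];
testPassed = [true; true];

% A requirement is verified only if its linked test exists and passed
[hasTest, loc] = ismember(linkedTest, testNames);
isVerified = false(size(reqIDs));
isVerified(hasTest) = testPassed(loc(hasTest));

unverified = reqIDs(~isVerified);
fprintf('Verified %d of %d requirements\n', nnz(isVerified), numel(reqIDs));
fprintf('Needs attention: %s\n', strjoin(unverified, ", "));
```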

Figure 8: Running tests from within the Requirements Editor

At this point, we can confidently assert that our development process has been thorough and meticulous, ensuring that the AI model for pneumonia detection is not only accurate and robust but also practically viable. By linking each requirement to specific functions and tests, we have established clear traceability that enhances the transparency and accountability of our development efforts. Moreover, the ability to systematically verify every requirement by running tests directly from the Requirements Editor underscores our comprehensive approach to requirements verification. This marks the culmination of the W-shaped process, affirming that our AI model meets the stringent criteria set for healthcare applications.

With this level of diligence, we conclude this case study and believe we are well prepared to deploy the model, confident in its potential to accurately and reliably assist in pneumonia detection, thereby contributing to improved patient care and healthcare efficiency.

| Recall that the demonstrations and verifications discussed in this case study use a "toy" medical dataset (MedMNIST v2) for illustrative purposes. The methodologies and processes outlined are designed to highlight best practices in AI model development. They fully apply to real-world data scenarios, emphasizing the need for rigorous testing and validation to ensure the model's efficacy and reliability in clinical settings. |