TorchVision, a PyTorch laptop imaginative and prescient package deal, has a easy API for picture pre-processing in its torchvision.transforms module. The module comprises a set of widespread, composable picture transforms and provides you a simple solution to write new customized transforms. As you’ll anticipate, these customized transforms could be included in your pre-processing pipeline like every other rework from the module.

Let’s begin with a typical use case, making ready PIL pictures for one of many pre-trained TorchVision picture classifiers:

import io

import requests

import torchvision.transforms as T

from PIL import Picture

resp = requests.get('https://sparrow.dev/property/img/cat.jpg')

img = Picture.open(io.BytesIO(resp.content material))

preprocess = T.Compose([

T.Resize(256),

T.CenterCrop(224),

T.ToTensor(),

T.Normalize(

mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225]

)

])

x = preprocess(img)

x.form

# Anticipated consequence

# torch.Dimension([3, 224, 224])Right here, we apply the next so as:

- Resize a PIL picture to

(<peak>, 256), the place<peak>is the worth that maintains the facet ratio of the enter picture. - Crop the

(224, 224)heart pixels. - Convert the PIL picture to a PyTorch tensor (which additionally strikes the channel dimension to the start).

- Normalize the picture by subtracting a recognized ImageNet imply and customary deviation.

Let’s go a notch deeper to know precisely how these transforms work.

Transforms

TorchVision transforms are extraordinarily versatile – there are only a few guidelines. To be able to be composable, transforms should be callables. Which means you possibly can really simply use lambdas if you’d like:

times_2_plus_1 = T.Compose([

lambda x: x * 2,

lambda x: x + 1,

])

x.imply(), times_2_plus_1(x).imply()

# Anticipated consequence

# (tensor(1.2491), tensor(3.4982))However usually, you’ll need to use callable lessons as a result of they offer you a pleasant solution to parameterize the rework at initialization. For instance, if you already know you need to resize pictures to have peak of 256 you possibly can instantiate the T.Resize rework with a 256 as enter to the constructor:

resize_callable = T.Resize(256)Any PIL picture handed to resize_callable() will now get resized to (<peak>, 256):

resize_callable(img).measurement

# Anticipated consequence

# (385, 256)This habits is essential as a result of you’ll sometimes need TorchVision or PyTorch to be accountable for calling the rework on an enter. We really noticed this within the first instance: the part transforms (Resize, CenterCrop, ToTensor, and Normalize) have been chained and known as contained in the Compose rework. And the calling code wouldn’t have information of issues like the scale of the output picture you need or the imply and customary deviation for normalization.

Apparently, there isn’t a Rework base class. Some transforms don’t have any father or mother class in any respect and a few inherit from torch.nn.Module. Which means when you’re writing a rework class, the constructor can do no matter you need. The one requirement is that there should be a __call__() methodology to make sure the instantiated object is callable. Word: when transforms override the torch.nn.Module class, they are going to sometimes outline the ahead() methodology after which the bottom class takes care of __call__().

Moreover, there are not any actual constraints on the callable’s inputs or outputs. Just a few examples:

T.Resize: PIL picture in, PIL picture out.T.ToTensor: PIL picture in, PyTorch tensor out.T.Normalize: PyTorch tensor in, PyTorch tensor out.

NumPy arrays might also be a sensible choice generally.

Okay. Now that we all know slightly about what transforms are, let’s take a look at an instance that TorchVision offers us out of the field.

Instance Rework: Compose

The T.Compose rework takes a listing of different transforms within the constructor and applies them sequentially to the enter. We will check out the __init__() and __call__() strategies from a latest commit hash to see how this works:

class Compose:

def __init__(self, transforms):

self.transforms = transforms

def __call__(self, img):

for t in self.transforms:

img = t(img)

return imgQuite simple! You possibly can go the T.Compose constructor a listing (or every other in-memory sequence) of callables and it’ll dutifully apply them to any enter one by one. And see that the enter img could be any kind you need. Within the first instance, the enter was PIL and the output was a PyTorch tensor. Within the second instance, the enter and output have been each tensors. T.Compose doesn’t care!

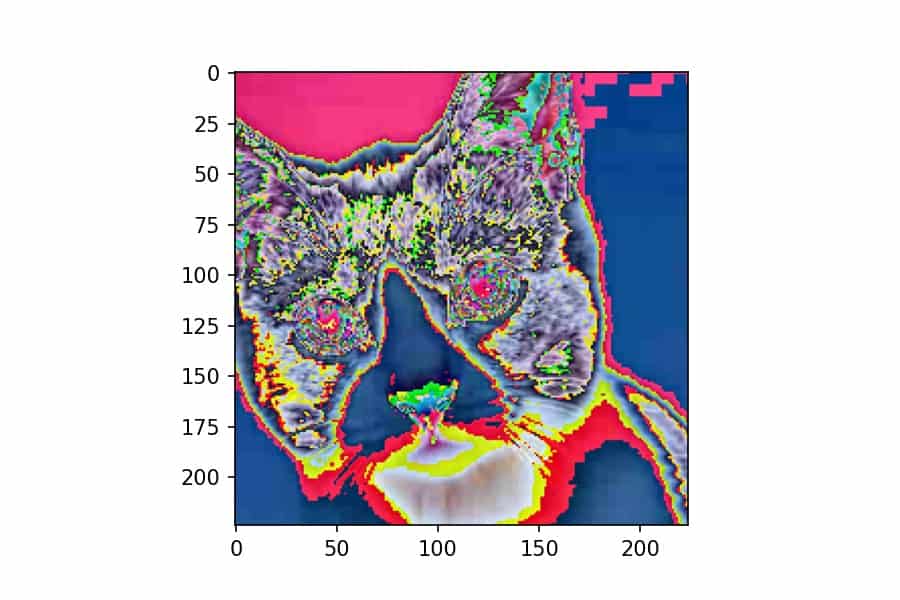

Let’s instantiate a brand new T.Compose rework that may allow us to visualize PyTorch tensors. Keep in mind, we took a PIL picture and generated a PyTorch tensor that’s prepared for inference in a TorchVision classifier. Let’s take a PyTorch tensor from that transformation and convert it into an RGB NumPy array that we are able to plot with Matplotlib:

%matplotlib inline

import matplotlib.pyplot as plt

import numpy as np

reverse_preprocess = T.Compose([

T.ToPILImage(),

np.array,

])

plt.imshow(reverse_preprocess(x));The T.ToPILImage rework converts the PyTorch tensor to a PIL picture with the channel dimension on the finish and scales the pixel values as much as int8. Then, since we are able to go any callable into T.Compose, we go within the np.array() constructor to transform the PIL picture to NumPy. Not too dangerous!

Purposeful Transforms

As we’ve now seen, not all TorchVision transforms are callable lessons. Actually, TorchVision comes with a bunch of good practical transforms that you just’re free to make use of. In case you take a look at the torchvision.transforms code, you’ll see that just about the entire actual work is being handed off to practical transforms.

For instance, right here’s the practical model of the resize logic we’ve already seen:

import torchvision.transforms.practical as F

F.resize(img, 256).measurement

# Anticipated consequence

# (385, 256)It does the identical work, however it’s important to go further arguments in whenever you name it. My recommendation: use practical transforms for writing customized rework lessons, however in your pre-processing logic, use callable lessons or single-argument features which you could compose.

At this level, we all know sufficient about TorchVision transforms to write down one among our personal.

Customized Transforms

Let’s write a customized rework that erases the highest left nook of a picture with the colour of a randomly chosen pixel. We’ll use the F.erase() perform and we’ll permit the caller to specify what what number of pixels they need to erase in each instructions:

import torch

class TopLeftCornerErase:

def __init__(self, n_pixels: int):

self.n_pixels = n_pixels

def __call__(self, img: torch.Tensor) -> torch.Tensor:

all_pixels = img.reshape(3, -1).transpose(1, 0)

idx = torch.randint(len(all_pixels), (1,))[0]

random_pixel = all_pixels[idx][:, None, None]

return F.erase(img, 0, 0, self.n_pixels, self.n_pixels, random_pixel)Within the constructor, all we do is take the variety of pixels as a parameter from the caller. The magic occurs within the __call__() methodology:

- Create a reshaped view of the picture tensor as a

(n_pixels, 3)tensor - Randomly choose a pixel index utilizing

torch.randint() - Add two dummy dimensions to the tensor. It’s because

F.erase()and to the picture, which has these two dimensions. - Name and return

F.erase(), which takes 5 arguments: the tensor, theicoordinate to start out at, thejcoordinate to start out at, thepeakof the field to erase, thewidthof the field to erase and the random pixel.

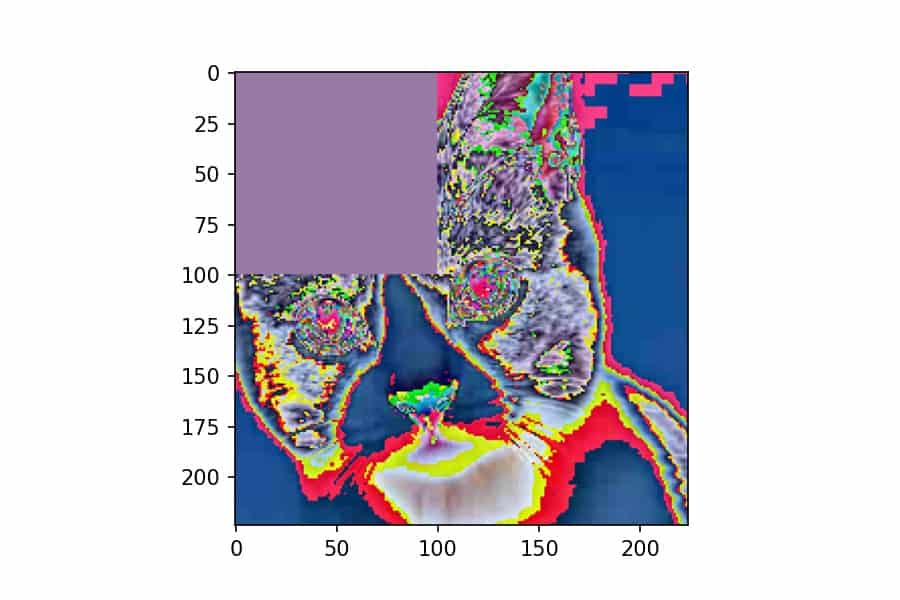

We will apply this tradition rework similar to every other rework. Let’s use T.Compose to each apply this erase rework after which convert it to NumPy for plotting:

torch.manual_seed(1)

erase = T.Compose([

TopLeftCornerErase(100),

reverse_preprocess,

])

plt.imshow(erase(x));We’ve seen this sort of rework composition a number of occasions now. One factor that’s essential to level out is that that you must name torch.manual_seed() if you’d like a deterministic (and due to this fact reproducible) consequence for any TorchVision rework that has random habits in it. That is new as of model 0.8.0.

And that’s about all there may be to learn about TorchVision transforms! They’re light-weight and versatile, however utilizing them will make your picture preprocessing code a lot simpler to cause about.