Addressing the challenges of caching a GraphQL API using API gateways

GraphQL is often used as an API gateway for microservices, but it also works well alongside existing API gateway solutions, such as Kong, an API gateway and microservice management platform. Kong has many features you can use to enhance GraphQL APIs. One of them is adding a proxy cache in front of the upstream services you register with Kong. When it comes to caching GraphQL, though, there are several caveats to consider because of how GraphQL works. In this post, we'll go over the challenges of caching a GraphQL API and how an API gateway can help with them.

Caching is an important part of any API: you want your users to get the best possible response time on their requests. Of course, caching is not a one-size-fits-all solution, and there are several strategies for different scenarios.

Suppose your API is a high-traffic, critical application. Many users will hit it hard, and you want to make sure it can scale with demand. Caching can help by reducing the load on your servers and improving performance for all users, while potentially lowering costs and providing additional security benefits. With Kong, caching is often applied at the proxy layer: the proxy caches requests and, if a response for a call to an endpoint already exists in the cache, serves that stored response instead of forwarding the request.
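To make the proxy-layer idea concrete, here is a minimal sketch in bash (version 4 or later) of what a caching proxy does. The first request for a given key goes to the upstream, and identical requests after that are answered from memory. The call_upstream function and the cache layout are hypothetical stand-ins, not how Kong is implemented.

```shell
#!/usr/bin/env bash
# Minimal sketch of a caching proxy (requires bash 4+ for associative arrays).
# Identical requests are answered from an in-memory store, so only the first
# one reaches the simulated upstream.
declare -A cache=()   # request body -> cached response
upstream_calls=0

# Hypothetical stand-in for the real upstream GraphQL service.
call_upstream() {
  upstream_calls=$((upstream_calls + 1))
  response="response for: $1"
}

# Serve from the cache when possible; otherwise call the upstream and store
# the result. Results go into globals (cache_status, response) rather than
# stdout, because $(...) would run in a subshell and lose the cache updates.
proxy_request() {
  local key=$1
  if [[ -n ${cache[$key]+set} ]]; then
    cache_status=Hit
    response=${cache[$key]}
  else
    cache_status=Miss
    call_upstream "$key"
    cache[$key]=$response
  fi
}

# 100 identical requests: 1 Miss followed by 99 Hits.
for i in {1..100}; do
  proxy_request '{"query":"{ getCustomerList { name } }"}'
done
echo "upstream calls: $upstream_calls, last status: $cache_status"
```

Running it prints "upstream calls: 1, last status: Hit". The upstream handles only one call for 100 identical requests, and that is where the load reduction comes from.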

GraphQL APIs come with their own caching challenges, and you need to be aware of them before implementing a caching strategy, as you'll learn next.

GraphQL has become increasingly popular among companies. It is best described as a query language for APIs. It's declarative: you describe what you want your data to look like and leave the details of fulfilling that request to the server. This is good for us because it makes our code more readable and maintainable by separating concerns into client-side and server-side implementations.

GraphQL APIs can solve challenges like over-fetching and under-fetching. Under-fetching is closely related to the N+1 problem, where a client needs one request for a list plus one more request per item in it. But as with any API, a poorly designed GraphQL API can result in some horrendous N+1 issues. Users control the shape of a GraphQL response by modifying the query they send to the GraphQL API, and they can even nest the data several levels deep. There are certainly ways to alleviate this problem on the backend through resolver design or caching strategies. But what if there were a way to make your GraphQL API blazing fast without changing a single line of code?
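The following hypothetical bash sketch shows where the N+1 count comes from. It counts upstream calls for a nested query, such as a customer list together with each customer's orders. The orders field, the customer data, and all function names are made up for illustration. Naive resolvers fetch the orders once per customer, while a batched resolver (in the style of the DataLoader pattern) fetches them in a single call.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of the N+1 pattern. Each fetch_* function stands in for
# one upstream call (a SQL query or REST request) and only counts the calls.
customer_ids=(1 2 3 4 5)   # N = 5 customers (made-up data)
upstream_calls=0

fetch_customers()      { upstream_calls=$((upstream_calls + 1)); }  # the list
fetch_orders_for()     { upstream_calls=$((upstream_calls + 1)); }  # one customer
fetch_orders_batched() { upstream_calls=$((upstream_calls + 1)); }  # all customers at once

# Naive nested resolvers: 1 call for the list + 1 call per customer = N+1.
resolve_naive() {
  upstream_calls=0
  fetch_customers
  local id
  for id in "${customer_ids[@]}"; do
    fetch_orders_for "$id"
  done
}

# Batched resolvers (DataLoader-style): 1 call for the list + 1 batched call.
resolve_batched() {
  upstream_calls=0
  fetch_customers
  fetch_orders_batched "${customer_ids[@]}"
}

resolve_naive;   echo "naive:   $upstream_calls calls"   # 6 for N = 5
resolve_batched; echo "batched: $upstream_calls calls"   # 2
```

The naive count grows with every extra customer in the list. A proxy cache does not change this count, but it avoids repeating the whole chain of calls for an identical query.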

StepZen also provides caching for queries and mutations that rely on @rest; see the StepZen documentation for more information.

By using Kong and the GraphQL Proxy Cache Advanced plugin, you absolutely can! Let's take a look.

When you use Kong as an API gateway, it can proxy requests to your upstream services, including GraphQL APIs. With the enterprise plugin GraphQL Proxy Cache Advanced, you can add proxy caching to any GraphQL API, and you can enable it on a GraphQL service, on a route, or even globally. Let's try this out by adding a GraphQL service created with StepZen to Kong.

With StepZen, you can create GraphQL APIs declaratively by using the CLI or by writing GraphQL SDL (Schema Definition Language). It's a language-agnostic way to create GraphQL APIs because it relies entirely on GraphQL SDL. The only thing you need to get started is an existing data source, for example, a (No)SQL database or a REST API. You can then generate a schema and resolvers for this data source using the CLI, or write the connections yourself in GraphQL SDL. The GraphQL schema can then be deployed to the cloud directly from your terminal, with built-in authentication.

To get a GraphQL API using StepZen, you can connect your own data source or use one of the pre-built examples from GitHub. In this post, we'll use a GraphQL API built on top of a MySQL database from the examples. By cloning this repository to your local machine, you'll get a set of configuration files and .graphql files containing the GraphQL schema. To run the GraphQL API and deploy it to the cloud, you need the StepZen CLI.

You can install the CLI from npm:

npm i -g stepzen

After installing the CLI, you can sign up for a StepZen account to deploy the GraphQL API on a private, secured endpoint. You can also continue without signing up, but keep in mind that the deployed GraphQL endpoint will then be public. To start and deploy the GraphQL API, run:

stepzen start

Running this command prints the deployed endpoint in your terminal (like https://public3b47822a17c9dda6.stepzen.net/api/with-mysql/__graphql). It also gives you a localhost address that you can use to explore the GraphQL API locally with GraphiQL.

The service you created in the previous step can now be added to your Kong gateway using Kong Manager or the Admin API. Let's use the Admin API to add our newly created GraphQL API to Kong, which only requires a few cURL requests. First, we add the service, and then we add a route for this service.

You can run the following commands to add the GraphQL service and route. Depending on the setup of your Kong gateway, you might need to add authentication headers to your requests:

curl -i -X POST \
  --url http://localhost:8001/services/ \
  --data 'name=graphql-service' \
  --data 'url=https://public3b47822a17c9dda6.stepzen.net/api/with-mysql/__graphql'

curl -i -X POST \
  --url http://localhost:8001/services/graphql-service/routes \
  --data 'hosts[]=stepzen.net'

The GraphQL API has now been added as an upstream service to the Kong gateway, which means you can already proxy requests to it. Before doing so, let's add the GraphQL Proxy Cache Advanced plugin to it, which also takes a single cURL command:

curl -i -X POST \
  --url http://localhost:8001/services/graphql-service/plugins/ \
  --data 'name=graphql-proxy-cache-advanced' \
  --data 'config.strategy=memory'

With Kong proxying the GraphQL API and this plugin enabled, all requests are cached automatically at the query level. The default TTL (Time To Live) of the cache is 300 seconds. You can override it, for example to 600 seconds, by adding --data 'config.cache_ttl=600' to the command above. Next, we'll explore the cache by querying the proxied GraphQL endpoint.

The proxied GraphQL API is available through Kong at your gateway endpoint. When you send a request to it, Kong expects a Host header whose value matches the host configured on the route (stepzen.net in this example). Because this is a request to a GraphQL API, all requests should be POST requests with the Content-Type: application/json header and the GraphQL query in the body.
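Writing that JSON body by hand means escaping every newline and double quote in the query. A small bash helper can do this for you. The name graphql_body is hypothetical, and the sketch escapes only backslashes, double quotes, and newlines, so it is not a full JSON encoder.

```shell
#!/usr/bin/env bash
# Build the JSON body of a GraphQL POST request from a (multi-line) query.
# Minimal sketch: it escapes only backslashes, double quotes and newlines,
# which covers typical queries; use a real JSON tool for anything else.
graphql_body() {
  local query=$1
  local bs='\'
  query=${query//"$bs"/"$bs$bs"}     # \  -> \\  (must run first)
  query=${query//'"'/"$bs"'"'}       # "  -> \"
  query=${query//$'\n'/"${bs}n"}     # newline -> \n
  printf '{"query":"%s","variables":{}}' "$query"
}

query='query getCustomerList {
  getCustomerList {
    name
  }
}'
graphql_body "$query"; echo
```

You could then pass the result to cURL with --data-raw "$(graphql_body "$query")", together with the Host and Content-Type headers described above.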

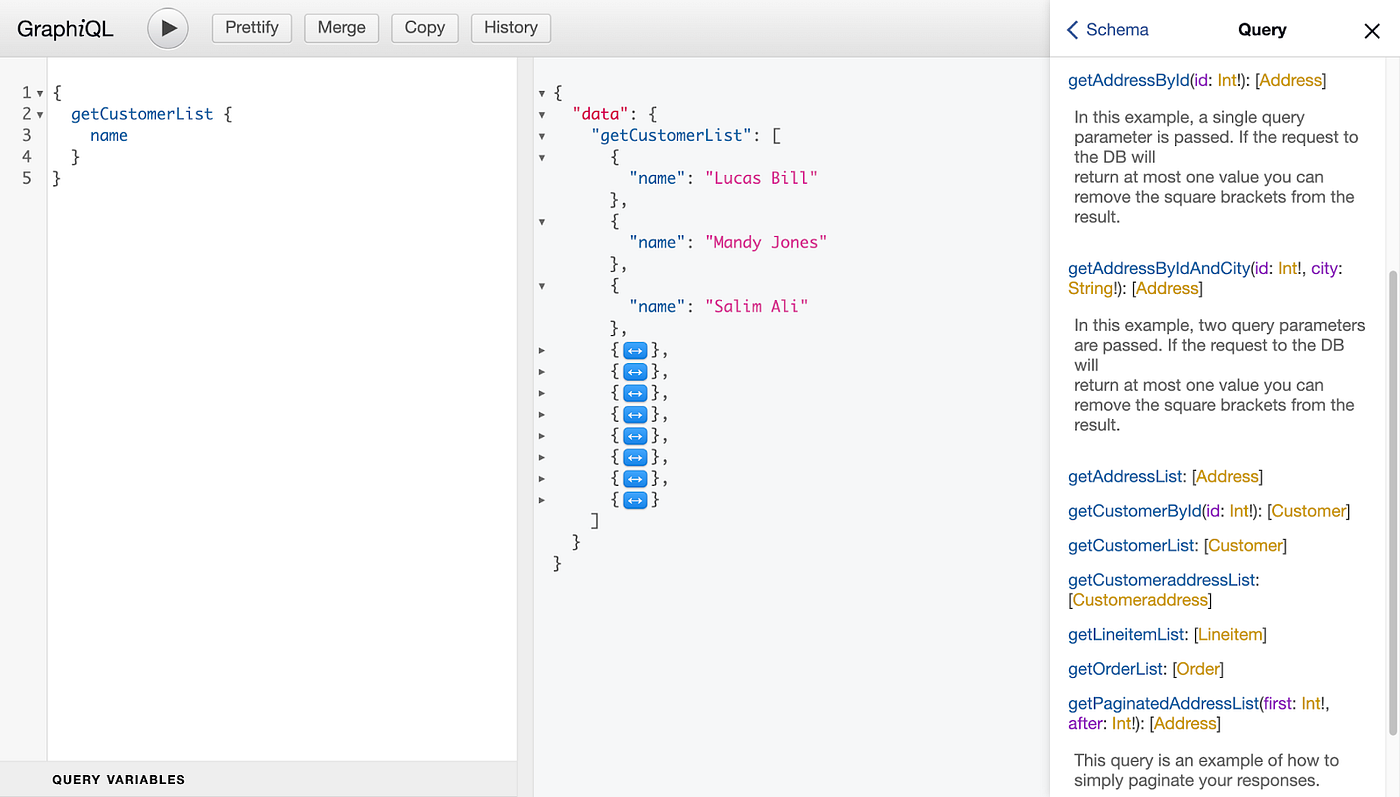

To send a request to the GraphQL API, you first need to construct a GraphQL query. When you visit the endpoint where the GraphQL API is deployed, you'll find a GraphiQL interface, an interactive playground for exploring the GraphQL API. In this interface, you can find the complete schema, including the queries and any other operations the GraphQL API accepts.

In this interface, you can send queries and explore both the result and the schema, as shown above. To send the same query to the proxied GraphQL API, you can use the following cURL command:

curl -i -X POST 'http://localhost:8000/' \
  --header 'Host: stepzen.net' \
  --header 'Content-Type: application/json' \
  --data-raw '{"query":"query getCustomerList {\n getCustomerList {\n name\n }\n}","variables":{}}'

This cURL command returns both the response headers and the response body:

HTTP/1.1 200 OK
Content-Type: application/json; charset=utf-8
Content-Length: 445
Connection: keep-alive
X-Cache-Key: 5abfc9adc50491d0a264f1e289f4ab1a
X-Cache-Status: Miss
StepZen-Trace: 410b35b512a622aeffa2c7dd6189cd15
Vary: Origin
Date: Wed, 22 Jun 2022 14:12:23 GMT
Via: kong/2.8.1.0-enterprise-edition
Alt-Svc: h3=":443"; ma=2592000,h3-29=":443"; ma=2592000
X-Kong-Upstream-Latency: 256
X-Kong-Proxy-Latency: 39

{
  "data": {
    "getCustomerList": [
      {
        "name": "Lucas Bill"
      },
      {
        "name": "Mandy Jones"
      },
      …
    ]
  }
}

The output above shows the response of the GraphQL API: a list of fake customers with the same data structure as the query we sent. The response headers are printed too, and two of them deserve attention: X-Cache-Status and X-Cache-Key. The first shows whether the cache was hit, and the second shows the key of the cached response. To build the cache key, Kong looks at the endpoint you send the request to and at the contents of the POST body, which contains the query and any query variables. The GraphQL Proxy Cache Advanced plugin does not cache parts of a response. If you reuse parts of a GraphQL query in a different request, that request gets its own cache entry.
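The hypothetical sketch below illustrates why a reused part of a query does not share a cache entry. This post does not document the plugin's exact key derivation, so this sketch assumes a key built by hashing the target together with the raw POST body using MD5. MD5 was chosen only because the X-Cache-Key values above are 32 hexadecimal characters, which matches the length of an MD5 digest. The email field is also made up.

```shell
#!/usr/bin/env bash
# Hypothetical illustration: the plugin's real key derivation may differ.
# Assumed idea: key = MD5(target + raw POST body), so any change to the body,
# even one extra field, produces a different key and a separate cache entry.
# Requires md5sum (GNU coreutils).
cache_key() {
  local target=$1 body=$2
  printf '%s\n%s' "$target" "$body" | md5sum | cut -d' ' -f1
}

original='{"query":"{ getCustomerList { name } }","variables":{}}'
# Hypothetical variant that reuses the same field and adds an email field.
extended='{"query":"{ getCustomerList { name email } }","variables":{}}'

echo "original: $(cache_key graphql-service "$original")"
echo "extended: $(cache_key graphql-service "$extended")"
```

Both keys are 32 hexadecimal characters, but they differ. The extended query therefore cannot reuse the cached response of the original query, even though it asks for the same name field.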

On the first request, the value of X-Cache-Status will always be Miss, because no cached response is available yet. X-Cache-Key, however, always has a value, because a cache entry is created for the request. Its value is therefore either the key of the newly created cached response or the key of an existing cached response from a previous request.

Let's make this request again and focus on the values of these two response headers:

X-Cache-Status: Hit
X-Cache-Key: 5abfc9adc50491d0a264f1e289f4ab1a

The second time we call the proxied GraphQL API endpoint, the cache is hit, and the cache key has the same value as for the first request. This means the response to the previous request was cached. Instead of forwarding the request to the upstream GraphQL API, Kong served the cached response, as the cache status indicates. Unless you change the default TTL, the cached response stays available for 300 seconds. If you send the same request to the proxied GraphQL API after the TTL expires, the cache status will be Miss again.
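The Hit/Miss sequence and the TTL can be simulated in a few lines of bash. This is a hypothetical model, not the plugin's code. It only records when each key was stored, takes the current time as an argument, and uses the 300-second default TTL. In this sketch, an entry counts as expired once 300 seconds have elapsed.

```shell
#!/usr/bin/env bash
# Simulated TTL cache reporting X-Cache-Status-style values (requires bash 4+).
# Times are passed in explicitly (in seconds) so the expiry logic is easy to test.
# A real cache also stores the response body; this sketch tracks timestamps only.
declare -A stored_at=()   # cache key -> time the entry was stored
cache_ttl=300             # the plugin's default TTL, as described above

# Usage: lookup KEY NOW -- sets the global cache_status to Hit or Miss.
lookup() {
  local key=$1 now=$2
  local created=${stored_at[$key]-}
  if [[ -n $created ]] && (( now - created < cache_ttl )); then
    cache_status=Hit
  else
    cache_status=Miss
    stored_at[$key]=$now    # create (or refresh) the entry with a fresh TTL
  fi
}

key=5abfc9adc50491d0a264f1e289f4ab1a
lookup "$key" 0;   echo "t=0:   $cache_status"   # Miss: nothing cached yet
lookup "$key" 10;  echo "t=10:  $cache_status"   # Hit: within the TTL
lookup "$key" 310; echo "t=310: $cache_status"   # Miss: expired, stored again
```

After the Miss at t=310, the entry is stored again with a fresh TTL, so identical requests hit the cache for another 300 seconds.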

You can also inspect a cached response directly by sending a request to the GraphQL Proxy Cache Advanced plugin through the Admin API of your Kong instance. To get the cached response from the previous request, you can use the following endpoint:

curl http://localhost:8001/graphql-proxy-cache-advanced/5abfc9adc50491d0a264f1e289f4ab1a

This request returns HTTP 200 OK if the cached value exists and HTTP 404 Not Found if it doesn't. Using the Kong Admin API, you can also delete a cached response by its key or even purge all cached responses. See the Delete cached entity section of the GraphQL Proxy Cache Advanced documentation for more information on this subject.
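If you inspect or clear the cache often, a few shell helpers can keep the Admin API calls short. ADMIN_URL and the helper names are hypothetical. The GET route is the one shown above. The two DELETE routes (delete by key, and purge all) are based on the plugin's Admin API documentation, so verify them against your Kong version. Add authentication headers if your gateway requires them.

```shell
#!/usr/bin/env bash
# Hypothetical helpers around the Kong Admin API for GraphQL Proxy Cache Advanced.
# ADMIN_URL defaults to the Admin API address used in this post.
ADMIN_URL=${ADMIN_URL:-http://localhost:8001}

# Print the HTTP status code for a cache key (200 = cached, 404 = absent).
cache_http_status() {
  curl -s -o /dev/null -w '%{http_code}' "$ADMIN_URL/graphql-proxy-cache-advanced/$1"
}

# Remove one cached response by its key (route assumed from the plugin docs).
cache_delete() {
  curl -s -X DELETE "$ADMIN_URL/graphql-proxy-cache-advanced/$1"
}

# Purge every cached response held by the plugin (route assumed from the docs).
cache_purge() {
  curl -s -X DELETE "$ADMIN_URL/graphql-proxy-cache-advanced"
}

# Translate a status code from cache_http_status into a readable description.
describe_cache_status() {
  case $1 in
    200) echo "cached" ;;
    404) echo "not cached" ;;
    *)   echo "unexpected status: $1" ;;
  esac
}
```

For example, describe_cache_status "$(cache_http_status 5abfc9adc50491d0a264f1e289f4ab1a)" should print "cached" while the entry is still within its TTL, and "not cached" after cache_delete or cache_purge.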

This post showed you how to add a GraphQL API to Kong to create a proxied GraphQL endpoint. By adding it to Kong, you not only get all the features Kong offers as a proxy service, but you can also implement caching for the GraphQL API. You can do this by installing the GraphQL Proxy Cache Advanced plugin, which is available for Plus and Enterprise instances. With this plugin, you can implement proxy caching for GraphQL APIs and cache the response of any GraphQL request.
