This article starts by formulating the problem of extracting emails from any website using Python, gives you an overview of solutions, and then goes into great detail about each solution for beginners.

By the end of this article, you'll know how the two methods for extracting emails from a website compare. Keep reading to find out.

You may want to read our disclaimer on web scraping here:

⚖️ Recommended Tutorial: Is Web Scraping Legal?

You can find the full code of both web scrapers on our GitHub here. 👈

Problem Formulation

Marketers build email lists to generate leads.

Statistics show that 33% of marketers send weekly emails, and 26% send emails multiple times per month. An email list is a fantastic tool for both companies and job seekers.

For instance, to find out about job openings, you can look up the email address of an employee at the company you want to work for.

However, manually locating, copying, and pasting emails into a CSV file takes time, costs money, and is prone to error. There are plenty of online tutorials for building email extraction bots.

When trying to extract emails from a website, these bots run into some difficulties, notably long extraction times and unexpected errors.

So how can you obtain email addresses from a company website in the most efficient way? How can we use the powerful Python programming language to extract the data?

Method Summary

This post presents two ways to extract emails from websites, called Direct Email Extraction and Indirect Email Extraction.

💡 With the direct email extraction method, our Python code searches for emails on the target pages of a given company or specific website.

For instance, when a user enters “www.scrapingbee.com”, our Python email extractor bot scrapes the website's URLs. It then uses regular expressions to look for emails before saving them in a CSV file.

💡 The second method, indirect email extraction, leverages Google.com's Search Engine Results Page (SERP) to extract email addresses instead of crawling a specific website.

For instance, a user may type “scrapingbee.com” as the website name. The email extractor bot searches Google for this term, extracts email addresses from the search results using regex, and stores them in a CSV file.

👉 In the next section, you'll learn about these methods in more detail.

Both methods are excellent email list-building tools.

As mentioned, the main problem with other email extraction methods posted online is that they crawl hundreds of irrelevant URLs that contain no emails, so a single run can take several hours.

Keep reading to discover our two methods.

Solution

Method 1: Direct Email Extraction

This method outlines the step-by-step process for obtaining email addresses from a specific website.

Step 1: Install Libraries.

Using the pip command, install the third-party Python libraries below. The re, collections, and urllib modules ship with Python's standard library, so they don't need to be installed.

- You can use the regular expression module (re) to match the format of an email address.
- You can use the requests module to send HTTP requests.
- bs4 is Beautiful Soup, used to parse the downloaded web pages.
- The deque class from the collections module stores the URLs waiting to be scraped.
- The urlsplit() function from the urllib.parse module splits a URL into five components.
- The emails are stored in a pandas DataFrame for further processing.
- You can use the tld library to keep only relevant emails.

pip install requests
pip install bs4
pip install lxml
pip install pandas
pip install tld

(lxml is the parser passed to BeautifulSoup in Step 7.)

Step 2: Import Libraries.

Import the libraries as shown below:

import re
import requests
from bs4 import BeautifulSoup
from collections import deque
from urllib.parse import urlsplit
import pandas as pd
from tld import get_fld

Step 3: Create User Input.

Ask the user to enter the website to extract emails from with the input() function, and store it in the variable user_url:

user_url = input("Enter the website url to extract emails: ")

# Prepend a scheme if the user typed only the host, e.g. www.scrapingbee.com
if not user_url.startswith(("http://", "https://")):
    user_url = "https://" + user_url

Step 4: Set Up Variables.

Before writing the main code, let's define some variables.

Create two variables to store the URLs that have and have not yet been scraped:

unscraped_url = deque([user_url])
scraped_url = set()

The deque container holds the URLs that have not been scraped yet, and the URLs that have already been scraped are stored in a set.

The variable list_emails holds the retrieved emails:

list_emails = set()

Using a set eliminates duplicate emails, so only unique emails are kept.

Let's move on to the main loop of our program.

Step 5: Adding URLs for Content Extraction.

To start extracting content, each web page URL is moved from unscraped_url to scraped_url:

while len(unscraped_url):
    url = unscraped_url.popleft()
    scraped_url.add(url)

The popleft() method removes a URL from the left side of the deque and saves it in the url variable.

The url is then recorded in scraped_url with the add() method.
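To see how the deque and the sets work together, here is a minimal offline sketch of the same breadth-first loop. The links dictionary is a hypothetical stand-in for the pages and anchors a real crawl would download, so nothing is fetched from the network.

```python
from collections import deque

# Hypothetical in-memory "site": each page maps to the links found on it
links = {
    "https://example.com/": ["https://example.com/about", "https://example.com/contact"],
    "https://example.com/about": ["https://example.com/", "https://example.com/contact"],
    "https://example.com/contact": ["https://example.com/about"],
}

unscraped_url = deque(["https://example.com/"])  # URLs still to visit (first in, first out)
scraped_url = set()                              # URLs already visited
visit_order = []

while unscraped_url:
    url = unscraped_url.popleft()  # take the oldest queued URL
    scraped_url.add(url)
    visit_order.append(url)
    for link in links.get(url, []):
        # queue a link only if it is neither queued nor already visited
        if link not in unscraped_url and link not in scraped_url:
            unscraped_url.append(link)

print(visit_order)
# ['https://example.com/', 'https://example.com/about', 'https://example.com/contact']
```

Each page is visited exactly once even though the pages link back to each other, because the membership checks against the deque and the set stop repeats.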

Step 6: Split URLs and Merge Them with the Base URL.

The website contains relative links that you cannot access directly.

Therefore, we must merge the relative links with the base URL. We need the urlsplit() function to do this.

elements = urlsplit(url)

The elements variable now holds the URL split into its components, as shown below:

SplitResult(scheme='https', netloc='www.scrapingbee.com', path='/', query='', fragment='')

In the example above, the URL https://www.scrapingbee.com/ is split into scheme, netloc, path, and other components.

The netloc attribute of the split result contains the website's domain name. Read on to see how this helps our program.

base_url = "{0.scheme}://{0.netloc}".format(elements)

This line creates the base URL by merging the scheme and netloc.

The base URL is the main website's URL, i.e., what you type into the browser's address bar to open the site.
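A quick way to check what urlsplit() returns is to run it on a sample URL. The path, query, and fragment below are made up for illustration, and base_url_of is a hypothetical helper that wraps the format string from above.

```python
from urllib.parse import urlsplit


def base_url_of(url):
    """Return scheme://netloc, using the same format string as above."""
    elements = urlsplit(url)
    return "{0.scheme}://{0.netloc}".format(elements)


# Hypothetical URL with a path, a query and a fragment
elements = urlsplit("https://www.scrapingbee.com/blog/some-post?ref=1#top")
print(elements)
# SplitResult(scheme='https', netloc='www.scrapingbee.com', path='/blog/some-post', query='ref=1', fragment='top')
print(base_url_of("https://www.scrapingbee.com/blog/some-post"))
# https://www.scrapingbee.com
```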

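When the URL being scraped points to a page below the site root, we also need that page's URL up to and including its last slash (the base URL in the simplest case), so that relative links found on the page can be completed later. The following code stores it in the path variable: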

if '/' in elements.path:
    part = url.rfind("/")
    path = url[0:part + 1]
else:
    path = url

Let's understand how each line of the code above works.

Suppose the user enters the following URL:

This URL points to a page on the site, and the code above converts it to the base URL (https://www.scrapingbee.com/). Let's see how it works.

If the condition finds a “/” in the path of the URL, the rfind() method returns the position of the last slash “/” in the URL. Here, the last “/” is at index 27 (counting from 0).

The next line slices the URL from index 0 up to, but not including, index 27 + 1 = 28, which gives https://www.scrapingbee.com/. Thus, the URL is converted to the base URL.

In the else branch, the URL has no path, so it is already the base URL and is stored unchanged in the path variable.
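The rfind() arithmetic can be checked directly. This sketch assumes a hypothetical page URL, https://www.scrapingbee.com/pricing, whose last slash sits at index 27; directory_of is a hypothetical helper that mirrors the if/else above. For comparison, it also shows the standard library's urljoin(), which resolves a relative link against a page URL in one call.

```python
from urllib.parse import urljoin, urlsplit


def directory_of(url):
    """Mirror the if/else above: keep the URL up to and including its last '/'."""
    if "/" in urlsplit(url).path:
        part = url.rfind("/")
        return url[0:part + 1]
    return url


url = "https://www.scrapingbee.com/pricing"  # hypothetical page URL
print(url.rfind("/"))     # 27
print(directory_of(url))  # https://www.scrapingbee.com/

# The standard library's urljoin() resolves a relative link against the page URL
print(urljoin(url, "faq"))  # https://www.scrapingbee.com/faq
```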

The following line prints the URL the program is currently scraping.

print("Searching for Emails in %s" % url)

Step 7: Extract Emails from the URLs.

An HTTP GET request fetches the page at the current URL:

response = requests.get(url)

Then, extract all email addresses from the response using a regular expression, and add them to the list_emails set.

new_emails = re.findall(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b", response.text, re.I)

list_emails.update(new_emails)

The regular expression is built to match email address syntax, and the matches are stored in the new_emails variable. It is applied to the page content returned by response.text, and the re.I flag makes the match case-insensitive. Finally, the list_emails set is updated with the new emails.
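Here is the regular expression applied to a small, made-up piece of page text, so you can see what it captures and how the set drops duplicates. The sample text is hypothetical.

```python
import re

# Same pattern as above: local part, "@", domain, ".", top-level domain of 2+ letters
EMAIL_RE = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b", re.I)

# Hypothetical page text
sample_text = """
<p>Contact us at info@example.com or Sales@Example.com.</p>
<p>Press: press@example.com, info@example.com</p>
"""

list_emails = set()
list_emails.update(EMAIL_RE.findall(sample_text))
print(sorted(list_emails))
# ['Sales@Example.com', 'info@example.com', 'press@example.com']
```

The set removes the repeated info@example.com but keeps Sales@Example.com as a separate entry: the regex ignores case while matching, yet set membership is still case-sensitive.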

The next step is to find all the links on the page and extract them, so that the email addresses on those pages can be retrieved as well. You can use the powerful Beautiful Soup module for this task.

soup = BeautifulSoup(response.text, 'lxml')

The BeautifulSoup constructor parses the HTML document of the current web page, as shown above.

You can see how many emails have been extracted so far with the following line:

print("Email Extracted: " + str(len(list_emails)))

The URLs linked from the page can be found in the href attributes of “a” anchor tags.

for tag in soup.find_all("a"):
    if "href" in tag.attrs:
        weblink = tag.attrs["href"]
    else:
        weblink = ""

Beautiful Soup finds all the anchor tags “a” on the page.

If a tag has an href attribute, its URL is stored in the weblink variable; otherwise, weblink is set to an empty string.
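If you want to experiment with link extraction without installing Beautiful Soup, the standard library's html.parser can collect the same href values. This is a swapped-in technique rather than the article's code; LinkCollector is a hypothetical class name and the HTML snippet is made up.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag (stdlib stand-in for soup.find_all("a"))."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # attrs is a list of (name, value) pairs; fall back to "" like the code above
            self.links.append(dict(attrs).get("href") or "")


html = ('<a href="/#pricing">Pricing</a>'
        '<a href="/#faq">FAQ</a>'
        '<a class="btn">No link</a>')  # hypothetical snippet

collector = LinkCollector()
collector.feed(html)
print(collector.links)  # ['/#pricing', '/#faq', '']
```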

if weblink.startswith('/'):
    weblink = base_url + weblink
elif not weblink.startswith('https'):
    weblink = path + weblink

Such an href contains only a link to a specific page: it starts with “/” followed by the page name, with no base URL.

For instance, you can see the following links on the ScrapingBee website:

<a href="/#pricing" class="block hover:underline">Pricing</a><a href="/#faq" class="block hover:underline">FAQ</a><a href="/documentation" class="text-white hover:underline">Documentation</a>

The code above therefore combines the extracted href link with the base URL.

For example, for the pricing link, the weblink variable becomes:

weblink = "https://www.scrapingbee.com/#pricing"

In some cases, the href starts with neither “/” nor “https”; in that case, the code combines the path with that link.

For example, an href can look like this:

<a href="mailto:assist@scrapingbee.com?subject=Enterprise plan&body=Hello there, I'd like to discuss the Enterprise plan." class="btn btn-sm btn-black-o w-full mt-13">1-click quote</a>
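The following sketch wraps the if/elif rule above in a hypothetical resolve_link() function and runs it on a few links, assuming the page being scraped is the site root. It also shows that the standard library's urljoin() leaves mailto: links untouched, whereas plain string concatenation turns them into page URLs that do not exist. The mailto address is a made-up example.

```python
from urllib.parse import urljoin


def resolve_link(weblink, base_url, path):
    """Apply the if/elif rule above to one href value."""
    if weblink.startswith("/"):
        return base_url + weblink
    elif not weblink.startswith("https"):
        return path + weblink
    return weblink


# Assumes the page being scraped is the site root
base_url = "https://www.scrapingbee.com"
path = "https://www.scrapingbee.com/"

print(resolve_link("/#pricing", base_url, path))
# https://www.scrapingbee.com/#pricing
print(resolve_link("mailto:info@example.com", base_url, path))
# https://www.scrapingbee.com/mailto:info@example.com
print(urljoin(path, "mailto:info@example.com"))
# mailto:info@example.com
```

Since mailto: links never lead to another page, a crawler could also simply skip any href whose scheme is not http or https.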

Now let's complete the loop with the following code:

if weblink not in unscraped_url and weblink not in scraped_url:
    unscraped_url.append(weblink)

print(list_emails)

This appends links that are neither queued nor already scraped to unscraped_url. To view the results, print list_emails once the loop has finished.

Run the program.

What if the program doesn't work?

Are you getting MissingSchema, ConnectionError, or InvalidURL exceptions?

Some websites cannot be accessed, for one reason or another.

Don't worry! Let's see how to handle these errors.

Use a try/except block to skip over the errors, as shown below:

try:
    response = requests.get(url)
except (requests.exceptions.MissingSchema, requests.exceptions.ConnectionError, requests.exceptions.InvalidURL):
    continue

This block replaces the plain response = requests.get(url) line from Step 7, so it sits right above the new_emails line.
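The same skip-on-error idea can be sketched with the standard library's urllib.request instead of requests. This is a swapped-in technique so the example runs without extra installs; fetch_html is a hypothetical helper, and in the crawl loop you would call it and continue when it returns None.

```python
from urllib.request import urlopen


def fetch_html(url, timeout=10.0):
    """Return the page text, or None if the URL is malformed or unreachable."""
    try:
        with urlopen(url, timeout=timeout) as resp:
            # Assumes UTF-8 pages; undecodable bytes are replaced rather than raising
            return resp.read().decode("utf-8", errors="replace")
    except ValueError:
        # No scheme, e.g. "www.scrapingbee.com" (requests raises MissingSchema here)
        return None
    except OSError:
        # URLError, HTTPError, connection failures and timeouts all subclass OSError
        return None


print(fetch_html("www.scrapingbee.com"))  # None, and no network request is made
```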

Now run the program again.

Did the program work?

Or does it keep running for several hours without finishing?

The program searches and extracts all the URLs from the given website. It also follows links to websites on other domains. For example, the ScrapingBee website links to URLs such as https://seekwell.io/, https://codesubmit.io/, and more.

A well-built website can have up to 100 links on a single page, so the program may take several hours to work through all of them.

Sorry about that. You have to face this problem to get your target emails.

Bye bye, the article ends here…

No, I'm just joking!

Fret not! I'll give you the best solution in the next step.

Step 8: Fix the Code Issues.

Here is the solution code for you:

if base_url in weblink:  # code1
    if ("contact" in weblink or "Contact" in weblink or "About" in weblink or "about" in weblink or 'CONTACT' in weblink or 'ABOUT' in weblink or 'contact-us' in weblink):  # code2
        if weblink not in unscraped_url and weblink not in scraped_url:
            unscraped_url.append(weblink)

First, code1 keeps only links that contain the base URL, which prevents the program from scraping websites on other domains.

Since most emails are listed on the Contact Us and About pages, only links to those pages are followed (see code2). Other pages are ignored.

Finally, links that have not been scraped yet are added to the unscraped_url deque.
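Putting Steps 4 to 8 together, here is a compact, offline-testable sketch of the direct method. It is not the article's exact code: it swaps in the standard library's html.parser for Beautiful Soup and urljoin() for the manual base_url/path logic, compares hosts instead of testing base_url in weblink, and takes a hypothetical fetch(url) callable so that a fake in-memory site can stand in for real HTTP requests.

```python
import re
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit

EMAIL_RE = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")
KEYWORDS = ("contact", "about")  # pages most likely to list emails (code2)


class _Links(HTMLParser):
    """Collect the href values of <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            self.hrefs.append(href)


def crawl_emails(start_url, fetch, max_pages=50):
    """Breadth-first crawl of start_url's host, following only contact/about links.

    fetch(url) is a hypothetical callable returning the page HTML, or None on error.
    """
    host = urlsplit(start_url).netloc
    unscraped_url = deque([start_url])
    scraped_url, list_emails = set(), set()
    while unscraped_url and len(scraped_url) < max_pages:
        url = unscraped_url.popleft()
        scraped_url.add(url)
        html = fetch(url)
        if html is None:
            continue  # unreachable or malformed URL: skip it
        list_emails.update(EMAIL_RE.findall(html))
        parser = _Links()
        parser.feed(html)
        for href in parser.hrefs:
            weblink = urljoin(url, href)
            if urlsplit(weblink).netloc != host:  # code1: stay on the same site
                continue
            if not any(k in weblink.lower() for k in KEYWORDS):  # code2
                continue
            if weblink not in unscraped_url and weblink not in scraped_url:
                unscraped_url.append(weblink)
    return list_emails


# Usage with a fake in-memory site (hypothetical pages)
pages = {
    "https://example.com/": '<a href="/about">About</a><a href="/blog">Blog</a>'
                            '<a href="https://other.org/contact">Partner</a>',
    "https://example.com/about": 'Team: jane@example.com <a href="contact-us">Contact</a>',
    "https://example.com/contact-us": "Write to info@example.com or sales@partner.org.",
    "https://example.com/blog": "blog@example.com",
}
print(sorted(crawl_emails("https://example.com/", pages.get)))
# ['info@example.com', 'jane@example.com', 'sales@partner.org']
```

The blog page is never fetched because its URL contains neither keyword, and the partner link is skipped because it points to another host. The max_pages cap is an extra safety limit, not part of the original program.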

Step 9: Export the Email Addresses to a CSV File.

Finally, we can save the email addresses in a CSV file (email2.csv) through a pandas DataFrame.

url_name = "{0.netloc}".format(elements)
col = "List of Emails " + url_name
df = pd.DataFrame(sorted(list_emails), columns=[col])
s = get_fld(base_url)
# regex=False treats the domain literally, so "." does not match any character
df = df[df[col].str.contains(s, regex=False)]
df.to_csv('email2.csv', index=False)

We use get_fld to keep only the emails that belong to the first-level domain of the base URL. The s variable holds that first-level domain; for example, for https://www.scrapingbee.com it is scrapingbee.com.

Only emails containing the website's first-level domain name are kept in the DataFrame; emails from domains that don't belong to the base URL are dropped. Finally, the DataFrame is written to a CSV file.
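A pandas-free sketch of this step, using the standard csv module, shows one design choice worth considering: matching on the domain at the end of the address instead of a substring test. With str.contains("abbott.com"), an address at notabbott.com would also be kept. filter_by_domain is a hypothetical helper and the emails are made up.

```python
import csv
import io


def filter_by_domain(emails, domain):
    """Keep emails whose domain is `domain` or one of its subdomains."""
    keep = []
    for email in sorted(emails):
        host = email.rsplit("@", 1)[-1].lower()
        if host == domain or host.endswith("." + domain):
            keep.append(email)
    return keep


list_emails = {"info@abbott.com", "press@us.abbott.com", "someone@gmail.com", "x@notabbott.com"}
kept = filter_by_domain(list_emails, "abbott.com")
print(kept)  # ['info@abbott.com', 'press@us.abbott.com']

# One email per row under a header, as in the pandas version
buffer = io.StringIO()  # use open("email2.csv", "w", newline="") to write a real file
writer = csv.writer(buffer)
writer.writerow(["List of Emails www.abbott.com"])
writer.writerows([e] for e in kept)
print(buffer.getvalue())
```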

As stated earlier, a web admin can maintain up to 100 links per page.

Because a typical website has more than 30 links on each page, the program can still take a while to finish. If you believe it has extracted enough emails, you can stop it manually with Ctrl+C. Wrapping the loop in try/except KeyboardInterrupt saves the emails collected so far before raising SystemExit, as shown below:

try:
    while len(unscraped_url):
        …
        for tag in soup.find_all("a"):
            …
            if base_url in weblink:
                if ("contact" in weblink or "Contact" in weblink or "About" in weblink or "about" in weblink or 'CONTACT' in weblink or 'ABOUT' in weblink or 'contact-us' in weblink):
                    if weblink not in unscraped_url and weblink not in scraped_url:
                        unscraped_url.append(weblink)

    # The crawl finished normally: save the emails
    url_name = "{0.netloc}".format(elements)
    col = "List of Emails " + url_name
    df = pd.DataFrame(sorted(list_emails), columns=[col])
    s = get_fld(base_url)
    df = df[df[col].str.contains(s, regex=False)]
    df.to_csv('email2.csv', index=False)

except KeyboardInterrupt:
    # Ctrl+C was pressed: save what has been collected so far, then exit
    url_name = "{0.netloc}".format(elements)
    col = "List of Emails " + url_name
    df = pd.DataFrame(sorted(list_emails), columns=[col])
    s = get_fld(base_url)
    df = df[df[col].str.contains(s, regex=False)]
    df.to_csv('email2.csv', index=False)
    print("Program terminated manually!")
    raise SystemExit
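The save code above appears twice. A try/finally block is one way to run it exactly once, whether the loop ends normally or the user presses Ctrl+C. This is a minimal sketch with hypothetical step and save callables standing in for one crawl iteration and the CSV export; unlike the article's version, it returns a flag instead of raising SystemExit.

```python
def run_until_interrupted(step, save):
    """Call step() until it returns False or Ctrl+C is pressed, then always call save().

    step and save are hypothetical callables: one crawl iteration and the CSV export.
    Returns True if the run was interrupted manually.
    """
    interrupted = False
    try:
        while step():
            pass
    except KeyboardInterrupt:
        interrupted = True
        print("Program terminated manually!")
    finally:
        save()  # runs after a normal finish and after Ctrl+C
    return interrupted
```

In the scraper, step would process one URL from unscraped_url and return bool(unscraped_url), and save would contain the DataFrame and to_csv lines.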

Run the program and enjoy it…

Let's see what our fantastic email scraper application produced. The website I entered is www.abbott.com.

Output:

Method 2: Indirect Email Extraction

In the second method, you'll learn the steps to extract email addresses from Google.com search results.

Step 1: Install Libraries.

Using the pip command, install the third-party Python libraries below. The re and time modules ship with Python's standard library, so they don't need to be installed.

- bs4 is Beautiful Soup, used to parse the Google result pages.
- The pandas module stores the emails in a DataFrame for further processing.
- You can use the regular expression module (re) to match the email address format.
- The requests library sends HTTP requests.
- You can use the tld library to keep only relevant emails.
- The time module delays the scraping of pages.

pip install bs4
pip install pandas
pip install requests
pip install tld

Step 2: Import Libraries.

Import the libraries.

from bs4 import BeautifulSoup
import pandas as pd
import re
import requests
from tld import get_fld
import time

Step 3: Construct the Search Query.

The search query is written in the format “@websitename.com”.

Prompt the user to enter the website's domain name.

user_keyword = input("Enter the Website Name: ")

user_keyword = '"@' + user_keyword + '"'

As the code above shows, user_keyword now has the form "@websitename.com", wrapped in opening and closing double quotes so that Google searches for that exact phrase.
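The query construction and the slicing used later in Step 6 can be checked together. build_query is a hypothetical helper around the expression above, and scrapingbee.com is just the example domain.

```python
def build_query(website_name):
    """Wrap '@domain' in double quotes, as in the code above."""
    return '"@' + website_name + '"'


user_keyword = build_query("scrapingbee.com")
print(user_keyword)       # "@scrapingbee.com"

# Step 6 recovers the domain by slicing off '"@' at the front and '"' at the end
n = len(user_keyword) - 1
print(user_keyword[2:n])  # scrapingbee.com
```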

Step 4: Define Variables.

Before moving on to the heart of the program, let's set up the variables.

page = 0
list_email = set()

The page variable lets you move through multiple Google search result pages, and the list_email set stores the extracted emails.

Step 5: Request the Google Page.

In this step, you'll learn how to build a Google search URL from the user's keyword and request it.

The main part of the code starts as follows:

while page <= 100:
    print("Searching Emails in page No " + str(page))
    time.sleep(20.00)
    google = "https://www.google.com/search?q=" + user_keyword + "&ei=dUoTY-i9L_2Cxc8P5aSU8AI&start=" + str(page)
    response = requests.get(google)
    print(response)

Let's examine what each line of code does.

- The while loop lets the email extraction bot retrieve emails up to a specific number of pages; with page <= 100 and a step of 10, that is 11 result pages.
- The code prints the number of the Google page being extracted. The first page is represented by page number 0, the second by page 10, the third by page 20, and so on.
- To avoid having our IP blocked by Google, we slow the program down by 20 seconds per request.

Before creating the google variable, let's learn more about the Google search URL.

Suppose you search for the keyword “Germany” on google.com. The Google search URL will then be:

https://www.google.com/search?q=germany

If you click through to the second page of the Google search results, the link will be:

https://www.google.com/search?q=germany&ei=dUoTY-i9L_2Cxc8P5aSU8AI&start=10

How does that link work?

- The user keyword is inserted after “q=”, and the page number is added after “start=”, as shown in the google variable above.
- Next, request the Google page and print the result to check whether it works. A 200 response code means the page was accessed successfully. A 429 response code means you have hit Google's request limit and may need to wait around two hours before making more requests.

Step 6: Extract the Email Addresses.

In this step, you'll learn how to extract email addresses from the contents of the Google search results.

# (still inside the while loop from Step 5)
soup = BeautifulSoup(response.text, 'html.parser')

new_emails = re.findall(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b", soup.text, re.I)

list_email.update(new_emails)

page = page + 10

Beautiful Soup parses the web page, and soup.text gives the text content of the HTML page.

The regex findall() function collects the email addresses, as shown above, and the new emails are added to the list_email set. Finally, page is increased by 10 to move to the next results page.

n = len(user_keyword)-1
base_url = "https://www." + user_keyword[2:n]
col = "List of Emails " + user_keyword[2:n]
df = pd.DataFrame(sorted(list_email), columns=[col])
s = get_fld(base_url)
df = df[df[col].str.contains(s, regex=False)]
df.to_csv('email3.csv', index=False)

Finally, the lines above, which run after the loop, save the target emails to a CSV file. The slice user_keyword[2:n] starts at index 2, after the leading "@, and stops before the closing quote, leaving just the domain name.

Run the program and see the output.

Method 1 vs. Method 2

Can we decide which approach is more effective for building an email list: Method 1, Direct Email Extraction, or Method 2, Indirect Email Extraction? Both email lists were generated from the website abbott.com.

Let's compare the two email lists extracted using Methods 1 and 2.

- From Method 1, the extractor retrieved 60 emails.
- From Method 2, the extractor retrieved 19 emails.
- 17 of the emails found by Method 2 are not included in Method 1's list.
- These emails are employee-specific rather than company-wide. Moreover, Method 1 found more employee emails.

Thus, we can't recommend one method over the other. Both methods produce new email addresses, so using both will grow your email list.

Summary

Building an email list is essential for companies and freelancers alike to increase sales and leads.

This article offers instructions for using Python to retrieve email addresses from websites.

It presents the two best methods for obtaining email addresses.

Finally, the two methods are compared to arrive at a recommendation.

The first approach extracts emails directly from any website, and the second extracts email addresses using Google.com.

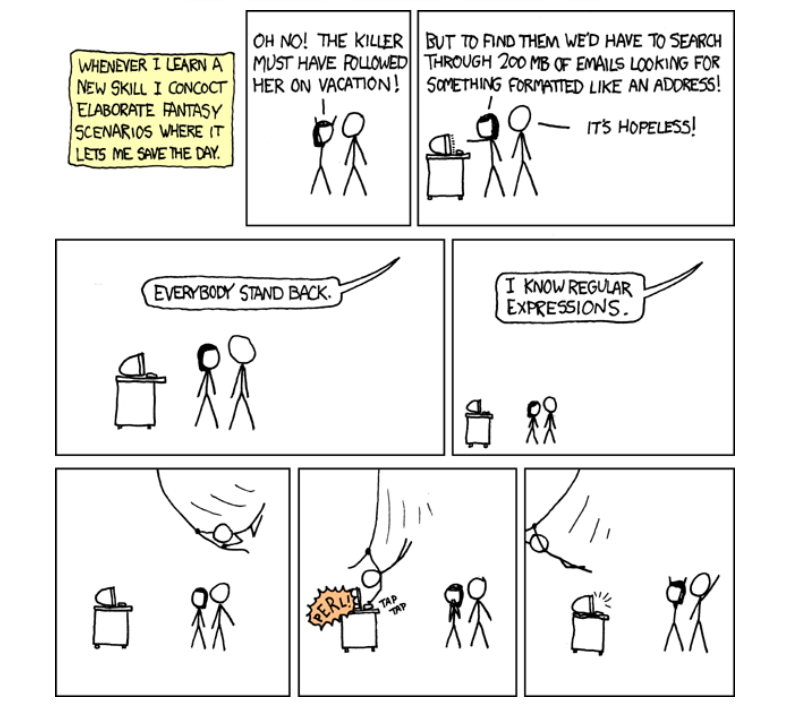

Regex Humor