How to avoid memory issues?

A typical memory layout of a running program may be as follows:

- Static memory: static size, static allocation (compile time), for global variables and static local variables.

- Stack memory (Call Stack): static size, dynamic allocation (run time), for local variables.

- Heap memory: dynamic size, dynamic allocation (run time), for variable-sized objects. In some programming languages, it is managed by the programmer.
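To make the three regions concrete, here is a minimal C sketch showing where each kind of variable lives. The names `global_total`, `next_id` and `make_squares` are hypothetical and exist only for illustration:

```c
#include <stdlib.h>

/* Hypothetical example names; a sketch, not a library API. */

int global_total = 0;          /* static memory: global variable, lives for the whole run */

int next_id(void) {
    static int id = 0;         /* static memory: static local, keeps its value between calls */
    return ++id;
}

/* Returns a heap array of the first n squares (the caller must free it), or NULL on failure. */
int *make_squares(size_t n) {
    int *squares = malloc(n * sizeof *squares);  /* heap: lives until free() is called */
    if (squares == NULL) return NULL;
    for (size_t i = 0; i < n; i++) {
        int square = (int)(i * i);   /* stack: local variable, gone when the loop body ends */
        squares[i] = square;
        global_total += square;
    }
    return squares;  /* returning heap memory is fine; returning the address of a local would not be */
}
```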

For all data, memory must be allocated (i.e., memory space must be reserved).

Great! We now know how and when our program uses memory!

Now, let's see how programming languages manage (allocate and free) Heap memory!

There are several types of memory management:

- Manual: C, C++

- Manual retain-release (MRR): Objective-C

- Automatic Reference Counting (ARC): Objective-C, Swift

- Garbage Collection (fully automatic management): Java, JavaScript

- Ownership: Rust

Let's take a quick look inside the magic of each option!

Manual memory management

In C, functions such as malloc() are used to dynamically allocate memory from the Heap. When the memory is no longer needed, the pointer is passed to free(), which deallocates the memory so that it can be used for other purposes.

#include <stdlib.h>

int *array = malloc(num_items * sizeof(int));

malloc() tries to find unused memory that is large enough to hold the requested number of bytes and reserves it. If it cannot, it returns NULL instead of terminating the program, so the result must be checked before it is used.

Memory obtained with malloc() is not deallocated automatically. It must be deallocated explicitly using free().

Forgetting to deallocate it leads to memory leaks and, eventually, to running out of memory!

We must not use a freed pointer unless it has been reassigned or reallocated!
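A minimal sketch of the allocate, check, use, free cycle described above, built around the `array` from the snippet. The helper `sum_with_heap_array` is hypothetical:

```c
#include <stdlib.h>

/* Hypothetical helper; a sketch, not a library API.
   Sums 1..num_items using a heap-allocated array.
   Returns -1 if the allocation fails (malloc returns NULL in that case). */
long sum_with_heap_array(size_t num_items) {
    int *array = malloc(num_items * sizeof(int));
    if (array == NULL) {
        return -1;                    /* check before use: malloc does not abort on failure */
    }
    for (size_t i = 0; i < num_items; i++) {
        array[i] = (int)(i + 1);
    }
    long sum = 0;
    for (size_t i = 0; i < num_items; i++) {
        sum += array[i];
    }
    free(array);                      /* release exactly once, when no longer needed */
    array = NULL;                     /* never read or write through a freed pointer */
    return sum;
}
```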

Wow, isn't that hard?

Let's take a look inside C++!

In C++, memory management is done using the new and delete operators. new is used to allocate memory at run time. delete deallocates the reserved memory.

As with C, we must be careful not to forget to free memory and to avoid access errors!

Despite the difficulty of managing memory manually, the advantage is that we know the exact needs of our program, and we can free the memory immediately after its use.

We also make sure that objects exist as long as they should, but no longer.

You can also build your own automatic way of managing memory on top of low-level memory management functions!
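As an illustration of building your own scheme on top of malloc() and free(), here is a minimal arena (bump) allocator sketch. The `Arena` type and its functions are hypothetical, not a standard API; the idea is that many small allocations are released together with a single free() call:

```c
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical arena allocator; a sketch, not a library API. */
typedef struct {
    unsigned char *base;
    size_t capacity;
    size_t used;
} Arena;

int arena_init(Arena *a, size_t capacity) {
    a->base = malloc(capacity);
    a->capacity = (a->base != NULL) ? capacity : 0;
    a->used = 0;
    return a->base != NULL;
}

void *arena_alloc(Arena *a, size_t size) {
    size_t align = _Alignof(max_align_t);
    size_t start = (a->used + align - 1) / align * align;  /* round up to keep allocations aligned */
    if (start > a->capacity || size > a->capacity - start) {
        return NULL;                                         /* arena exhausted */
    }
    a->used = start + size;
    return a->base + start;
}

void arena_release(Arena *a) {
    free(a->base);      /* one call frees every object allocated from the arena */
    a->base = NULL;
    a->capacity = 0;
    a->used = 0;
}
```

The trade-off: individual objects cannot be freed early, but there is exactly one free() to remember.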

From Manual Retain Release (MRR) to Automatic Reference Counting (ARC)

iOS and OS X apps achieve memory management through reference counting:

- When we claim ownership of an object, we increase its reference count.

- When we're done with the object, we decrease its reference count.

- As soon as the count reaches zero, the object can be destroyed and its memory reclaimed.

Once upon a time, with Objective-C, we manually managed an object's reference count by calling specific memory-management methods:

- alloc: increases the reference count by one (own an object).

- retain: increases the reference count by one (take ownership of an object).

- release, autorelease: decrease the reference count by one (relinquish ownership of an object); autorelease defers the decrement until the autorelease pool is drained.

This is called Manual Retain Release (MRR)!
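The bookkeeping behind MRR can be imitated in plain C. In this hypothetical sketch (`Counted`, `counted_alloc`, `counted_retain` and `counted_release` are illustrative names, not the Objective-C runtime), each function plays the role of alloc, retain and release:

```c
#include <stdlib.h>

/* Hypothetical reference-counted object; a sketch, not the Objective-C runtime. */
typedef struct {
    int refcount;
    int value;
} Counted;

static int live_objects = 0;               /* for illustration: how many objects are alive */

Counted *counted_alloc(int value) {        /* like alloc: the count starts at 1 */
    Counted *obj = malloc(sizeof *obj);
    if (obj == NULL) return NULL;
    obj->refcount = 1;
    obj->value = value;
    live_objects++;
    return obj;
}

Counted *counted_retain(Counted *obj) {    /* like retain: claim ownership */
    obj->refcount++;
    return obj;
}

void counted_release(Counted *obj) {       /* like release: relinquish ownership */
    if (--obj->refcount == 0) {
        live_objects--;
        free(obj);                         /* destroyed as soon as the count reaches zero */
    }
}
```

Every retain must be balanced by exactly one release: one release too few leaks the object, one too many frees it while someone still holds a pointer to it.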

It's our job to claim and relinquish ownership of every object in the program:

- If we forget to release an object, its underlying memory is never freed, resulting in a memory leak (we'll see this in more detail later).

- If we release an object too many times, it results in a dangling pointer (we'll see this in more detail later).

- In both cases, the program can eventually crash.

It's hard to keep the balance between every alloc, retain, copy and release or autorelease!

Fortunately, with newer versions of Objective-C and Swift, we have moved to ARC!

Automatic Reference Counting works exactly the same way as MRR, but it automatically inserts the appropriate memory-management calls for us. This means that we no longer call retain, release, or autorelease manually. Whoa!

Automatic Reference Counting lets us almost completely forget about memory management. The idea is to focus on high-level functionality instead of the underlying memory management.

You can find more details about ARC here. Enjoy!

Aha, that's a big step toward managing memory automatically, but it's not the only way! Let's continue our discovery!

Garbage collection

Garbage Collection (GC) is the technique used for automatic memory management in languages like Java and JavaScript.

In Java, objects are allocated using the new operator.

To simplify how a GC works: it's like having a background thread that runs periodically to analyze memory usage and try to free unused objects.

Basically, every GC focuses on two areas:

- Find all objects that are still alive or in use (marking reachable objects).

- Get rid of everything else: the supposedly dead and unused objects (removing unused objects, or sweeping).

This algorithm is called Mark and Sweep. Gotcha!
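Here is a toy mark-and-sweep collector in C, assuming a tiny fixed-size heap where objects refer to each other by index. It is a sketch of the idea only (the names are hypothetical); real collectors are far more sophisticated:

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical toy heap; a sketch, not a real garbage collector. */
#define MAX_OBJECTS 8
#define MAX_REFS 2

/* Each object may reference up to MAX_REFS other objects by index (-1 = none). */
typedef struct {
    bool in_use;   /* allocated */
    bool marked;   /* reached during the mark phase */
    int refs[MAX_REFS];
} Object;

static Object heap[MAX_OBJECTS];

int gc_new(void) {
    for (int i = 0; i < MAX_OBJECTS; i++) {
        if (!heap[i].in_use) {
            heap[i] = (Object){ .in_use = true, .marked = false, .refs = { -1, -1 } };
            return i;
        }
    }
    return -1;  /* heap full */
}

static void mark(int i) {
    if (i < 0 || heap[i].marked) return;   /* no reference, or already visited (handles cycles) */
    heap[i].marked = true;
    for (int r = 0; r < MAX_REFS; r++) mark(heap[i].refs[r]);
}

/* Marks everything reachable from the roots, then sweeps the rest.
   Returns the number of objects freed. */
int gc_collect(const int *roots, size_t nroots) {
    for (size_t r = 0; r < nroots; r++) mark(roots[r]);   /* mark phase */
    int freed = 0;
    for (int i = 0; i < MAX_OBJECTS; i++) {               /* sweep phase */
        if (heap[i].in_use && !heap[i].marked) {
            heap[i].in_use = false;
            freed++;
        }
        heap[i].marked = false;                           /* reset for the next collection */
    }
    return freed;
}
```

Because marking starts from the roots, a group of objects that only reference each other (a cycle) is still swept once nothing reachable points to it; plain reference counting could not reclaim such a cycle.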

Well, what can we notice from all these definitions?

- The GC is tied to the runtime, not to the programming language.

- In practice, the GC does not run at regular times; it can run at unpredictable moments: either after a certain amount of time has passed, or when the runtime sees available memory getting low.

- This implies that objects are not necessarily released at the exact moment they're no longer used.

- Normal program execution is often suspended, at least partially, while the garbage collection algorithm runs in order to find what's used and clean up what's unused.

Whoa! It's not that magical!

To be honest, I don't like GC because of this indeterminism. I prefer the ARC approach, an ahead-of-time technique, rather than a runtime job that may slow down program execution.

Garbage collection vs ARC

With ARC, the compiler injects code into the executable that keeps track of object reference counts and automatically releases objects as needed, rather than having the runtime look for and dispose of unused objects in the background.

"Automatic Reference Counting (ARC). At compile time, it inserts into the object code messages retain and release which increase and decrease the reference count at run time, marking for deallocation those objects when the number of references to them reaches zero. ARC differs from tracing garbage collection in that there is no background process that deallocates the objects asynchronously at runtime. Unlike tracing garbage collection, ARC does not handle reference cycles automatically." (Automatic Reference Counting, Wikipedia)

Excellent! The bookkeeping is inserted at compile time, so destruction is deterministic (it happens as soon as the count reaches zero) and no background processing is needed!

Memory management vs memory safety

I think you're starting to realize that automatic doesn't imply safe:

- What if the GC arrives late to free memory?

- What if we use a variable at the same time it's being released by the GC (multi-threading)?

- What if ARC doesn't handle retain cycles well?

- What about temporary data?

- What about global variables?

- What if the input size exceeds the available memory?

Unfortunately, these cases cause many memory problems that lead to program crashes and sometimes to security violations!

In most cases, automatic memory management guarantees a certain degree of safety. However, this isn't sufficient, and we'll see why. So, let's move on to see what memory problems can occur and how we can avoid them!

Memory jargon

Before we begin, here is some important memory jargon:

A buffer is a place to store information temporarily while waiting for something else to process the data, or while we're processing it. For example, when input comes from the keyboard, it's stored in an input buffer until it's read and used by the application.

A pointer is a variable that stores the memory address of an object.

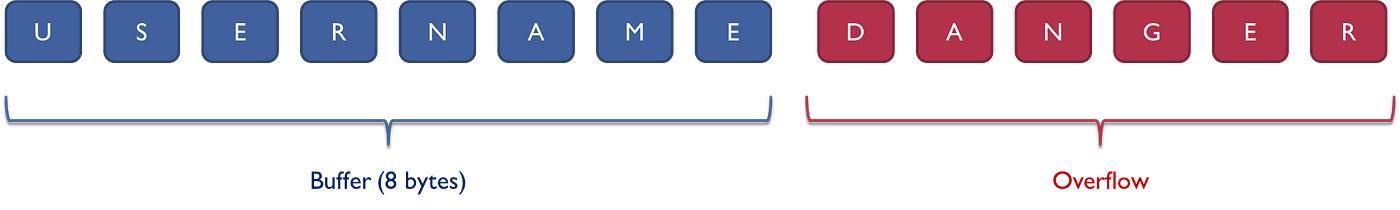

Buffer overflow

Aha, I think you already know this famous security vulnerability!

"A buffer overflow, or buffer overrun, is an anomaly where a program, while writing data to a buffer, overruns the buffer's boundary and overwrites adjacent memory locations." (Buffer overflow, Wikipedia)

In other words, a buffer overflow occurs when a program tries to put more data into a buffer than it can hold, or when a program tries to put data into a memory area past a buffer.

Ah, you see why I said that automatic doesn't imply safe!

Buffer overflow vulnerabilities can occur in code that:

- relies on external data to control its behavior.

- depends on data properties that are enforced outside the immediate scope of the code.

- uses the many memory manipulation functions in C and C++ that don't perform bounds checking and can easily overwrite the allocated bounds of the buffers they operate on.

Writing outside the bounds of a block of allocated memory can corrupt data, crash the program, or cause the execution of malicious code.

To avoid this kind of vulnerability, we need to review all code that accepts input from users (for example, via HTTP requests) and ensure it performs appropriate size and type checking on all such inputs.

It's also recommended to validate that array indices are within the proper bounds before using them to index an array.
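A minimal C sketch of both pieces of advice, assuming hypothetical helpers `safe_copy` (a bounds-checked alternative to `strcpy`) and `get_at` (an index check before reading, which also prevents over-reads):

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical helpers; a sketch, not a library API. */

/* Copies src into dst, whose capacity dst_size includes the terminating '\0'.
   Unlike strcpy, it refuses (returns false) instead of writing past dst. */
bool safe_copy(char *dst, size_t dst_size, const char *src) {
    size_t len = strlen(src);
    if (dst_size == 0 || len >= dst_size) {
        return false;                 /* would overflow: leave dst untouched */
    }
    memcpy(dst, src, len + 1);        /* copy the characters plus '\0' */
    return true;
}

/* Reads array[index] only if index is within bounds. */
bool get_at(const int *array, size_t length, size_t index, int *out) {
    if (index >= length) {
        return false;
    }
    *out = array[index];
    return true;
}
```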

This problem concerns all programming languages, whether memory is managed manually or automatically, because it comes from the way the code is written. In memory-safe languages, the runtime detects the out-of-bounds access and throws an exception (BufferOverflowException, ArrayIndexOutOfBoundsException, IndexOutOfBoundsException) instead of silently corrupting memory, but the bug is still there.

FYI, there's another buffer problem: buffer over-read, which occurs when reading a buffer makes the program go past the buffer's limit and read adjacent memory.

Null dereference

A null pointer dereference occurs when a NULL pointer is used as if it pointed to a valid memory area.

A null pointer should not be confused with an uninitialized pointer:

- An uninitialized variable is a variable that is declared but not set to a defined, known value before being used.

- A null pointer is a pointer that doesn't point to any memory location (pointing to nothing). On most platforms it is represented by address 0, which is never a valid object address.

A NULL pointer dereference can happen:

- when the program doesn't check for an error after calling a function that can return a NULL pointer if it fails.

- when the program doesn't properly anticipate or handle exceptional conditions that rarely occur during normal operation of the software.

- through a number of flaws, including race conditions and simple programming omissions.

In C, dereferencing a null pointer is undefined behavior. In Java, accessing a null reference throws a NullPointerException.

To avoid this kind of violation:

- before using a pointer, ensure it isn't equal to NULL.

- after freeing a pointer, set it to NULL so that later checks catch accidental reuse (calling free() on a NULL pointer is a harmless no-op).

- when working in a multi-threaded or otherwise asynchronous environment, make sure the proper locking APIs are used to lock before the if statement (the NULL check) and unlock when it has finished.

- in Java, a NullPointerException can be caught by error-handling code, but the preferred practice is to ensure that such exceptions never occur.

- use a defensive programming approach.
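A minimal sketch of the first point, assuming a hypothetical lookup function `find_entry` that returns NULL when it fails; the caller checks the result before dereferencing it:

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical key/value entry and lookup; a sketch, not a library API. */
typedef struct {
    const char *key;
    int value;
} Entry;

/* Returns a pointer to the matching entry, or NULL when the key is absent. */
const Entry *find_entry(const Entry *entries, size_t n, const char *key) {
    if (entries == NULL || key == NULL) {
        return NULL;                      /* defensive: reject NULL inputs */
    }
    for (size_t i = 0; i < n; i++) {
        if (strcmp(entries[i].key, key) == 0) {
            return &entries[i];
        }
    }
    return NULL;
}

/* The caller checks for NULL before dereferencing, and falls back to a default. */
int value_or_default(const Entry *entries, size_t n, const char *key, int fallback) {
    const Entry *e = find_entry(entries, n, key);
    return (e != NULL) ? e->value : fallback;
}
```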

For fun, see: Null pointer dereference in Google Chrome (cybersecurity-help.cz).

Dangling and wild pointers

Did you hear about the "Dangling pointer vulnerability using DOM plugin array" (Mozilla) and the "Dangling pointer vulnerability in nsTreeSelection" (Mozilla)?

"Security researcher Sergey Glazunov reported a dangling pointer vulnerability in the implementation of navigator.plugins in which the navigator object could retain a pointer to the plugins array even after it had been destroyed. An attacker could potentially use this issue to crash the browser and run arbitrary code on a victim's computer." (584512 - (CVE-2010-2767) nsPluginArray - memory corruption, mozilla.org)

A dangling pointer arises when the referenced object is deleted or deallocated while the pointer still points to its memory location. This creates a problem because the pointer points to memory that is no longer available. Oops!

A pointer that isn't properly initialized before its first use (not even to NULL) is called a wild pointer. An uninitialized pointer's behavior is completely undefined because it may point to some arbitrary location, which can cause the program to crash. That's why it's called a wild pointer. OMG!
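A minimal C sketch of two habits that keep pointers from becoming wild or dangling: initialize every pointer, and reset it to NULL right after freeing it. `FREE_AND_NULL` and `duplicate` are hypothetical names used for illustration:

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical helpers; a sketch, not a library API. */

/* Frees p and resets it to NULL so it cannot be used as a dangling pointer. */
#define FREE_AND_NULL(p) do { free(p); (p) = NULL; } while (0)

/* Returns a heap copy of s (the caller frees it), never the address of a local buffer. */
char *duplicate(const char *s) {
    char *copy = NULL;                 /* initialized: never a wild pointer */
    size_t len = strlen(s) + 1;
    copy = malloc(len);
    if (copy != NULL) {
        memcpy(copy, s, len);
    }
    return copy;                       /* heap memory outlives this call; a local array would not */
}
```

This only protects the pointer you reset: any other copy of the same address still dangles, which is why clear ownership rules matter.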

These issues happen in browsers and JavaScript engines written in C++ (one reason Mozilla has been rewriting parts of Firefox in Rust).

Using an automatic memory management mechanism (GC or ARC) greatly reduces the risk of encountering these pointer problems, but it doesn't prevent memory leaks, as we'll see below. Let's move on!

Stack overflow

I think you've encountered this error at least once if you're using JavaScript in the browser:

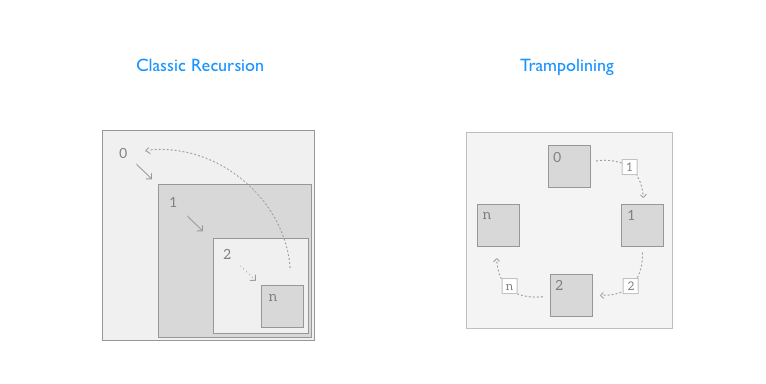

This error usually occurs with recursive calls and means that the maximum Stack size has been exceeded.

Here too, the GC doesn't help: the frames live on the call stack, and every pending call is still waiting for the call it made to return, so none of them can be released.

This problem isn't specific to JavaScript; it affects all programming languages that don't implement the Tail Call Optimization mechanism.

A tail call is when the last statement of a function is a call to another function. The optimization consists of having the tail-called function replace its parent function on the stack. This way, tail-recursive functions don't grow the stack.

The question now is: what can be done if the language doesn't implement Tail Call Optimization (TCO) by default?

Well, there's a magic pattern that can help us in this case, and it can be used in all languages to closely imitate the behavior of TCO: the Trampoline pattern.

A trampoline function wraps our recursive function in a loop. Under the hood, it calls the recursive function piece by piece until it no longer produces recursive calls:

// Trampoline: keeps calling the returned thunks until a non-function value comes back
const Trampoline = fn => (...args) => {
  let result = fn(...args)
  while (typeof result === 'function') {
    result = result()
  }
  return result
}

With Trampoline, we make almost no change to the normal recursive algorithm (no TCO needed!): the recursive call is simply returned wrapped in a function, and we skip the call-stack build-up entirely. It's just a new utility!

A wonderful pattern!
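C has neither closures nor guaranteed tail calls, but the same idea can be sketched there: instead of returning a thunk, each step returns a small struct describing the next call, and a loop keeps bouncing until a final value comes back. `Step`, `sum_step` and `trampoline_sum` are hypothetical names:

```c
#include <stdbool.h>

/* Hypothetical example; a sketch of the trampoline idea, not a library API.
   One "bounce": either a final value or the arguments for the next step.
   Here the computation sums 1..n, written as tail recursion. */
typedef struct {
    bool done;
    unsigned long long value;   /* valid when done */
    unsigned long long n, acc;  /* arguments for the next step when !done */
} Step;

/* Instead of calling itself, the step returns a description of the next call. */
static Step sum_step(unsigned long long n, unsigned long long acc) {
    if (n == 0) return (Step){ .done = true, .value = acc };
    return (Step){ .done = false, .n = n - 1, .acc = acc + n };
}

/* The trampoline: a loop that keeps bouncing until a final value comes back,
   so the call stack never grows, whatever the value of n. */
unsigned long long trampoline_sum(unsigned long long n) {
    Step s = sum_step(n, 0);
    while (!s.done) {
        s = sum_step(s.n, s.acc);
    }
    return s.value;
}
```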

Out of memory (OOM)

This error occurs when there's insufficient space to allocate an object in the Heap, and the Heap can't be expanded further. In this situation, the GC can't help!

An OOM error usually means that the program is doing something wrong, such as the following:

- holding onto objects too long

- trying to process too much data at a time

- having many global variables (a problem of function and variable scope)

For example, in JavaScript, references that are directly attached to the root (global or window) are always active (in use), and the GC can't clean them up!

So, what can we do to avoid OOM? Let's see!

To process large data:

- for backend applications using Node.js, we can use streams, pagination, and geospatial queries.

- for frontend applications using JavaScript and React, we can use techniques like windowing or pagination.

- in general, it's highly recommended to adopt these principles: process on demand, display on demand, and initially load and process only what is necessary (lazy evaluation).
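The same principle in C: rather than loading a whole input into memory, read it in fixed-size chunks. A minimal sketch, assuming a hypothetical `count_lines` helper that reads from any `FILE *` stream:

```c
#include <stdio.h>

/* Hypothetical helper; a sketch, not a library API.
   Counts lines by reading the stream in fixed-size chunks, so memory use
   stays constant however large the input is. */
long count_lines(FILE *in) {
    char chunk[4096];
    long lines = 0;
    int last = '\n';                  /* last byte seen; '\n' means "no partial line" */
    size_t n;
    while ((n = fread(chunk, 1, sizeof chunk, in)) > 0) {
        for (size_t i = 0; i < n; i++) {
            if (chunk[i] == '\n') lines++;
        }
        last = (unsigned char)chunk[n - 1];
    }
    if (last != '\n') lines++;        /* final line without a trailing newline */
    return lines;
}
```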

To avoid strong references, consider using these APIs: WeakMap and WeakSet (JavaScript), WeakHashMap (Java).

To cache data, we can adopt a mechanism such as LRU (least recently used), setting a maximum number of the most recently used items that we want to keep.
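A minimal fixed-capacity LRU cache sketch in C. The names are hypothetical, and eviction scans all slots (O(n)); a production cache would typically pair a hash map with a doubly linked list. The point is that memory use is capped at `LRU_CAPACITY` entries:

```c
#include <stdbool.h>

/* Hypothetical fixed-capacity int -> int LRU cache; a sketch, not a library API. */
#define LRU_CAPACITY 3

typedef struct {
    int key;
    int value;
    unsigned long last_used;   /* logical clock of the most recent access */
    bool used;
} Slot;

typedef struct {
    Slot slots[LRU_CAPACITY];
    unsigned long clock;
} LruCache;

bool lru_get(LruCache *c, int key, int *out) {
    for (int i = 0; i < LRU_CAPACITY; i++) {
        if (c->slots[i].used && c->slots[i].key == key) {
            c->slots[i].last_used = ++c->clock;   /* now the most recently used */
            *out = c->slots[i].value;
            return true;
        }
    }
    return false;
}

/* Picks the slot for key: its existing slot, else an empty one, else the LRU victim. */
static int lru_slot_for(const LruCache *c, int key) {
    for (int i = 0; i < LRU_CAPACITY; i++) {
        if (c->slots[i].used && c->slots[i].key == key) return i;
    }
    int victim = 0;
    for (int i = 0; i < LRU_CAPACITY; i++) {
        if (!c->slots[i].used) return i;
        if (c->slots[i].last_used < c->slots[victim].last_used) victim = i;
    }
    return victim;
}

void lru_put(LruCache *c, int key, int value) {
    int i = lru_slot_for(c, key);
    c->slots[i] = (Slot){ .key = key, .value = value, .last_used = ++c->clock, .used = true };
}
```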

To keep functions and variables well scoped, we can adopt a Functional Programming approach. In JavaScript, we should avoid global variables, window properties, and global listeners as much as possible.

Memory leaks

"While the GC effectively handles a good portion of memory, it doesn't guarantee a foolproof solution to memory leaking. The GC is pretty smart, but not flawless. Memory leaks can still sneak up. Memory leaks are a genuine problem in Java." (Understanding Memory Leaks in Java | Baeldung)

I like this explanation. It sums up everything I want to explain in this article: a GC is important but not sufficient. We shouldn't rely on the GC to clean everything up for us; we should help it do its job well!

I think you're surprised that we can encounter memory leaks despite the presence of a GC. Let's look together!

A memory leak is a situation where objects in the heap are no longer used, but the garbage collector is unable to remove them from memory, so they're unnecessarily kept alive. When does this situation occur?

In Java, memory leaks can occur due to:

- static fields

- unclosed resources (opening a new connection or a stream)

- overridden finalize() methods: whenever a class's finalize() method is overridden, objects of that class aren't garbage collected immediately. Instead, the GC queues them for finalization, which happens at a later point in time.

- ThreadLocal: when using ThreadLocal, each thread holds an implicit reference to its own copy of a ThreadLocal variable, instead of sharing the resource across multiple threads, for as long as the thread is alive.
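Unclosed resources are not specific to Java (where try-with-resources addresses them). In C, a common way to make sure every acquired resource is released on every path is a single `cleanup` label. A minimal sketch, assuming a hypothetical `sum_file` that reads one integer per line:

```c
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical helper; a sketch, not a library API.
   Sums one integer per line from the file at path. Returns 0 on success,
   -1 on failure. Every exit goes through `cleanup`, so the FILE handle
   and the heap buffer are released on all paths. */
int sum_file(const char *path, long *out) {
    int status = -1;            /* assume failure until everything succeeded */
    long total = 0;
    char *line = NULL;
    FILE *f = fopen(path, "r");
    if (f == NULL) goto cleanup;

    line = malloc(256);         /* a second resource that must also be released */
    if (line == NULL) goto cleanup;

    while (fgets(line, 256, f) != NULL) {
        total += strtol(line, NULL, 10);
    }
    if (ferror(f)) goto cleanup;

    *out = total;
    status = 0;

cleanup:
    free(line);                 /* free(NULL) is a no-op */
    if (f != NULL) fclose(f);
    return status;
}
```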

In JavaScript, memory leaks can occur due to:

- undeclared or accidental global variables

- forgotten setTimeout and setInterval calls

- out-of-DOM references or detached nodes: nodes that have been removed from the DOM but are still available in memory

- uncleaned DOM event listeners

- WebSocket subscriptions and API requests

- React components that perform state updates and run asynchronous operations, which can cause memory leaks if the state is updated after the component is unmounted.

Wow! That's scary, knowing that JS and Java memory management uses garbage collection!

Multithreading and race conditions

In many parallel programming models, processes/threads share a common address space, which they read and write asynchronously. In this model, all processes have equal access to shared memory.

A race condition occurs in a shared-memory program when two threads access the same variable in shared memory and at least one thread performs a write operation. The accesses are concurrent, so they may happen at the same time.

For information, it's safe for multiple threads to read a shared resource as long as none of them tries to modify it.

All systems that include a multiprocessing environment can be vulnerable to race condition attacks!

In a race condition, shared memory can be corrupted by threads.

Various mechanisms such as locks (synchronized) and semaphores can be used to control access to shared memory.

Java offers several data structures that allow concurrent access: DelayQueue, BlockingQueue, ConcurrentMap, ConcurrentHashMap.
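A minimal C sketch of protecting a shared counter with a lock, using POSIX threads (the mutex plays the role of Java's `synchronized` here). `worker` and `run_workers` are hypothetical names; without the mutex, `counter++` is a racy read-modify-write and increments can be lost:

```c
#include <pthread.h>
#include <stddef.h>

/* Hypothetical example; a sketch, not a library API.
   THREADS workers each increment a shared counter INCREMENTS times. */
#define THREADS 4
#define INCREMENTS 100000

static long counter = 0;
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;

static void *worker(void *arg) {
    (void)arg;
    for (int i = 0; i < INCREMENTS; i++) {
        pthread_mutex_lock(&counter_lock);    /* only one thread at a time past this point */
        counter++;                            /* read-modify-write: would race without the lock */
        pthread_mutex_unlock(&counter_lock);
    }
    return NULL;
}

/* Returns the final counter value, or -1 if not all threads could be started. */
long run_workers(void) {
    pthread_t threads[THREADS];
    int created = 0;
    counter = 0;
    while (created < THREADS &&
           pthread_create(&threads[created], NULL, worker, NULL) == 0) {
        created++;
    }
    for (int t = 0; t < created; t++) {
        pthread_join(threads[t], NULL);       /* wait for every started thread */
    }
    return created == THREADS ? counter : -1;
}
```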

What a journey into memory! It was very instructive!

We saw how a program's memory is laid out and how it's managed: manually, or automatically via ARC or GC.

We have seen that automatic memory management is important but not sufficient. Some memory issues can occur even in a runtime with a GC.

Buffer overflow, buffer over-read, null dereference, dangling and wild pointers, stack overflow, and out of memory (OOM) can affect programs in any programming language. They're related more to "unsafe" coding defects than to an internal problem in the programming language or the runtime.

The most important piece of advice is to take a defensive approach when writing code. A program should be able to run correctly even through unforeseen processes or unexpected inputs.

That's all, folks, for this journey. Happy learning!