Maintaining a service that processes over a billion events per day poses many learning opportunities. In an ecommerce landscape, our service needs to be ready to handle global traffic, flash sales, holiday seasons, failovers and the like. In this blog post, I’ll detail how I scaled our Server Pixels service to increase its event processing performance by 170%, from 7.75 thousand events per second per pod to 21 thousand events per second per pod.

Capturing a Shopify customer’s journey is critical to contributing insights into the marketing efforts of Shopify merchants. Similar to Web Pixels, Server Pixels grants Shopify merchants the freedom to activate and understand their customers’ behavioral data by sending this structured data from storefronts to marketing partners. However, unlike the Web Pixel service, Server Pixels sends these events through the server rather than client-side. This server-side data sharing has proven to be more reliable, allowing for better control and observability of outgoing data to our partners’ servers. Merchants benefit from this because they’re able to drive more sales at a lower customer acquisition cost (CAC). With regional opt-out privacy regulations built into our service, only customers who have allowed tracking will have their events processed and sent to partners. Key events in a customer’s journey on a storefront are captured, such as checkout completion, search submissions, and product views. Server Pixels is a service written in Go that validates, processes, augments, and then produces more than one billion customer events per day. However, managing such a large number of events brings problems of scale.

The Problem

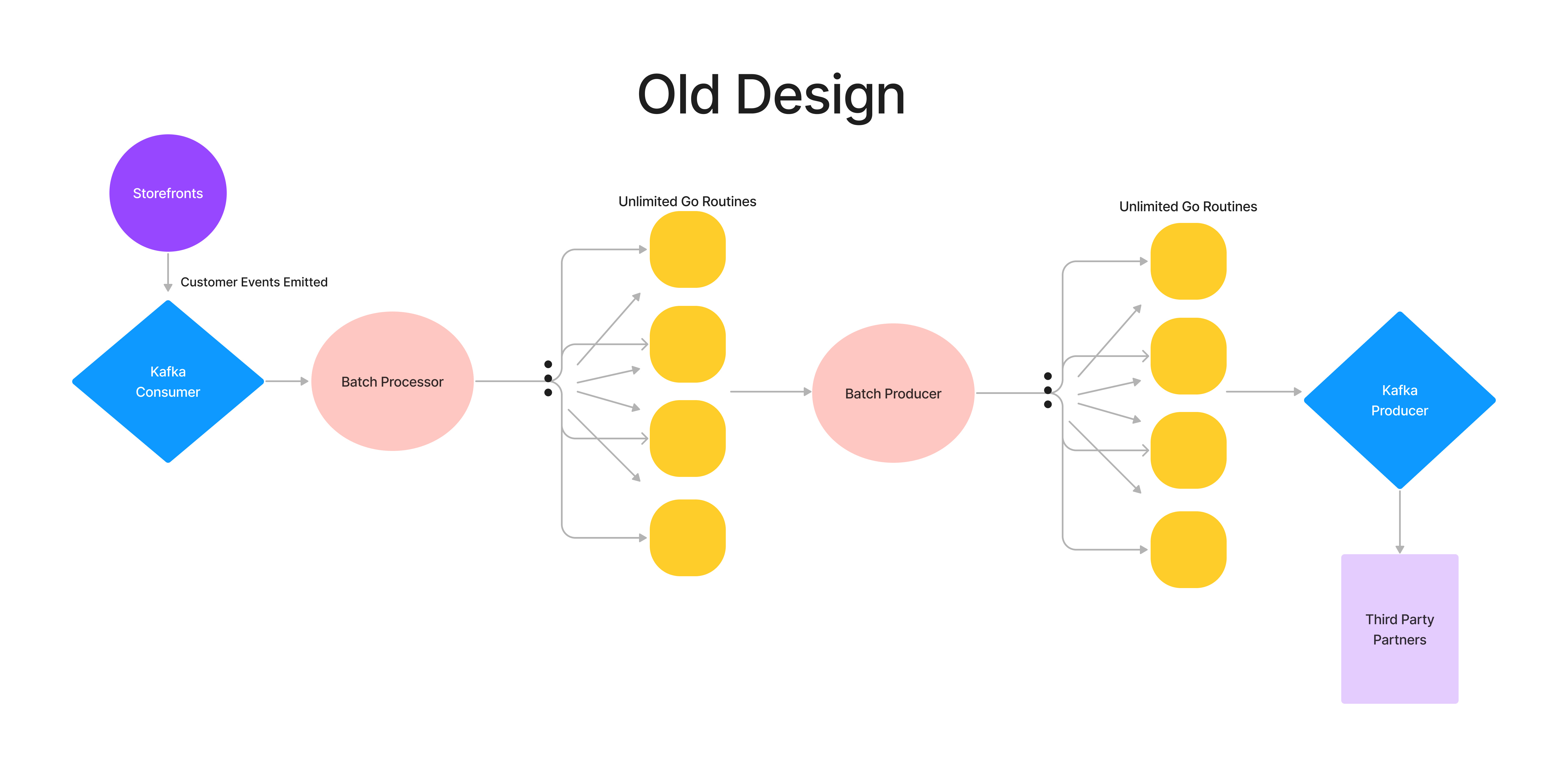

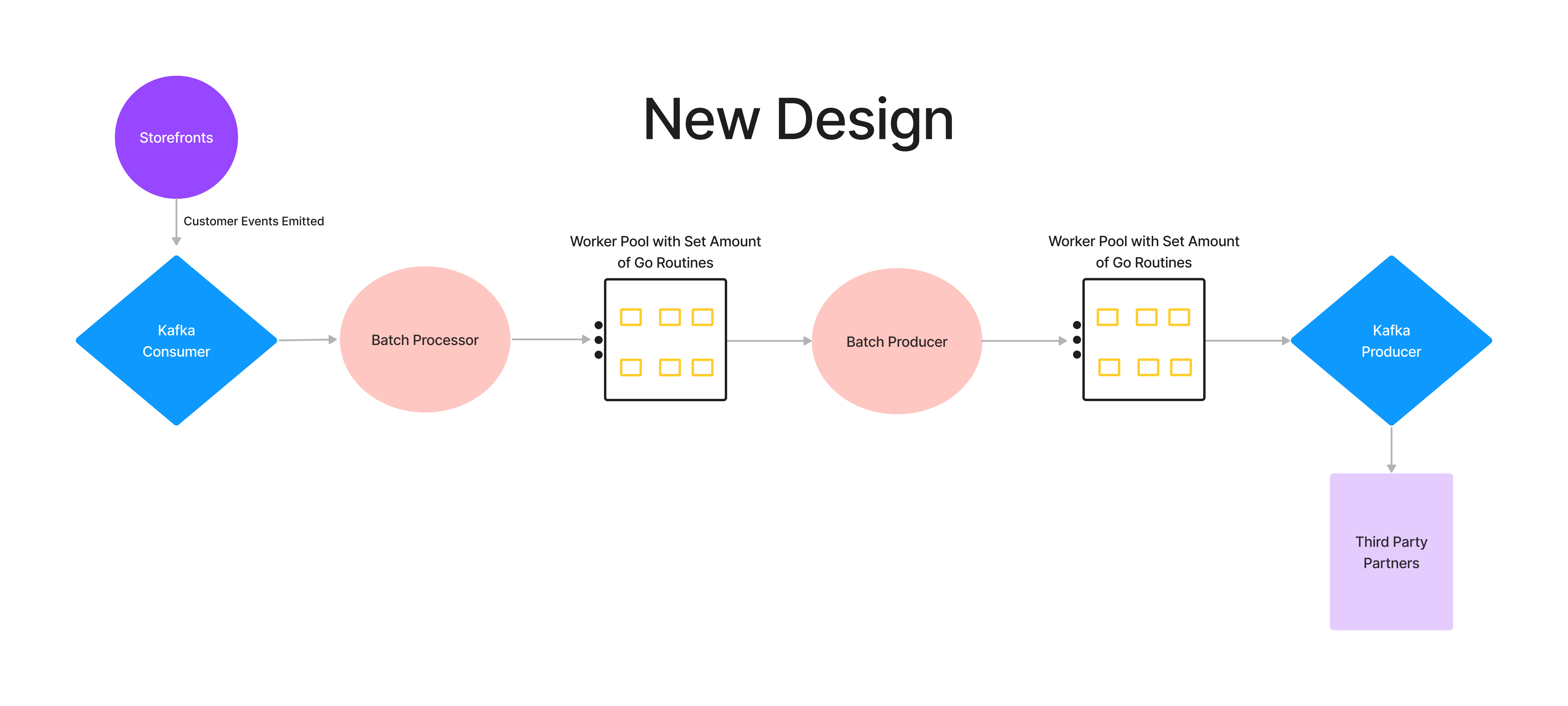

Server Pixels leverages Kafka infrastructure to manage the consumption and production of events. We began to have a problem with our scale when an increase in customer events triggered an increase in consumption lag for our Kafka input topic. Our service was susceptible to falling behind on events if any downstream components slowed down. As shown in the diagram, our downstream components process (parse, validate, and augment) and produce events in batches.

The problem with our original design was that an unbounded number of threads would get spawned whenever event batches needed to be processed or produced. So when our service received an increase in events, an unsustainable number of goroutines were created and ran concurrently.

Goroutines can be thought of as lightweight threads: functions or methods that run concurrently with other functions and methods. In a service, spawning an unbounded number of goroutines to execute an ever-growing queue of tasks isn’t ideal. The machine executing these tasks will continue to expend its resources, like CPU and memory, until it reaches its limit. Additionally, our team has a service level objective (SLO) of five minutes for event processing, so any delay in processing our data would push us past that deadline. In anticipation of three times the usual load for Black Friday Cyber Monday (BFCM), we needed a way for our service to work smarter, not harder.

Our solution? Go worker pools.

The Solution

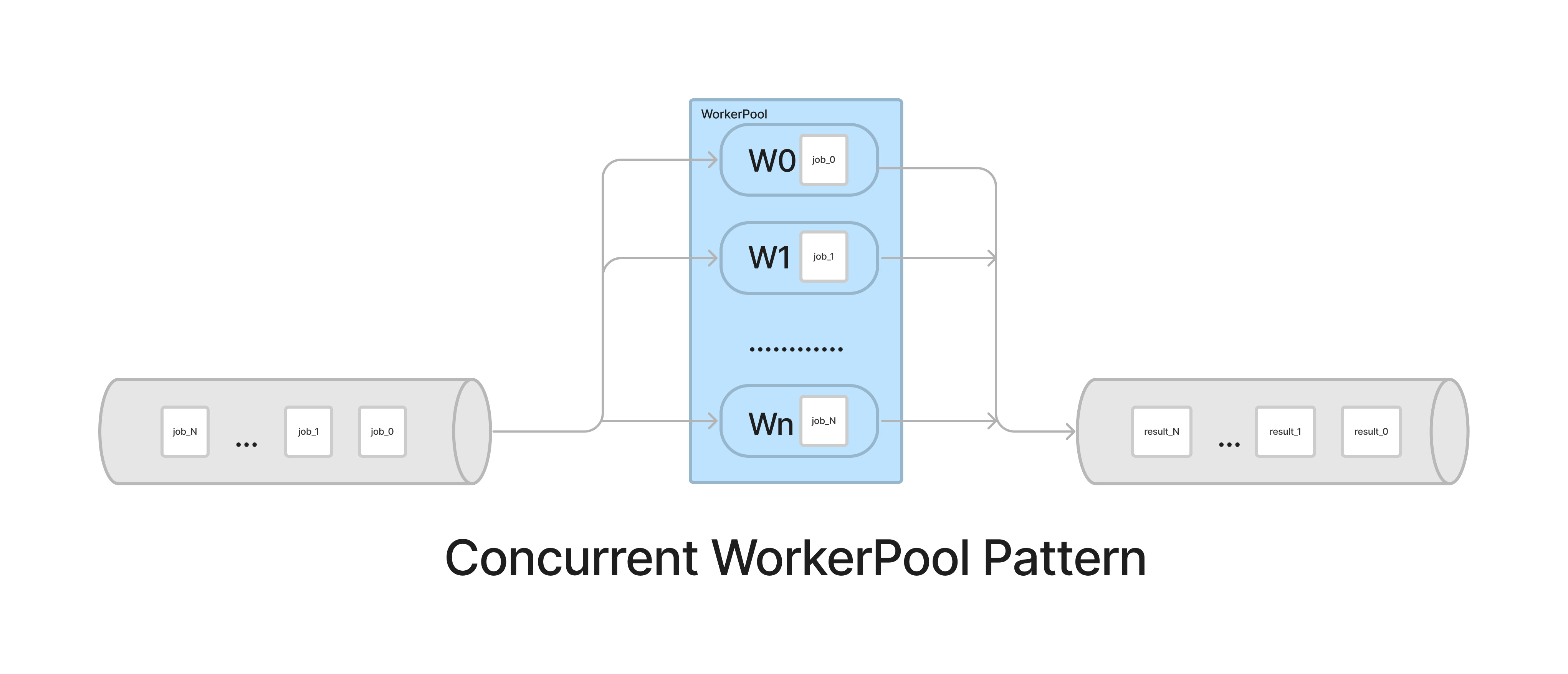

The worker pool pattern is a design in which a fixed number of workers are given a stream of tasks to process in a queue. The tasks stay in the queue until a worker is free to pick up a task and execute it. Worker pools are great for controlling the concurrent execution of a set of defined jobs. Because these workers cap the number of goroutines running concurrently, less stress is put on our system’s resources. This design also worked perfectly for scaling up in anticipation of BFCM without relying entirely on vertical or horizontal scaling.

When tasked with this new design, I was surprised at how intuitive the setup for worker pools was. The basis is creating a Go channel that receives a stream of jobs. You can think of Go channels as pipes that connect concurrent goroutines together, allowing them to communicate with each other. You send values into channels from one goroutine and receive those values in another goroutine. The Go workers retrieve their jobs from a channel as they become available, provided the worker isn’t busy processing another job. Concurrently, the results of these jobs are sent to another Go channel or to another part of the pipeline.
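To make the pipe analogy concrete, here is a minimal sketch of one goroutine sending values into a channel while another receives them. The function name sumOverChannel is made up for this illustration and is not part of the Server Pixels code.

```go
package main

import "fmt"

// sumOverChannel (hypothetical helper) sends each value into a channel from
// one goroutine and receives the values in the calling goroutine.
func sumOverChannel(values []int) int {
	ch := make(chan int)

	// Sender goroutine: pushes values into the pipe, then closes it so the
	// receiver's range loop knows no more values are coming.
	go func() {
		defer close(ch)
		for _, v := range values {
			ch <- v
		}
	}()

	// Receiver: reads until the channel is closed.
	total := 0
	for v := range ch {
		total += v
	}
	return total
}

func main() {
	fmt.Println(sumOverChannel([]int{1, 2, 3}))
}
```

Closing the channel from the sending side is what lets the receiving loop end on its own, which is the same mechanism the worker pool relies on later for shutdown.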

So let me take you through the logistics of the code!

The Code

I defined a Worker interface that requires a CompleteJobs function, which takes a Go channel of type Job.

The Job type takes the event batch, which is integral to completing the task, as a parameter. Other types, like NewProcessorJob, can embed this struct to fit different use cases of the specific task.
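As a rough sketch of what such a Job type and a specialized variant could look like, the example below uses struct embedding, which is how Go reuses fields across types. The Event type, the field names, and ProcessorJob are assumptions made for illustration; the original listing is not available here.

```go
package main

import "fmt"

// Event is a hypothetical stand-in for a customer event.
type Event struct {
	Name string
}

// Job carries the batch of events a worker needs to complete its task.
type Job struct {
	EventBatch []Event
}

// ProcessorJob (hypothetical) embeds Job and adds a field that only one
// stage of the pipeline cares about.
type ProcessorJob struct {
	Job
	Stage string
}

func main() {
	pj := ProcessorJob{
		Job:   Job{EventBatch: []Event{{Name: "checkout_completed"}}},
		Stage: "validate",
	}
	// Fields of the embedded Job are promoted, so pj.EventBatch works directly.
	fmt.Println(len(pj.EventBatch), pj.Stage)
}
```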

New workers are created using the function NewWorker. It takes workFunc as a parameter, which processes the jobs. This workFunc can be tailored to any use case, so we can use the same Worker interface for different components to do different types of work. The core of what makes this design powerful is that the Worker interface is shared among different components to do different types of tasks based on the Job spec.

CompleteJobs calls workFunc on each Job as it receives it from the jobs channel.
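The pieces described so far (the Worker interface, NewWorker, workFunc, and CompleteJobs) might fit together roughly as follows. This is a reconstruction under assumptions: the exact signatures, the unexported worker struct, the error handling, and the countEvents helper are guesses for illustration, not the production code.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// Job carries one batch of events (simplified to strings for this sketch).
type Job struct {
	EventBatch []string
}

// Worker is anything that can drain a channel of jobs.
type Worker interface {
	CompleteJobs(jobs <-chan Job, wg *sync.WaitGroup)
}

// worker is an assumed concrete implementation holding the work function.
type worker struct {
	workFunc func(Job) error
}

// NewWorker builds a Worker around a caller-supplied workFunc.
func NewWorker(workFunc func(Job) error) Worker {
	return &worker{workFunc: workFunc}
}

// CompleteJobs calls workFunc on every Job until the jobs channel is closed,
// then signals the WaitGroup that this worker is finished.
func (w *worker) CompleteJobs(jobs <-chan Job, wg *sync.WaitGroup) {
	defer wg.Done()
	for job := range jobs {
		if err := w.workFunc(job); err != nil {
			fmt.Println("job failed:", err)
		}
	}
}

// countEvents (hypothetical) runs one worker over the batches and returns
// how many events its workFunc saw.
func countEvents(batches [][]string) int64 {
	var processed int64
	w := NewWorker(func(j Job) error {
		atomic.AddInt64(&processed, int64(len(j.EventBatch)))
		return nil
	})

	jobs := make(chan Job)
	var wg sync.WaitGroup
	wg.Add(1)
	go w.CompleteJobs(jobs, &wg)

	for _, b := range batches {
		jobs <- Job{EventBatch: b}
	}
	close(jobs)
	wg.Wait()
	return atomic.LoadInt64(&processed)
}

func main() {
	fmt.Println(countEvents([][]string{{"a", "b"}, {"c"}}))
}
```

Because the behavior lives in workFunc, a validation stage and a producer stage can share this worker type and differ only in the function they pass to NewWorker.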

Now let’s tie it all together.

In our pipeline, I used workers to process events as follows. A jobs channel and a fixed number of workers, numWorkers, are initialized. Each worker is then poised to receive from the jobs channel in its CompleteJobs function, which runs in a goroutine. Putting go before the CompleteJobs call is what makes the function run in a goroutine!

As event batches get consumed in a for loop, each batch is converted into a Job that’s emitted to the jobs channel. Meanwhile, go worker.CompleteJobs(jobs, &producerWg) runs concurrently and receives these jobs.
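The wiring described above can be sketched like this. The names jobs, numWorkers, producerWg, and outputBatches come from the text; runPool, the completeJobs stand-in, and the choice to emit batch sizes as results are assumptions made to keep the example short.

```go
package main

import (
	"fmt"
	"sync"
)

// Job carries one batch of events (simplified to strings for this sketch).
type Job struct {
	EventBatch []string
}

// completeJobs is a compact stand-in for Worker.CompleteJobs: it handles
// jobs until the channel closes and reports each batch's size as its result.
func completeJobs(jobs <-chan Job, outputBatches chan<- int, wg *sync.WaitGroup) {
	defer wg.Done()
	for job := range jobs {
		outputBatches <- len(job.EventBatch)
	}
}

// runPool (hypothetical) starts numWorkers workers, feeds them every batch,
// and returns the total number of events they reported.
func runPool(batches [][]string, numWorkers int) int {
	jobs := make(chan Job)
	outputBatches := make(chan int)
	var producerWg sync.WaitGroup

	// A fixed number of goroutines, no matter how many batches arrive.
	for i := 0; i < numWorkers; i++ {
		producerWg.Add(1)
		go completeJobs(jobs, outputBatches, &producerWg)
	}

	// Convert each consumed batch into a Job; close the channels in order
	// once all jobs are sent and all workers have returned.
	go func() {
		for _, b := range batches {
			jobs <- Job{EventBatch: b}
		}
		close(jobs)
		producerWg.Wait()
		close(outputBatches)
	}()

	total := 0
	for n := range outputBatches {
		total += n
	}
	return total
}

func main() {
	batches := [][]string{{"a", "b"}, {"c"}, {"d", "e", "f"}}
	fmt.Println(runPool(batches, 2))
}
```

The number of goroutines doing work stays at numWorkers however large the backlog grows; extra batches simply wait on the jobs channel until a worker is free.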

But wait, how do the workers know when to stop processing events?

When the system is ready to be scaled down, wait groups are used to ensure that any existing tasks in flight are completed before the system shuts down. A WaitGroup is a type of counter in Go whose Wait method blocks the calling function until its internal counter becomes zero. As each worker is created, the WaitGroup counter is incremented with producerWg.Add(1). In the CompleteJobs function, wg.Done() is executed when the jobs channel is closed and jobs stop being received. Each call to wg.Done() decrements the WaitGroup counter by one, once per worker.

When a context cancel signal is received (signaled by <-ctx.Done()), the remaining batches are sent to the jobs channel so the workers can finish their execution. The jobs channel is then closed safely, enabling the workers to break out of the loop in CompleteJobs and stop processing jobs. At this point, the WaitGroup counters are zero and the outputBatches channel, where the results of the jobs get sent, can be closed safely.

The Improvements

Once deployed, the performance improvement from the new worker pool design was promising. I conducted load testing that showed that as more workers were added, more events could be processed on one pod. As mentioned before, in our previous implementation our service could only handle around 7.75 thousand events per second per pod in production without adding to our consumption lag.

My team initially set the number of workers to 15 each in the processor and producer. This introduced a processing lift of 66% (12.9 thousand events per second per pod). By upping the workers to 50, we increased our event load by 149% over the old design, resulting in 19.3 thousand events per second per pod. Currently, with further performance improvements, we can do 21 thousand events per second per pod. A 170% increase! This was a great win for the team and gave us the foundation to be adequately prepared for BFCM 2021, where we experienced a max of 46 thousand events per second!
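For readers who want to check the arithmetic, the lifts quoted above follow from the per-pod rates relative to the 7.75 thousand events per second baseline. The helper name percentIncrease is made up for this sketch, and because the published rates are rounded, the results agree with the quoted percentages only to within about a point.

```go
package main

import "fmt"

// percentIncrease returns how much larger current is than baseline, in percent.
func percentIncrease(baseline, current float64) float64 {
	return (current - baseline) / baseline * 100
}

func main() {
	const baseline = 7.75 // thousand events per second per pod, old design
	for _, rate := range []float64{12.9, 19.3, 21} {
		fmt.Printf("%.1fk events/s/pod: +%.1f%%\n", rate, percentIncrease(baseline, rate))
	}
}
```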

Go worker pools are a lightweight solution to speed up computation and allow concurrent tasks to be more performant. This Go worker pool design has been reused to work with other components of our service such as validation, parsing, and augmentation.

By using the same Worker interface for different components, we can scale out each part of our pipeline differently to meet its use case and expected load.

Cheers to more quiet BFCMs!

Kyra Stephen is a backend software developer on the Event Streaming – Customer Behavior team at Shopify. She is passionate about working on technology that impacts users and makes their lives easier. She also loves playing tennis, obsessing about music and being in the sun as much as possible.
