A detailed look at the performance of Python’s scikit-learn vs. Java’s Tribuo

Machine learning (ML) is important because it can derive insights and make predictions using an appropriate dataset. As the amount of data being generated increases globally, so do the potential applications of ML. Specific ML algorithms can be difficult to implement, since doing so requires significant theoretical and practical expertise.

Fortunately, many of the most useful ML algorithms have already been implemented and are bundled together into packages called libraries. Because many libraries are currently available, the best ones for performing ML need to be identified and studied.

Scikit-learn is a very well-established Python ML library widely used in industry. Tribuo is a recently open sourced Java ML library from Oracle. At first glance, Tribuo provides many important tools for ML, but there’s limited published research studying its performance.

This project compares the scikit-learn library for Python and the Tribuo library for Java. The focus of this comparison is on the ML tasks of classification, regression, and clustering. This includes comparing the results from training and testing several different models for each task.

This study showed that the new Tribuo ML library is a viable, competitive offering and should certainly be considered when ML solutions in Java are implemented.

This article explains the methodology of this work; describes the experiments which compare the two libraries; discusses the results of the experiments and other findings; and, finally, presents the conclusions. This article assumes readers have familiarity with ML’s goals and terminology.

Methodology

To make a comparison between scikit-learn and Tribuo, the tasks of classification, regression, and clustering were considered. While each task was unique, a common methodology was applicable to each task. The flowchart shown in Figure 1 illustrates the methodology followed in this work.

Figure 1. A flowchart that illustrates the methodology of this work

Identifying a dataset appropriate to the task was the logical first step. The dataset needed to be not too small, since a model should be trained with a sufficient amount of data. The dataset also needed to be not too large, to allow the models being developed to be trained in a reasonable amount of time. Finally, the dataset needed to possess features which could be used without requiring excessive preprocessing.

This work focused on the comparison of two ML libraries, not on the preprocessing of data. With that said, it’s almost always the case that a dataset will need some preprocessing.

Once the data preprocessing was complete, the data was re-exported to a comma-separated value (CSV) formatted file. Having a single, preprocessed dataset facilitated the comparison of a specific ML task between the two libraries by isolating the training, testing, and evaluation of the algorithms. For example, the classification algorithms from the Tribuo Java library and the scikit-learn Python library could load exactly the same data file. This aspect of the experiments was controlled very carefully.

Choosing comparable algorithms. To make an accurate comparison between scikit-learn and Tribuo, it was important that the same algorithms were compared. This third step of defining the common algorithms for each library required studying each library to identify what algorithms are available that could be accurately compared. For example, for the clustering task, Tribuo currently only supports K-Means and K-Means++, so these were the only algorithms common to both libraries which could be compared. Furthermore, it was essential that each algorithm’s parameters were precisely controlled for each library’s specific implementation.

To continue with the clustering example, when the K-Means++ object for each library was instantiated, the following parameters were used:

◉ maximum iterations = 100

◉ number of clusters = 6

◉ number of processors to use = 4

◉ deterministic randomness for centroids = 1
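As a rough sketch of how these settings might map onto scikit-learn, the listed values can be passed to the `KMeans` constructor. The mapping is an assumption, since the notebook code isn't reproduced in this article; in particular, `n_init=1` is a hypothetical choice, and the processor count is left out because recent scikit-learn releases of `KMeans` do not take such an argument.

```python
from sklearn.cluster import KMeans

# Assumed mapping of the listed parameters onto scikit-learn's KMeans.
kmeans = KMeans(
    n_clusters=6,      # number of clusters
    init="k-means++",  # K-Means++ centroid seeding
    max_iter=100,      # maximum iterations
    n_init=1,          # hypothetical: a single initialization run
    random_state=1,    # fixed seed so centroid selection is deterministic
)

# Show the configuration that would be used for training.
print(kmeans.get_params())
```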

The next step was to identify each library’s best algorithm for a specific ML task. This involved testing and tuning several different algorithms and their parameters to see which one performed the best. For some people, this is when ML is really fun!

Concretely, for the regression task, the random forest regressor and XGBoost regressor were found to be the best for scikit-learn and Tribuo, respectively. Being the best in this context meant achieving the best score for the task’s evaluation metric. The process of selecting the optimal set of parameters for a learning algorithm is known as hyperparameter optimization.
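The simplest form of hyperparameter optimization is an exhaustive grid search: score every combination of candidate values and keep the best. The sketch below illustrates the idea in plain Python. The function `toy_score` is a hypothetical stand-in for training a model and returning its validation score, and the parameter names and values are illustrative only; none of this is taken from the study’s notebooks.

```python
from itertools import product

def grid_search(param_grid, score_fn):
    """Try every combination in param_grid and return the best one."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_score(max_depth, n_trees):
    # Hypothetical stand-in for "train a model, return its validation score".
    # Higher is better; the peak is at max_depth=6, n_trees=100.
    return -((max_depth - 6) ** 2) - ((n_trees - 100) / 50) ** 2

grid = {"max_depth": [2, 4, 6, 8], "n_trees": [50, 100, 200]}
best_params, best_score = grid_search(grid, toy_score)
print(best_params, best_score)
```

The cost grows with the product of the list lengths (12 combinations here), which is one reason tuning several algorithms by hand takes time.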

A side note: In recent years, automated machine learning (AutoML) has emerged as a way to save time and effort in the process of hyperparameter optimization. AutoML is also capable of performing model selection, so in theory this entire process could be automated. However, the investigation and use of AutoML tools was out of the scope of this work.

Evaluating the algorithms. Once the preprocessed datasets were available, the libraries’ common algorithms had been defined, and the libraries’ best scoring algorithms had been identified, it was time to carefully evaluate the algorithms. This involved verifying that each library split the dataset into training and test data in an identical way, a step that was only applicable to the classification and regression tasks. It also required writing some evaluation functions which produced comparable output for both Python and Java, and for each of the ML tasks.
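One way such a check might look is sketched below in plain Python. The function name `split_indices`, the 80/20 proportion, and the record count are illustrative assumptions, not details from the study. Because Python and Java use different random number generators, a shared seed alone would not guarantee the same split in both languages; comparing the resulting record indices is a more direct check.

```python
import random

def split_indices(n_records, test_fraction, seed):
    """Return (train, test) index lists from a seeded shuffle."""
    indices = list(range(n_records))
    random.Random(seed).shuffle(indices)
    n_test = int(n_records * test_fraction)
    return indices[n_test:], indices[:n_test]

# Two independent splits with the same seed should be identical.
train_a, test_a = split_indices(1000, 0.2, seed=1)
train_b, test_b = split_indices(1000, 0.2, seed=1)

same_split = (train_a == train_b) and (test_a == test_b)
no_overlap = set(train_a).isdisjoint(test_a)
print(same_split, no_overlap, len(train_a), len(test_a))
```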

At this point, it should be clear that Jupyter notebooks were used for these comparisons. Because there were two libraries and three ML tasks, six different notebooks were evaluated: A single notebook was used to perform the training and testing of the algorithms for each ML task for one of the libraries. From a terminology standpoint, a notebook is also referred to as an experiment in this work.

Throughout this study, many executions of each notebook were performed for testing, tuning, and so forth. Once everything was finalized, three independent executions of each experiment were made in a very controlled way. This meant that the test system was running only the essential applications, to ensure that a maximum amount of CPU and memory resources were available to the experiment being executed. The results were recorded directly in the notebooks.

The final step in the methodology of this work was to compare the results. This included calculating the average of each model’s three training times. The average of each algorithm’s three recorded evaluation metrics was also calculated. These results were all reviewed to ensure the values were consistent and no obvious reporting errors had been made.
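The averaging step is simple arithmetic, sketched here in Python. The numbers are hypothetical placeholders rather than results from the study, and the spread calculation is just one possible way to check that three runs are consistent.

```python
from statistics import mean

# Hypothetical values for three runs of one model; not results from the study.
training_times_s = [12.4, 12.9, 12.2]
f1_scores = [0.61, 0.62, 0.60]

avg_time = mean(training_times_s)
avg_f1 = mean(f1_scores)

# A small spread between runs suggests the recorded values are consistent.
f1_spread = max(f1_scores) - min(f1_scores)

print(f"average training time: {avg_time:.2f} s")
print(f"average F1: {avg_f1:.3f} (spread {f1_spread:.3f})")
```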

Experiments

In the classification experiment, F1 scores were used to compare each algorithm’s ability to make correct predictions. The F1 score was the best metric to use since the dataset was unbalanced. In the test data, of a total of 28,158 records, there were 21,918 records with no rain and only 6,240 records indicating rain.
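To see why accuracy can mislead on a dataset like this, consider a hypothetical baseline that always predicts “No” (no rain). The sketch below uses the class counts given above; the baseline classifier and the helper `f1_score` are illustrative and not part of the study.

```python
def f1_score(tp, fp, fn):
    """F1 is the harmonic mean of precision and recall."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

n_no, n_yes = 21_918, 6_240  # class counts in the test data

# Hypothetical baseline: predict "No" for every record.
accuracy = n_no / (n_no + n_yes)
f1_no = f1_score(tp=n_no, fp=n_yes, fn=0)   # "No" treated as the positive class
f1_yes = f1_score(tp=0, fp=0, fn=n_yes)     # "Yes" treated as the positive class

print(f"accuracy={accuracy:.3f}  F1(No)={f1_no:.3f}  F1(Yes)={f1_yes:.3f}")
```

The baseline is right for roughly three quarters of the records, yet its F1 score for the “Yes” class is zero because it never predicts rain. Reporting F1 for both classes exposes this kind of failure.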

Something else is worth mentioning: In this experiment for the Tribuo library, loading the preprocessed dataset sometimes took a very long time. This issue has been fixed in the 4.2 release of Tribuo. Although data loading isn’t the focus of this study, poor data loading performance can be a significant problem when ML algorithms are used.

Clustering. The clustering task used a generated dataset of isotropic Gaussian blobs, which are simply sets of points normally distributed around a defined number of centroids. This dataset used six centroids, and each point had five dimensions. There were a total of six million records in this dataset, making it quite large.

The benefit of using an artificial dataset like this is that each point’s assigned cluster is known, which is useful for evaluating the quality of the clustering. Otherwise, evaluating a clustering task is more difficult. Using the known cluster assignments, an adjusted mutual information score is used to evaluate the clusters; it indicates the amount of correlation between the known and the predicted cluster assignments.
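A scaled-down version of this setup can be sketched with scikit-learn. The sample count here is far smaller than the six million records described, and the seeds and `n_init` value are illustrative assumptions, so this shows the shape of the experiment rather than reproducing it.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import adjusted_mutual_info_score

# Six centroids, five dimensions; 6,000 points instead of six million.
points, true_labels = make_blobs(
    n_samples=6000, n_features=5, centers=6, random_state=1
)

model = KMeans(
    n_clusters=6, init="k-means++", n_init=1, max_iter=100, random_state=1
)
predicted_labels = model.fit_predict(points)

# Compare the known assignments with the clusters the model found.
ami = adjusted_mutual_info_score(true_labels, predicted_labels)
print(f"adjusted mutual information: {ami:.3f}")
```

The score does not depend on how the cluster labels are numbered, so a model that recovers the six blobs under different label values still scores close to 1.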

Experimental results

Here are the results for classification, regression, and clustering.

Classification. As mentioned above, the F1 score is the best way to evaluate these classification algorithms, and the closer an F1 score is to 1, the better. The F1 scores for both the “Yes” and “No” classes were included. It was important to observe both values since this dataset was unbalanced. The results in Table 1 show that the F1 scores were very close for the algorithms common to both libraries, but Tribuo’s models were slightly better.

Table 1. Classifier results using the same algorithm

Table 2 indicates that Tribuo’s XGBoost classifier obtained the best F1 scores out of all the classifiers in a reasonable amount of time. The time values used in the tables containing these results are always the algorithm training times. This work was interested in the model which achieved the best F1 scores, but there could be other situations or applications which are more concerned with model training speed and have more tolerance for incorrect predictions. For those cases, it’s worth noting that, for the algorithms common to both libraries, the scikit-learn training times are better than those obtained by Tribuo.

Table 2. Classifier best algorithm results

It’s helpful to have a visualization focusing on these F1 scores. Figure 2 shows a stacked column chart combining each model’s F1 score for the Yes class and the No class. Again, this shows Tribuo’s XGBoost classifier model was the best.

Figure 2. A stacked column chart combining each model’s F1 scores

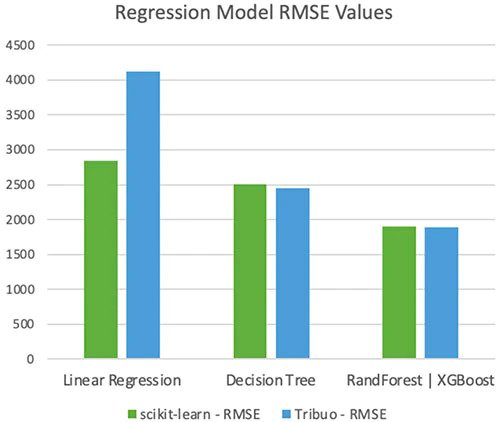

Regression. Keep in mind that a lower root mean square error (RMSE) value is a better score than a higher RMSE value, and the R2 score closest to 1 is best.
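For readers who want the two metrics spelled out, the following sketch computes them from their standard definitions in plain Python. The sample values are made up for illustration and are unrelated to the study’s dataset.

```python
import math

def rmse(actual, predicted):
    """Root mean square error: lower is better, 0 is a perfect fit."""
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared_errors) / len(actual))

def r2_score(actual, predicted):
    """Coefficient of determination: 1 is a perfect fit."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1 - ss_res / ss_tot

# Made-up values for illustration only.
actual = [3.0, 5.0, 7.0, 9.0]
predicted = [2.5, 5.5, 7.0, 9.5]

model_rmse = rmse(actual, predicted)
model_r2 = r2_score(actual, predicted)
print(f"RMSE={model_rmse:.4f}  R2={model_r2:.4f}")
```

RMSE is expressed in the units of the target variable, while R2 is unitless, which is why the two are reported together.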

Table 3 shows the results from the regression algorithms common to the scikit-learn and Tribuo libraries. Both libraries’ implementations of stochastic gradient descent scored very poorly, so those huge values aren’t included here. Of the remaining algorithms common to both libraries, scikit-learn’s linear regression model scored better than Tribuo’s linear regression model, and Tribuo’s decision tree model beat out scikit-learn’s model.

Table 3. Regressor results using the same algorithm

Table 4 shows the results for the model from each library which produced the lowest RMSE value and highest R2 score. Here, the Tribuo XGBoost regressor model achieved the best scores, which were just slightly better than those of the scikit-learn random forest regressor.

Table 4. Regressor results for the best algorithm

Visualizations of these tables, which summarize the scores from the regression experiments, reinforce the results. Figure 3 shows a clustered column chart of the RMSE values, while Figure 4 shows a clustered column chart of the R2 scores. The poor scoring stochastic gradient descent models aren’t included. Recall that the two columns on the right compare each library’s best scoring model, which is why the scikit-learn random forest model is side by side with the Tribuo XGBoost model.

Figure 3. A clustered column chart comparing the RMSE values

Figure 4. A clustered column chart comparing the R2 scores

Clustering. For a clustering model, an adjusted mutual information value of 1 indicates perfect correlation between clusters.

Table 5 shows the results of the two libraries’ K-Means and K-Means++ algorithms. It’s not surprising that most of the models get a 1 for their adjusted mutual information value. This is a result of how the points in this dataset were generated. Only the Tribuo K-Means implementation didn’t achieve a perfect adjusted mutual information value. It’s worth mentioning that although there are several other clustering algorithms available in scikit-learn, none of them could finish training using this large dataset.

Table 5. Clustering results

Additional findings

Comparing library documentation. To prepare the ML models for comparison, the scikit-learn documentation was heavily consulted. The scikit-learn documentation is superb. The API docs are complete and verbose, and they provide simple, relevant examples. There’s also a user guide which provides additional information beyond what’s contained in the API docs. It’s easy to find the right information when models are being built and tested.

Good documentation is one of the main goals of the scikit-learn project. At the time of writing, Tribuo doesn’t have an equivalent set of published documentation. The Tribuo API docs are complete, and there are helpful tutorials which describe how to perform the standard ML tasks. Performing tasks beyond this requires more effort, but some hints can be found by reviewing the appropriate unit tests in the source code.

Reproducibility. There are certain situations when ML models are used where reproducibility is important. Reproducibility means being able to train or use a model repeatedly and observe the same result with a fixed dataset. This can be difficult to achieve, for example, when a model depends on a random number generator and the model has been trained several times, causing several invocations of the model’s random number generator.
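The effect of repeated invocations can be illustrated with Python’s standard `random` module. The function `initial_weights` is a hypothetical stand-in for any training step that draws random numbers; it is not taken from either library.

```python
import random

def initial_weights(rng, n):
    """Hypothetical training step that consumes n random numbers."""
    return [rng.random() for _ in range(n)]

# A fresh generator with the same seed gives the same numbers each time.
first = initial_weights(random.Random(1), 3)
second = initial_weights(random.Random(1), 3)

# A generator shared across training runs keeps advancing its state,
# so the second run starts from a different point in the sequence.
shared = random.Random(1)
run_1 = initial_weights(shared, 3)
run_2 = initial_weights(shared, 3)

print(first == second)  # same seed, fresh generator
print(run_1 == run_2)   # shared generator, second invocation
```

Reproducing the second run therefore requires knowing not only the seed but also how many times the generator was invoked before it.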

Other considerations. The comparisons described in this work were performed using Jupyter notebooks. It’s well known that Jupyter includes a Python kernel by default. However, Jupyter doesn’t natively support Java. Fortunately, a Java kernel can be added to Jupyter using a project called IJava. The functionality provided by this kernel enabled the comparisons made in this study. Clearly, these kernels aren’t directly related to the libraries under study but are noted since they provided the environment in which these libraries were exercised.

The usual comment that Python is more concise than Java wasn’t really applicable in these experiments. The var keyword, which was introduced in Java 10, provides local variable type inference and reduces some boilerplate code often associated with Java. Developing functions in the notebooks still requires defining the types of the parameters since Java is statically typed. In some cases, getting the generics right requires referencing the Tribuo API docs.

The ML tasks of classification, regression, and clustering were the focus of the comparisons made in this work. It should be noted again that scikit-learn provides many more algorithm implementations than Tribuo for each of these tasks. Furthermore, scikit-learn offers a broader range of features, such as an API for visualizations and dimensionality reduction techniques.

Supply: oracle.com