I was following this documentation and ran into a few things I wanted to document. This is what I wanted to achieve.

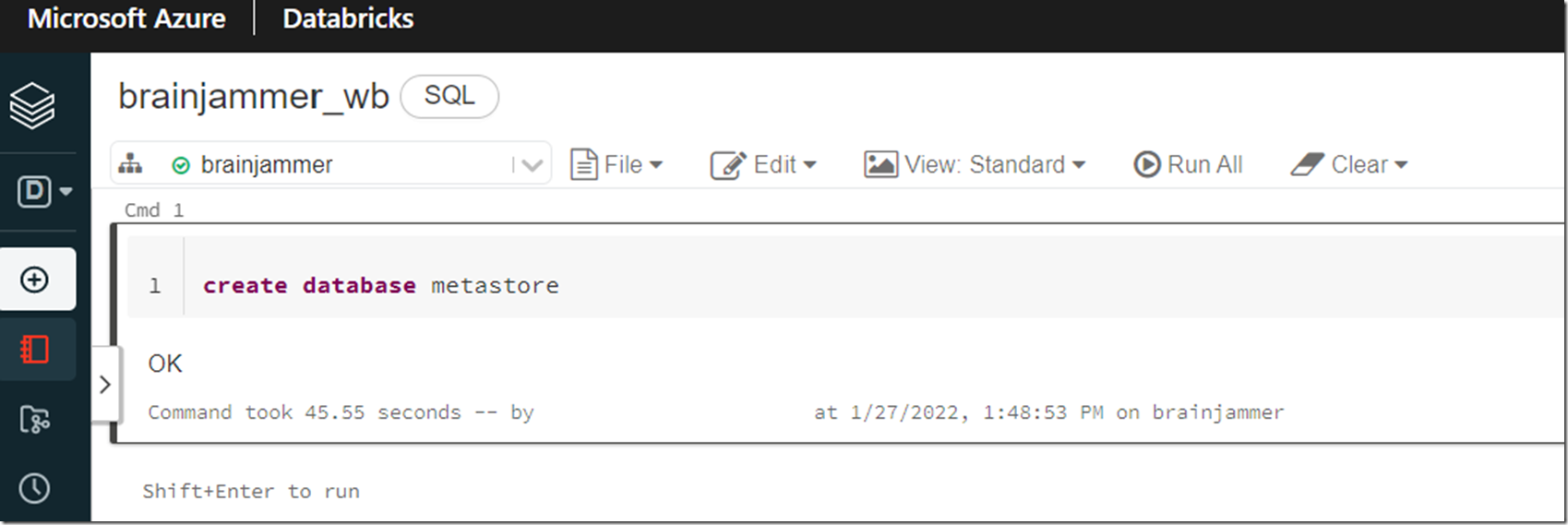

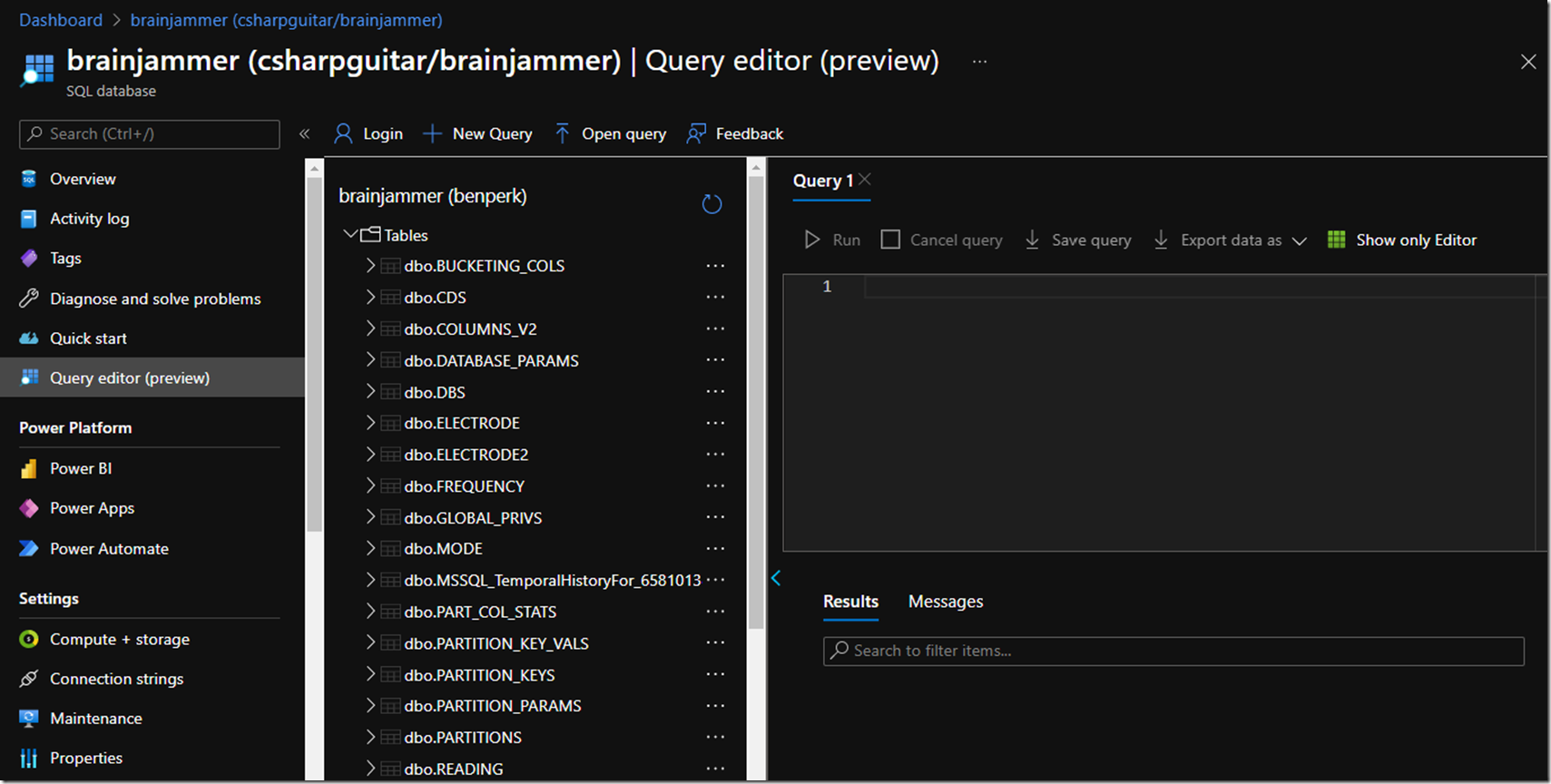

Figure 1, External Apache Hive metastore using Azure Databricks and Azure SQL

Figure 2, External Apache Hive metastore using Azure Databricks and Azure SQL

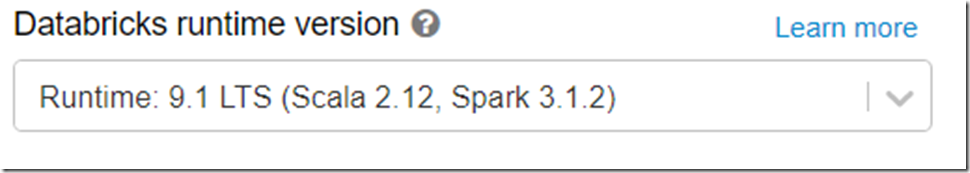

The cluster version I used was the most current one.

Figure 3, Azure Databricks Runtime: 9.1 LTS (Scala 2.12, Spark 3.1.2) cluster

Here is what my final Spark config ended up looking like.

datanucleus.schema.autoCreateTables true

spark.hadoop.javax.jdo.option.ConnectionUserName benperk@csharpguitar

datanucleus.fixedDatastore false

spark.hadoop.javax.jdo.option.ConnectionURL jdbc:sqlserver://csharpguitar.database.windows.net:1433;database=brainjammer

spark.hadoop.javax.jdo.option.ConnectionPassword *************

spark.hadoop.javax.jdo.option.ConnectionDriverName com.microsoft.sqlserver.jdbc.SQLServerDriver
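The cluster Spark config takes one `key value` pair per line, so a config like the one above can be sanity-checked before pasting it into the cluster. This is a minimal Python sketch, not a Databricks tool: the `parse_spark_conf` and `missing_keys` helpers are hypothetical, and `REQUIRED_KEYS` is an assumption built only from the four JDO connection entries shown above.

```python
from __future__ import annotations

# Assumption: the JDO connection keys used in this post's config; not an official list.
REQUIRED_KEYS = [
    "spark.hadoop.javax.jdo.option.ConnectionURL",
    "spark.hadoop.javax.jdo.option.ConnectionUserName",
    "spark.hadoop.javax.jdo.option.ConnectionPassword",
    "spark.hadoop.javax.jdo.option.ConnectionDriverName",
]


def parse_spark_conf(text: str) -> dict[str, str]:
    """Parse Spark config text with one "key value" pair per line (hypothetical helper)."""
    conf: dict[str, str] = {}
    for raw in text.splitlines():
        parts = raw.strip().split(None, 1)  # split on the first run of whitespace
        if not parts:
            continue  # skip blank lines between entries
        key = parts[0]
        value = parts[1] if len(parts) > 1 else ""
        conf[key] = value
    return conf


def missing_keys(conf: dict[str, str]) -> list[str]:
    """Return the assumed-required connection keys that are absent from conf."""
    return [key for key in REQUIRED_KEYS if key not in conf]


sample = """
datanucleus.schema.autoCreateTables true
spark.hadoop.javax.jdo.option.ConnectionUserName benperk@csharpguitar
datanucleus.fixedDatastore false
spark.hadoop.javax.jdo.option.ConnectionURL jdbc:sqlserver://csharpguitar.database.windows.net:1433;database=brainjammer
spark.hadoop.javax.jdo.option.ConnectionPassword *************
spark.hadoop.javax.jdo.option.ConnectionDriverName com.microsoft.sqlserver.jdbc.SQLServerDriver
"""

conf = parse_spark_conf(sample)
print(missing_keys(conf))  # []
```

A typo such as `javax.jdo.choice` instead of `javax.jdo.option` would show up here as a missing key rather than as a runtime error on the cluster.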

Basically, the datanucleus.autoCreateSchema configuration name is not correct, so I changed datanucleus.autoCreateSchema true to datanucleus.schema.autoCreateTables true. Before that change, I was getting these errors:
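The rename is mechanical, so it can be applied to a parsed config programmatically. A minimal Python sketch, assuming the config is already a dict of string keys and values; `RENAMED_KEYS` holds only the single rename described in this post, and `migrate_keys` is a hypothetical helper.

```python
from __future__ import annotations

# Only the rename described in this post (hypothetical mapping; extend as needed).
RENAMED_KEYS = {
    "datanucleus.autoCreateSchema": "datanucleus.schema.autoCreateTables",
}


def migrate_keys(conf: dict[str, str]) -> dict[str, str]:
    """Return a copy of conf with old key names replaced by their new names."""
    migrated: dict[str, str] = {}
    for key, value in conf.items():
        migrated[RENAMED_KEYS.get(key, key)] = value  # unknown keys pass through unchanged
    return migrated


old = {
    "datanucleus.autoCreateSchema": "true",
    "datanucleus.fixedDatastore": "false",
}
print(migrate_keys(old))
# {'datanucleus.schema.autoCreateTables': 'true', 'datanucleus.fixedDatastore': 'false'}
```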

- Caused by: MetaException(message:Version information not found in metastore.)

- Caused by: javax.jdo.JDODataStoreException: Required table missing : "VERSION" in Catalog "" Schema "". DataNucleus requires this table to perform its persistence operations. Either your MetaData is incorrect, or you need to enable "datanucleus.schema.autoCreateTables"

All of the following messages occurred because I had included these two entries in my Spark config:

- spark.sql.hive.metastore.version 3.1 (I also tried spark.sql.hive.metastore.version 2.3.7)

- spark.sql.hive.metastore.jars builtin

- AnalysisException: java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient;

- Error in SQL statement: IllegalArgumentException: Builtin jars can only be used when hive execution version == hive metastore version. Execution: 2.3.7 != Metastore: 3.1. Specify a valid path to the correct hive jars using spark.sql.hive.metastore.jars or change spark.sql.hive.metastore.version to 2.3.7.

- Builtin jars can only be used when hive execution version == hive metastore version. Execution: 2.3.7 != Metastore: 0.13.0. Specify a valid path to the correct hive jars using spark.sql.hive.metastore.jars or change spark.sql.hive.metastore.version to 2.3.7.
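The rule behind the "Builtin jars" messages is easy to state: with `spark.sql.hive.metastore.jars builtin`, the configured metastore version must equal the Hive execution version bundled with the runtime, which these messages report as 2.3.7. Below is a simplified Python illustration of that check, assuming 2.3.7 as the execution version; `check_builtin_jars` is hypothetical and is not Spark's actual implementation.

```python
def check_builtin_jars(metastore_jars: str, metastore_version: str,
                       execution_version: str = "2.3.7") -> None:
    """Raise ValueError when builtin jars are paired with a different metastore version.

    Simplified illustration only; the message wording mirrors the errors above.
    """
    if metastore_jars == "builtin" and metastore_version != execution_version:
        raise ValueError(
            "Builtin jars can only be used when hive execution version == "
            f"hive metastore version. Execution: {execution_version} != "
            f"Metastore: {metastore_version}."
        )


for version in ["3.1", "0.13.0", "2.3.7"]:
    try:
        check_builtin_jars("builtin", version)
        print(version, "ok")
    except ValueError as err:
        print(version, "rejected:", err)
```

Passing this check only removes the version-mismatch error; it says nothing about whether the metastore tables themselves exist in the Azure SQL database.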

I did find some information on StackOverflow about adding these two lines to the Spark config, which provided some good details. It turns out the name has apparently changed:

- datanucleus.autoCreateSchema true

- datanucleus.fixedDatastore false