What is Edge AI?

Data science, machine learning, and AI have become integral to scientific and engineering research. To fuel innovation, companies have plowed considerable resources into data collection, aggregation, transformation, and engineering. With data volumes hitting critical mass at many organizations, expectations to demonstrate the data’s value are growing. AI has clearly demonstrated its value, especially when running in the cloud or a data center. An important next phase for AI is model deployment and operation at the “Edge”.

What distinguishes the Edge from other types of deployment is the execution location. The core idea of Edge AI is to move the workload (that is, inference using an AI model) to where the data is collected or where the model output is consumed. In Edge AI, the servers running the workload are located right on the factory production line, the department store floor, or the wind farm. Many such use cases require answers in milliseconds, not seconds. Additionally, Internet connectivity may be limited, data volumes large, and the data confidential. A great advantage of Edge AI is that model inference happens in extremely close physical proximity to the data collection site. This reduces processing latency, as data does not have to travel across the public Internet. Data privacy and security also improve, and data transfer, compute, and storage costs decrease. Better yet, many of the obstacles that stood in the way of the Edge vision can finally be overcome.
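To make the latency argument concrete, here is a minimal sketch of the arithmetic. All numbers are illustrative assumptions rather than measurements, and the `Deployment` class and `meets_budget` helper are hypothetical names introduced only for this example.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    """One place a model could run, described by two assumed timings."""
    name: str
    network_round_trip_ms: float  # assumed time for data to reach the model and back
    inference_ms: float           # assumed time for the model to produce an answer

    def response_time_ms(self) -> float:
        # Total time the consumer waits: network travel plus model inference.
        return self.network_round_trip_ms + self.inference_ms


def meets_budget(deployment: Deployment, budget_ms: float) -> bool:
    """Return True when the total response time fits within the latency budget."""
    return deployment.response_time_ms() <= budget_ms


# Illustrative values only: same model, same inference time, different network path.
cloud = Deployment("cloud", network_round_trip_ms=80.0, inference_ms=10.0)
edge = Deployment("edge", network_round_trip_ms=1.0, inference_ms=10.0)
BUDGET_MS = 50.0  # assumed requirement for a production-line decision

for d in (cloud, edge):
    print(f"{d.name}: {d.response_time_ms():.0f} ms, "
          f"within budget: {meets_budget(d, BUDGET_MS)}")
```

Under these assumed figures, the network path dominates the response time, which is why moving inference next to the data source can matter more than speeding up the model itself.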

Why is Edge AI Important?

Let’s assume we run a very large electricity generation provider with thousands of assets. AI technology can assist in many areas, from predictive maintenance to automation and optimization. These assets span hydroelectric, nuclear, wind, and solar facilities. Within each asset, there are thousands of condition-monitoring sensors. Each location will likely require several servers equipped with robust hardware, such as a strong CPU and a powerful GPU.

While sufficient compute capacity to support Edge AI is now practical, several new hurdles emerge: How do you effectively administer and oversee a massive server fleet with limited connectivity? How do you deploy AI model updates to the hardware without having to send a team to physically tend to it? How do you ensure a new model can run across a multitude of hardware and software configurations? How do you ensure all model dependencies are met? The workflow we will show you at GTC demonstrates how we are starting to address these hurdles.

What is NVIDIA Fleet Command?

To address the challenge of bringing models to the edge, NVIDIA launched Fleet Command. NVIDIA Fleet Command is a cloud-based tool for managing and deploying AI applications across edge devices. It eases the rollout of model updates, centralizes device configuration management, and monitors individual system health.
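To illustrate the kind of check a fleet-management tool has to perform before rolling out a model update, here is a minimal sketch. It is not Fleet Command's API: the `ModelRequirements` and `EdgeServer` classes, the `plan_rollout` function, the server names, and the dependency name are all hypothetical and exist only for this example.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ModelRequirements:
    """What a (hypothetical) model release needs from an edge server."""
    cpu_arch: str
    min_gpu_memory_gb: int
    dependencies: frozenset


@dataclass
class EdgeServer:
    """A simplified description of one server in the fleet."""
    name: str
    cpu_arch: str
    gpu_memory_gb: int
    installed: set = field(default_factory=set)


def compatibility_problems(server: EdgeServer, req: ModelRequirements) -> list:
    """List every reason the model cannot run on this server (empty if none)."""
    problems = []
    if server.cpu_arch != req.cpu_arch:
        problems.append(f"CPU architecture {server.cpu_arch} != {req.cpu_arch}")
    if server.gpu_memory_gb < req.min_gpu_memory_gb:
        problems.append(
            f"GPU memory {server.gpu_memory_gb} GB < {req.min_gpu_memory_gb} GB"
        )
    missing = sorted(req.dependencies - server.installed)
    if missing:
        problems.append("missing dependencies: " + ", ".join(missing))
    return problems


def plan_rollout(fleet, req):
    """Split the fleet into servers ready for the update and servers that are blocked."""
    ready, blocked = [], {}
    for server in fleet:
        problems = compatibility_problems(server, req)
        if problems:
            blocked[server.name] = problems
        else:
            ready.append(server.name)
    return ready, blocked


# Hypothetical fleet and requirements, for illustration only.
requirements = ModelRequirements("x86_64", 12, frozenset({"model-runtime"}))
fleet = [
    EdgeServer("turbine-01", "x86_64", 16, {"model-runtime"}),
    EdgeServer("dam-07", "x86_64", 8, {"model-runtime"}),
    EdgeServer("solar-12", "arm64", 16),
]

ready, blocked = plan_rollout(fleet, requirements)
print("ready:", ready)
for name, problems in blocked.items():
    print(f"blocked: {name}: {'; '.join(problems)}")
```

Collecting every problem per server, rather than stopping at the first one, gives an operator the full picture without a site visit, which is the point of central fleet management.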

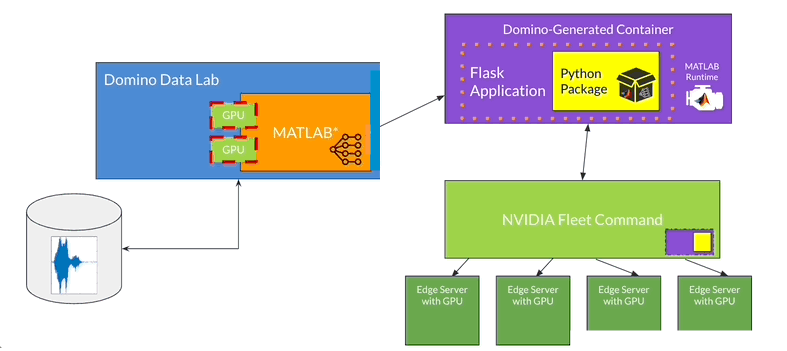

For more than three years, Domino Data Lab and MathWorks have partnered to serve and scale research and development for joint customers. MATLAB and Simulink can run on Domino’s MLOps platform, unlocking access to robust enterprise computing and truly vast data troves. Users can accelerate discovery by accessing enterprise-grade NVIDIA GPUs and parallel computing clusters. With Domino, MATLAB users can get more out of MATLAB’s tools for training AI models, integrating AI into system design, and interoperating with other computing platforms and technologies. Engineers can collaborate with peers through shared data, automated batch job execution, and APIs.

Demo: Deploy AI Model to the Edge

At GTC, we will demonstrate a complete workflow from model creation to edge deployment, and ultimately using the model for inference at the edge. This workflow is collaborative and cross-functional, bridging the work of data scientists, engineers, and IT professionals.

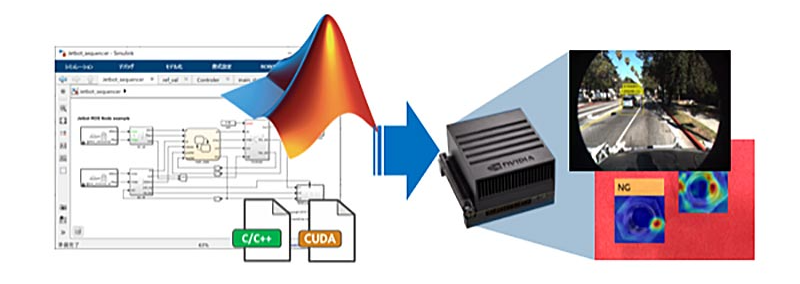

- The engineers perform transfer learning using MATLAB. They perform model surgery on a pretrained deep learning model and retrain the model on new data, which they can access using Domino’s seamless integration with cloud repositories. They also leverage enterprise-grade NVIDIA GPUs to accelerate the model retraining.

- The engineers package the deep learning model using MATLAB Compiler SDK and then use Domino to publish the model into an NVIDIA Fleet Command-compatible Kubernetes container.

- The IT team loads the container into the company’s NVIDIA Fleet Command container registry using a Domino API.

- Once configured, Fleet Command deploys the container to x86-based, GPU-powered factory floor edge servers. The model is then available where it is needed, with near-instantaneous inference.

Watch the following animation to learn more details about the workflow. To learn even more, join us at GTC!

Conclusion

This workflow showcases many important aspects of edge success. MathWorks, NVIDIA, and Domino partnered to enable scientists and engineers to accelerate the pace of discovery and unleash the power of data. The demo also showcases the power of collaboration across disciplines and platform openness. With Domino and MATLAB, enterprises can provide the right experts with their preferred tool. Engineers and data scientists can access and collaborate on any data type of any scale, wherever it is stored. The workflow provides a straightforward, repeatable process to get a model into production at the edge, where it is needed.

We look forward to having you join us online for the session at GTC! Remember, search for session S52424: Deploy a Deep Learning Model from the Cloud to the Edge. Feel free to reach out to us with questions at yuval.zukerman@dominodatalab.com or domino@mathworks.com.