Speaking images into existence using DALL-E mini and AssemblyAI

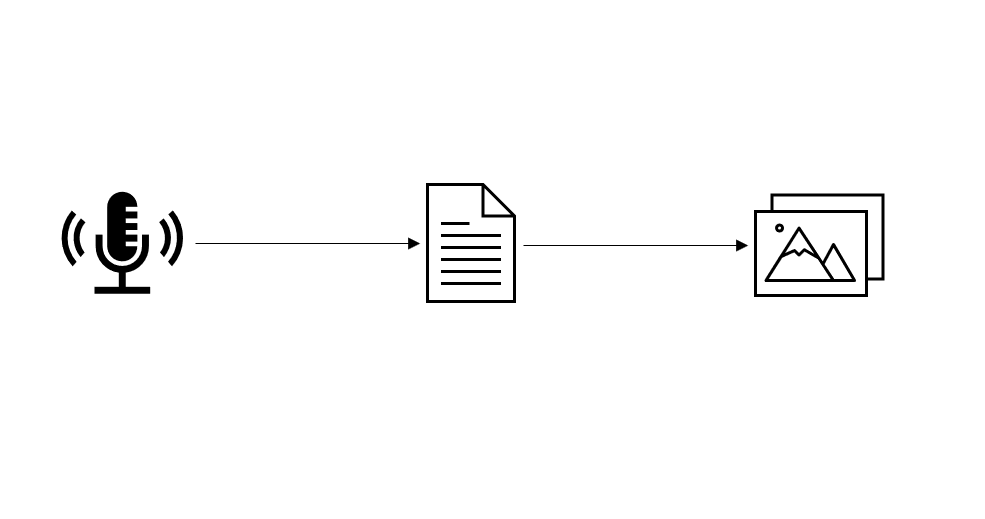

Speech, text, and images are the three ways humanity has transmitted information throughout history. In this project, we're going to build an application that listens to speech, turns that speech into text, then turns that text into images. All of this can be done in an afternoon. We live in a remarkable time!

This project was inspired by a YouTube tutorial, so please check that out, as I found it very helpful and its creators deserve credit.

Background information needed:

- DALL-E was created by the company OpenAI. It introduced the world to AI-generated images and took off in popularity about a year ago. OpenAI also has a free API that handles all sorts of other fun AI-related tasks.

- DALL-E mini is an open-source alternative to DALL-E that tinkerers, like you and me, can play around with for free. This is the engine we'll be leveraging in this tutorial.

- DALL-E Playground is an open-source application that does two things: 1. It uses Google Colab to create and run a backend DALL-E mini server, which provides the GPU processing needed to generate images. 2. It provides a front-end web interface, written in JavaScript, where users can interact with the engine and view their images.

What this project does:

- Reengineers DALL-E Playground's front-end interface from JavaScript to Streamlit in Python (because 1. the UI looks better, 2. it works more seamlessly with the speech-to-text API, and 3. Python is cooler).

- Leverages AssemblyAI's transcription models to transcribe speech into the text input that the DALL-E mini engine can work with.

- Listens to speech and displays creative and interesting images.

This project is broken up into two main files: main.py and dalle.py.

If the summaries of the files below sound like gibberish to you, hang in there! Within the code itself, there are many comments that break down these concepts more thoroughly.

The main script is used for both the Streamlit web application and the voice-to-text API connection. It involves configuring the Streamlit session state, creating visual features such as buttons and sliders on the web app interface, setting up the WebSocket connection, filling in all the parameters required for pyaudio, and creating asynchronous functions that send and receive the speech data concurrently between our application and AssemblyAI's server.
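To make the concurrent send and receive idea concrete, here is a minimal sketch using only the standard library. It is not the code from main.py: `FakeSocket` is a hypothetical in-memory stand-in for the WebSocket connection, the byte strings stand in for pyaudio chunks, and the `audio_data` and `text` message keys are assumptions about the message format rather than a confirmed API contract.

```python
import asyncio
import base64
import json


class FakeSocket:
    """Hypothetical in-memory stand-in for a WebSocket connection.

    It answers every audio message with one fake transcript message,
    so the example runs without a network connection or an API key.
    """

    def __init__(self):
        self._inbox = asyncio.Queue()

    async def send(self, message):
        # Pretend the server transcribed the chunk it just received.
        size = len(base64.b64decode(json.loads(message)["audio_data"]))
        await self._inbox.put(json.dumps({"text": f"heard {size} bytes"}))

    async def recv(self):
        return await self._inbox.get()


async def send_audio(socket, chunks):
    """Base64-encode each raw audio chunk and send it as a JSON message."""
    for chunk in chunks:
        payload = base64.b64encode(chunk).decode("utf-8")
        # "audio_data" is an assumed key name for this sketch.
        await socket.send(json.dumps({"audio_data": payload}))
        await asyncio.sleep(0)  # yield control so the receiver can run


async def receive_text(socket, expected):
    """Collect the assumed "text" field of each incoming message."""
    transcripts = []
    for _ in range(expected):
        message = json.loads(await socket.recv())
        transcripts.append(message["text"])
    return transcripts


async def run_session(chunks):
    socket = FakeSocket()
    # gather() runs the sender and the receiver concurrently on one event loop.
    _, transcripts = await asyncio.gather(
        send_audio(socket, chunks),
        receive_text(socket, len(chunks)),
    )
    return transcripts


transcripts = asyncio.run(run_session([b"\x00" * 4, b"\x01" * 8]))
print(transcripts)
```

In a real application, the fake socket would be replaced by an actual WebSocket connection and the chunks would come from a pyaudio input stream, but the two-coroutine structure would stay the same.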

The dalle.py file is used to connect the Streamlit web application to the Google Colab server running the DALL-E mini engine. This file has a few functions that serve the following purposes:

- Establishes a connection to the backend server and verifies that it's valid

- Initiates a call to the server by sending text input for processing

- Retrieves the image JSON data and decodes it using base64.b64decode()
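The last two steps can be sketched roughly as follows, using only the standard library. This is an illustration, not the contents of dalle.py: the function names are hypothetical, and the `/dalle` path, the `text` and `num_images` keys, and the response shape (a JSON array of base64 strings) are assumptions about the backend that should be checked against the server you actually run.

```python
import base64
import json
import urllib.request


def build_generate_request(backend_url, text, num_images):
    """Build (but do not send) a POST request carrying the text prompt.

    The "/dalle" path and the "text"/"num_images" keys are assumptions.
    """
    body = json.dumps({"text": text, "num_images": num_images}).encode("utf-8")
    return urllib.request.Request(
        backend_url.rstrip("/") + "/dalle",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def decode_images(response_body):
    """Turn a JSON array of base64 strings into a list of raw image bytes."""
    return [base64.b64decode(item) for item in json.loads(response_body)]


def generate_images(backend_url, text, num_images, timeout=120):
    """Send the prompt and return the decoded images (needs a live backend)."""
    request = build_generate_request(backend_url, text, num_images)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return decode_images(response.read())


# Offline demonstration with a fabricated response body.
fake_body = json.dumps([base64.b64encode(b"fake-image-bytes").decode("ascii")])
images = decode_images(fake_body)
request = build_generate_request("http://localhost:8080/", "a red fox", 2)
```

Keeping the decoding step in its own function means it can be exercised without a running Colab server, which is handy because the backend URL changes every time the notebook restarts.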

Please reference my GitHub here to see the full application. I tried to include comments and a breakdown of what each chunk of code is doing as I went along, so hopefully it's fairly intuitive. And please reference the original project's repository here for more context.

main file:

dalle file:

This project is a proof of concept for something I'd like to have in my house someday. I'd like to have a screen on my wall in the middle of a decorative frame. Let's call it a smart picture frame. This screen will have a built-in microphone that listens to all conversations spoken nearby. Using speech-to-text transcription and natural language processing, the frame will filter and choose the most interesting collection of words spoken every 30 seconds or so. From there, the text will be continually visualized to dynamically add more depth to the atmosphere.

Imagine visual representations and themes of conversation being displayed on the wall in real time during hangouts and family gatherings. How many creative ideas could emerge from something like this? How might the mood of the house change and morph depending on the mood of the participants? The house will feel less like an inorganic structure and more like a participant itself. Very interesting to think about.

All in all, this project was a fun way to get our hands dirty and play around with these concepts. It's kind of disappointing that DALL-E mini doesn't produce the same kind of extremely high-quality images that engines like OpenAI's DALL-E 2 do. Nonetheless, I still enjoyed learning the process and concepts behind the technology in this project. Probably in a few years, APIs for these high-resolution image-generating services will be easier to access and play around with anyway. Thanks to anyone who made it all the way through. And good luck on your journey toward learning every day.