Written by Harry Roberts on CSS Wizardry.

This post started life as a Twitter thread, but I felt it needed a more permanent home. You should follow me on Twitter if you don’t already.

I’ve been asked a few times, mostly in workshops, why HTTP/2 (H/2) waterfalls often still look like HTTP/1.x (H/1). Why are things done in sequence rather than in parallel?

Let’s unpack it!

Fair warning: I’m going to oversimplify some terms and concepts. My aim is to illustrate a point rather than explain the protocol in detail.

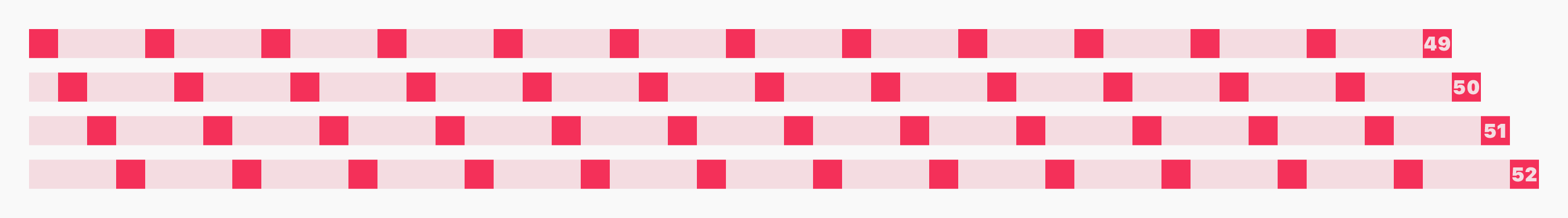

One of many guarantees of H/2 was infinite parallel requests (up from the

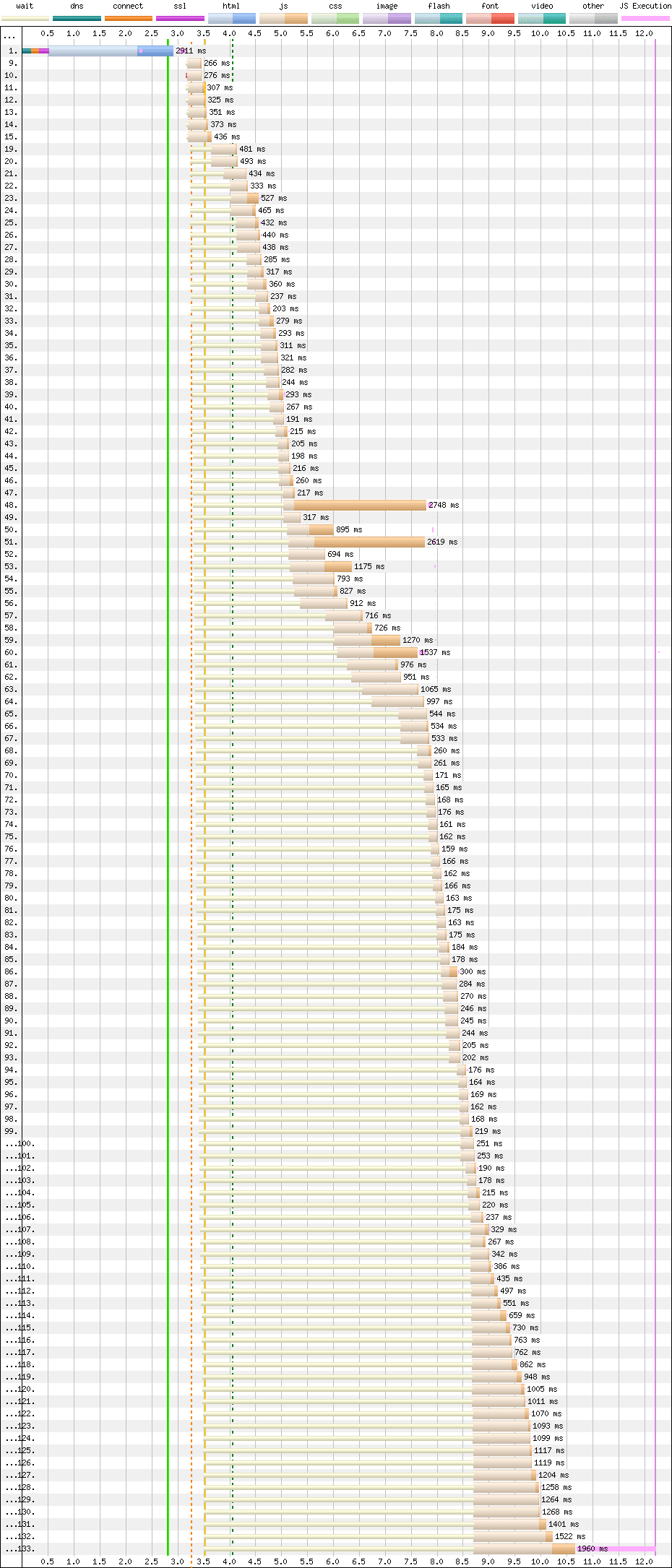

historic six concurrent connections in H/1). So why does this H/2-enabled web site

have such a staggered waterfall? This doesn’t seem like H/2 in any respect!

Things get a little clearer if we add Chrome’s queueing time to the graph. All of these files were discovered at the same time, but their requests were dispatched in sequence.

Files were discovered around 3.25s, but were all requested some time after that.

For a performance engineer, one of the first shifts in thinking is that we don’t care only about when resources were discovered or requests were dispatched (the leftmost part of each entry). We also care about when responses finished (the rightmost part of each entry).
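To make that distinction concrete, here is a minimal Python sketch of a few waterfall rows. The `Entry` class and its field names are hypothetical (they are not Chrome DevTools’ or any browser API’s names), and only the 3.25s discovery time comes from the chart; every other timing is made up for illustration. Queueing time is the gap between discovery and dispatch, while the moment a file becomes useful is when its response finishes.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    """One hypothetical waterfall row; all times are in seconds."""
    name: str
    discovered: float     # when the browser found the resource
    request_start: float  # when the request was actually dispatched
    response_end: float   # when the last byte of the response arrived

    @property
    def queueing(self) -> float:
        # Time spent waiting between discovery and dispatch.
        return self.request_start - self.discovered

    @property
    def useful_at(self) -> float:
        # A file can only be used once its response has finished.
        return self.response_end


# Hypothetical timings: all discovered together, dispatched one after another.
entries = [
    Entry("a.js", 3.25, 3.30, 3.60),
    Entry("b.js", 3.25, 3.60, 3.90),
    Entry("c.js", 3.25, 3.90, 4.20),
]

for e in entries:
    print(f"{e.name}: queued {e.queueing:.2f}s, useful at {e.useful_at:.2f}s")
```

Reading the rightmost edge (`useful_at`) rather than the leftmost (`discovered`) is the shift in thinking described above.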

When we stop and think about it, ‘when was a file useful?’ is much more important than ‘when was a file discovered?’. Of course, a late-discovered file will also be late-useful, but really the only thing that matters is usefulness.

With H/2, yes, we can make far more requests at a time, but making more requests doesn’t magically make everything faster. We’re still limited by device and network constraints. We still have finite bandwidth, only now it has to be shared among more files: it just gets diluted.
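A toy model helps show what ‘diluted’ means. The sketch below assumes bandwidth is the only constraint and that it is split equally among every file still downloading (a processor-sharing simplification; real TCP and H/2 prioritisation are far messier). The function names and the file sizes are hypothetical.

```python
def fair_share_finish_times(sizes, bandwidth):
    """Finish time of each file when `bandwidth` is shared equally among
    all files still in flight (toy processor-sharing model)."""
    remaining = list(sizes)
    done = [0.0 if s <= 0 else None for s in sizes]
    active = [i for i, s in enumerate(sizes) if s > 0]
    t = 0.0
    while active:
        share = bandwidth / len(active)  # each in-flight file gets an equal slice
        smallest = min(remaining[i] for i in active)
        t += smallest / share            # time until the smallest file completes
        for i in active:
            remaining[i] -= smallest     # every active file received `smallest` units
        for i in active:
            if remaining[i] <= 0:
                done[i] = t
        active = [i for i in active if done[i] is None]
    return done


def sequential_finish_times(sizes, bandwidth):
    """Finish time of each file when the whole link serves one file at a time."""
    t = 0.0
    finished = []
    for size in sizes:
        t += size / bandwidth
        finished.append(t)
    return finished


# Hypothetical: four 100 KB files over a 100 KB/s link.
sizes = [100, 100, 100, 100]
print(fair_share_finish_times(sizes, 100))  # [4.0, 4.0, 4.0, 4.0]
print(sequential_finish_times(sizes, 100))  # [1.0, 2.0, 3.0, 4.0]
```

Under these assumptions both strategies take 4s in total, but sharing the link means no file is usable until the very end, whereas serving files one at a time makes three of the four available earlier.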

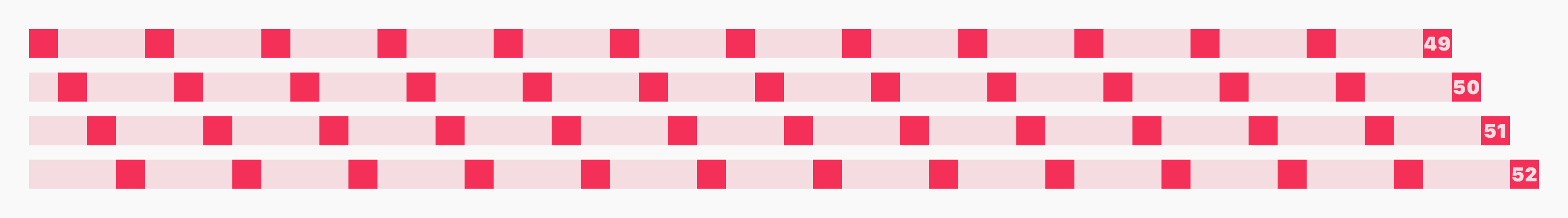

Let’s leave the web and HTTP for a moment. Let’s play cards! Taylor, Charlie, Sam, and Alex want to play cards. I’m going to deal the cards to the four of them.

These four people and their cards represent downloading four files. Instead of bandwidth, the constant here is that it takes me ONE SECOND to deal one card. No matter how I do it, it will take me 52 seconds to finish the job.

The traditional round-robin approach to dealing cards would be one to Taylor, one to Charlie, one to Sam, one to Alex, and so on, over and over until they’re all dealt. Fifty-two seconds.

This is what that looks like. It took 49 seconds before the first person had all of their cards.
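The round-robin deal is easy to simulate. This minimal Python sketch assumes a 52-card deck, one second per card, and an even split between players; `deal_round_robin` is a hypothetical helper written for illustration. It reproduces the timings above: the first complete hand lands at 49 seconds and the last at 52.

```python
def deal_round_robin(players, deck_size=52, seconds_per_card=1):
    """Deal one card to each player in turn; return when each hand is complete."""
    hand_size = deck_size // len(players)
    cards_held = {p: 0 for p in players}
    hand_complete_at = {}
    t = 0
    for card in range(deck_size):
        player = players[card % len(players)]  # Taylor, Charlie, Sam, Alex, repeat
        t += seconds_per_card
        cards_held[player] += 1
        if cards_held[player] == hand_size:
            hand_complete_at[player] = t
    return hand_complete_at


players = ["Taylor", "Charlie", "Sam", "Alex"]
print(deal_round_robin(players))
# {'Taylor': 49, 'Charlie': 50, 'Sam': 51, 'Alex': 52}
```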

Are you able to see the place that is going?

What if I dealt each person all of their cards in one go instead? Even with the same overall 52-second timing, individual players have a full hand of cards much sooner.

In HTTP terms: get full responses over the wire as soon as possible.
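The hand-by-hand deal can be sketched the same way, under the same assumptions (52 cards, one second per card, an even split). `deal_hand_by_hand` is a hypothetical helper; the total time is unchanged, but hands complete at 13, 26, 39, and 52 seconds.

```python
def deal_hand_by_hand(players, deck_size=52, seconds_per_card=1):
    """Deal each player their whole hand before moving on to the next player."""
    hand_size = deck_size // len(players)
    hand_complete_at = {}
    t = 0
    for player in players:
        t += hand_size * seconds_per_card  # one full hand, dealt back to back
        hand_complete_at[player] = t
    return hand_complete_at


players = ["Taylor", "Charlie", "Sam", "Alex"]
print(deal_hand_by_hand(players))
# {'Taylor': 13, 'Charlie': 26, 'Sam': 39, 'Alex': 52}
```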

Fortunately, the (s)lowest common denominator works just fine for a game of cards. You can’t start playing before everyone has all of their cards anyway, so there’s no need to ‘be useful’ much sooner than your friends.

On the web, however, things are different. We don’t want files waiting on the (s)lowest common denominator! We want files to arrive and be useful as soon as possible. We don’t want files at 49, 50, 51, and 52s when we could have them at 13, 26, 39, and 52s!
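One simple way to quantify the difference (a framing of my own, not a metric from the charts) is the average time until a hand, or a file, is complete. Using the completion times above, with the same 52-second total in both cases:

$$
\bar{t}_{\text{round-robin}} = \frac{49 + 50 + 51 + 52}{4} = \frac{202}{4} = 50.5\,\text{s}
\qquad
\bar{t}_{\text{one at a time}} = \frac{13 + 26 + 39 + 52}{4} = \frac{130}{4} = 32.5\,\text{s}
$$

The last item arrives at 52s either way; only the intermediate arrivals move earlier, and that is where the 18-second improvement in the average comes from.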

On the web, it turns out that some slightly H/1-like behaviour is still a good idea.

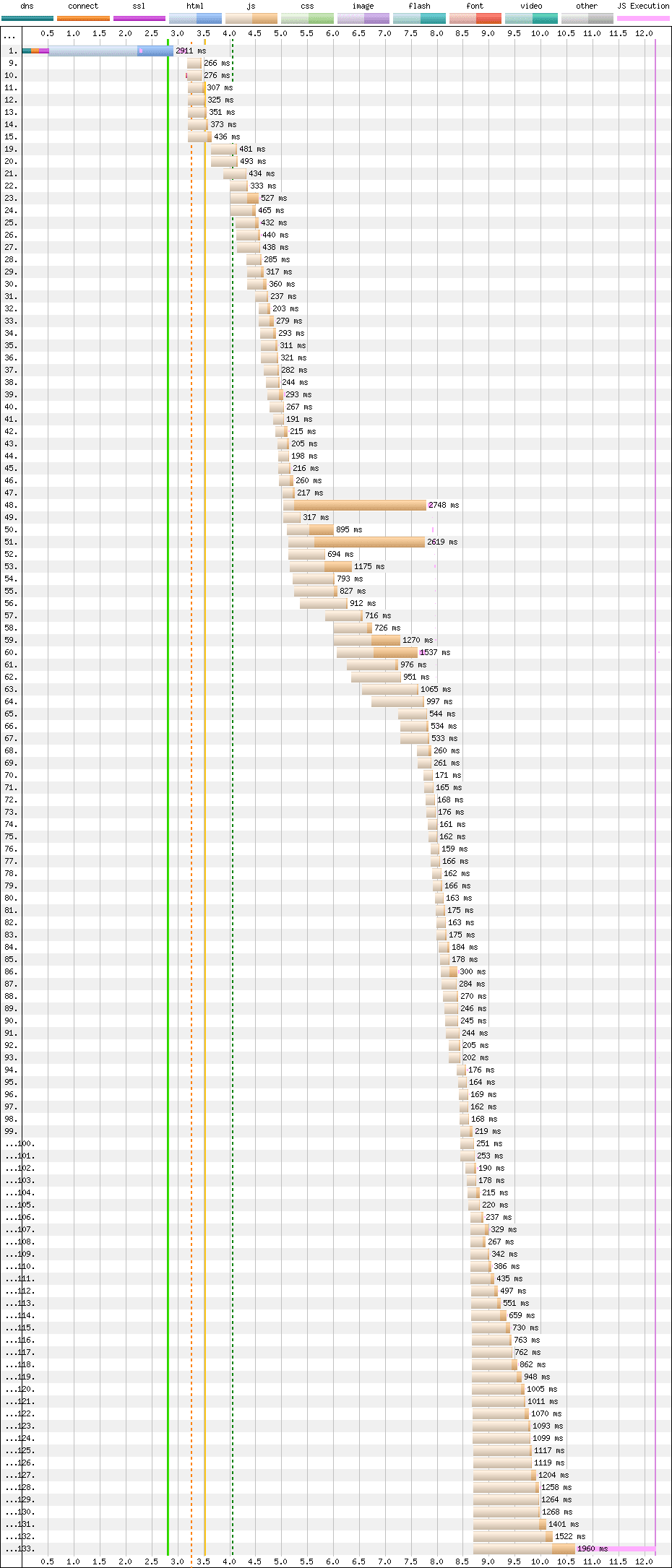

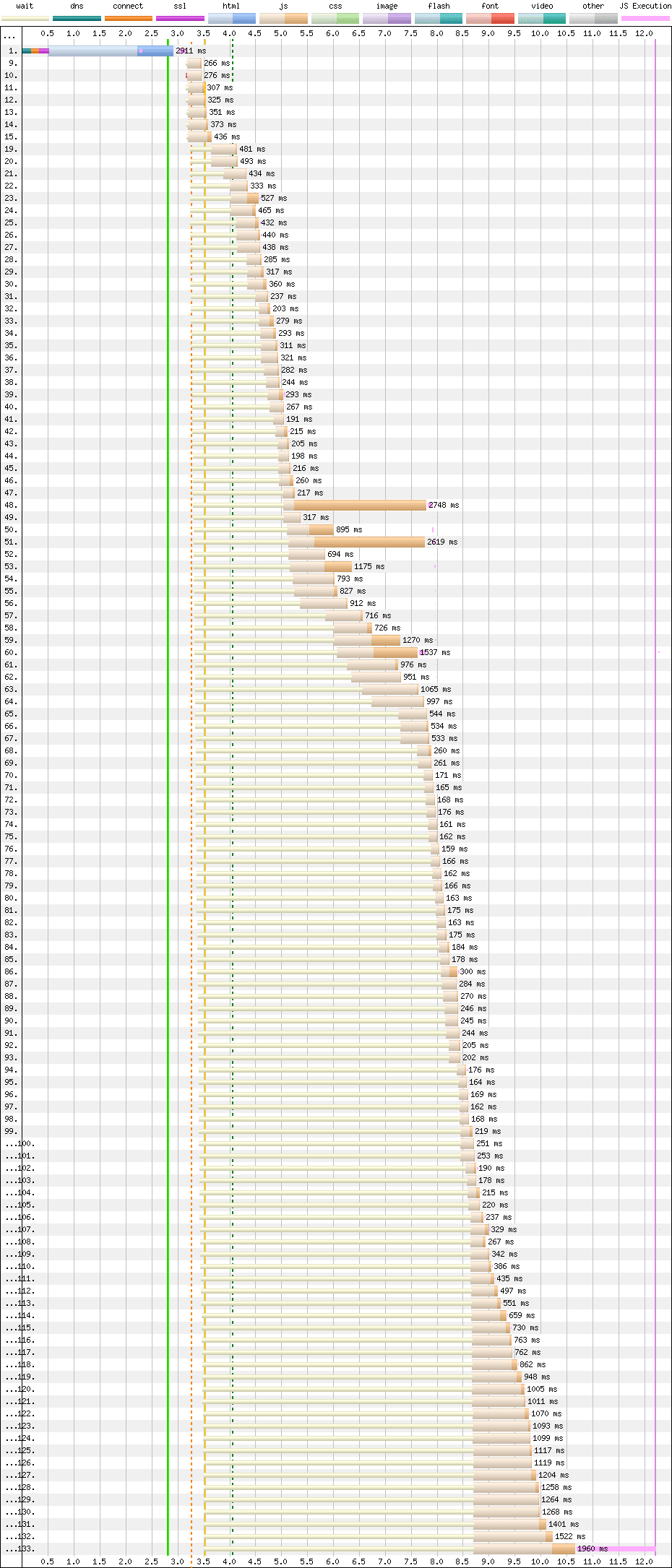

Back to our chart. Each of those files is a deferred JS bundle, meaning they need to run in sequence. Because of how everything is scheduled, requested, and prioritised, we get an elegant pattern whereby files are queued, fetched, and executed in a near-perfect order!

Queue, fetch, execute, queue, fetch, execute, queue, fetch, execute, queue, fetch, execute, queue, fetch, execute, with almost zero dead time. This is the height of elegance, and I love it.
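The queue, fetch, execute rhythm can be modelled with a small scheduler. This Python sketch rests on loudly stated assumptions: five hypothetical deferred bundles, each taking 1s to download over the full connection and 1s to execute; downloading and executing can overlap (network versus main thread); bundles must execute strictly in document order, as `defer` requires; and in the ‘all at once’ case, equal bandwidth sharing means every bundle finishes downloading at the same moment. The `run_in_order` helper and all durations are hypothetical.

```python
from itertools import accumulate


def run_in_order(download_done, exec_durations):
    """Execute bundles strictly in order. Each bundle starts once its bytes have
    arrived AND the previous bundle has finished. Returns (start, end) pairs."""
    slots = []
    prev_end = 0.0
    for ready, duration in zip(download_done, exec_durations):
        start = max(ready, prev_end)
        prev_end = start + duration
        slots.append((start, prev_end))
    return slots


fetch = [1.0] * 5    # hypothetical download time per bundle (full bandwidth)
execute = [1.0] * 5  # hypothetical execution time per bundle

# One at a time: bundle k finishes downloading at k seconds.
one_at_a_time = list(accumulate(fetch))
# All at once with equal sharing: every bundle lands when the last byte does.
all_at_once = [sum(fetch)] * len(fetch)

print(run_in_order(one_at_a_time, execute))
print(run_in_order(all_at_once, execute))
```

Under these assumptions, fetching one bundle at a time lets the first bundle start running at 1s instead of 5s, and the last one finishes at 6s instead of 10s, because each download overlaps with the previous bundle’s execution.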

I fondly refer to this whole process as ‘orchestration’ because, honestly, it strikes me as genuinely clever. And that’s why your waterfalls look like that.