I built an iOS object detection app with YOLOv5 and Core ML. Here’s how you can build one too!

This tutorial will teach you how to build a live video object detection iOS app using the YOLOv5 model!

The tutorial is based on this object detection example provided by Apple, but it focuses in particular on showing how to use custom models on iOS.

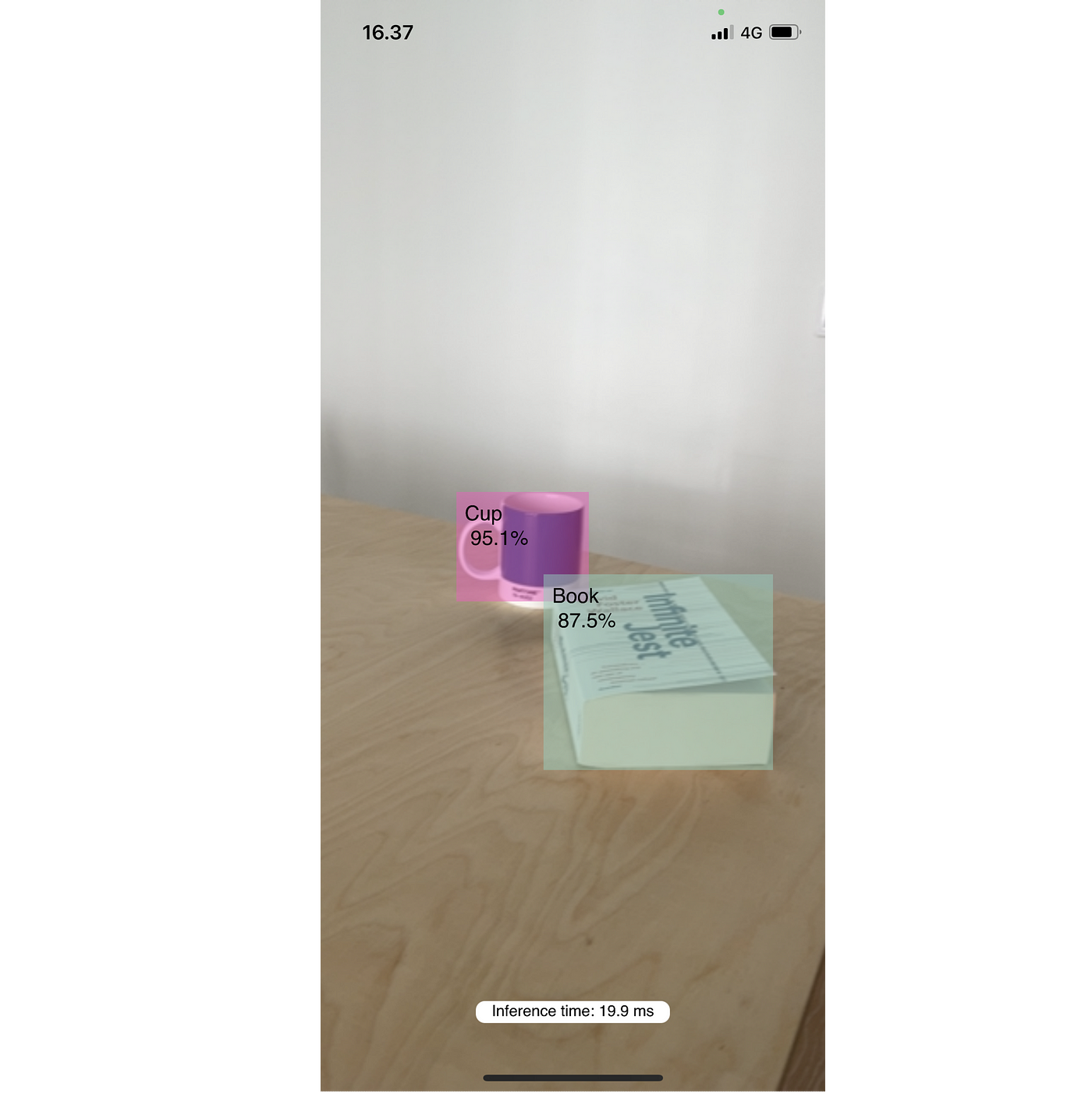

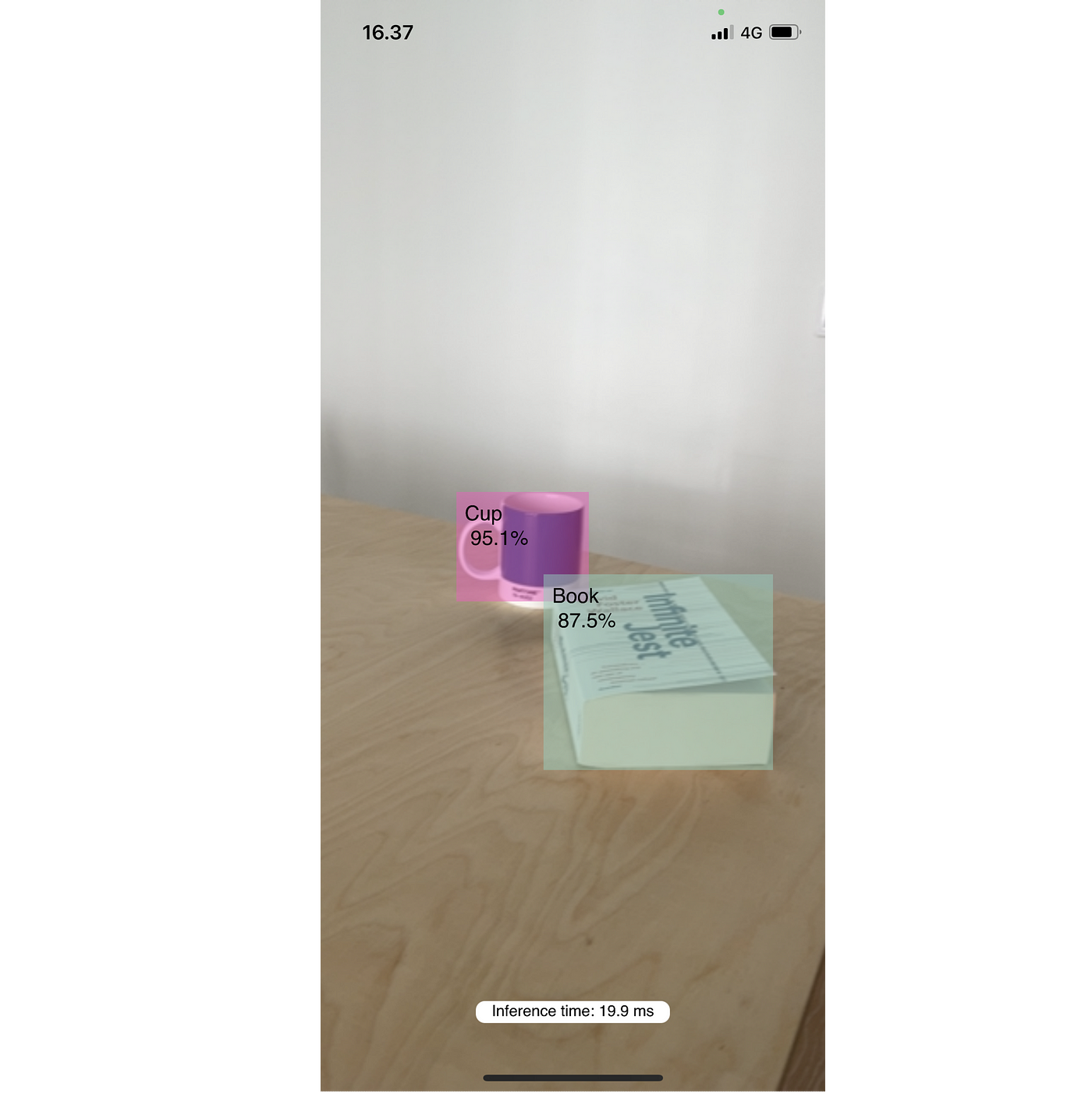

To build up the excitement, here’s what the final version will look like:

Below you can find a recap of the key building blocks we will need for building the example app.

YOLOv5

YOLOv5 is a family of object detection models built using PyTorch. The models detect objects from single images, and the model output includes the predicted bounding boxes, the class of each bounding box, and the confidence of each prediction.

The pretrained YOLOv5 models have been trained using the COCO dataset, which includes 80 different everyday object categories, but training models with custom object categories is very straightforward. One can refer to the official guide for instructions.

The YOLOv5 GitHub repository offers various utility tools, such as exporting the pretrained PyTorch models into various other formats. Such formats include ONNX, TensorFlow Lite, and Core ML. Using Core ML is the focus of this tutorial.

Core ML

Apple’s Core ML enables using Machine Learning (ML) models on iOS devices. Core ML provides various APIs and models out-of-the-box, but it also enables building completely custom models.

Vision

Apple’s Vision framework is designed to enable various standard computer vision tasks on iOS. It also allows using custom Core ML models.

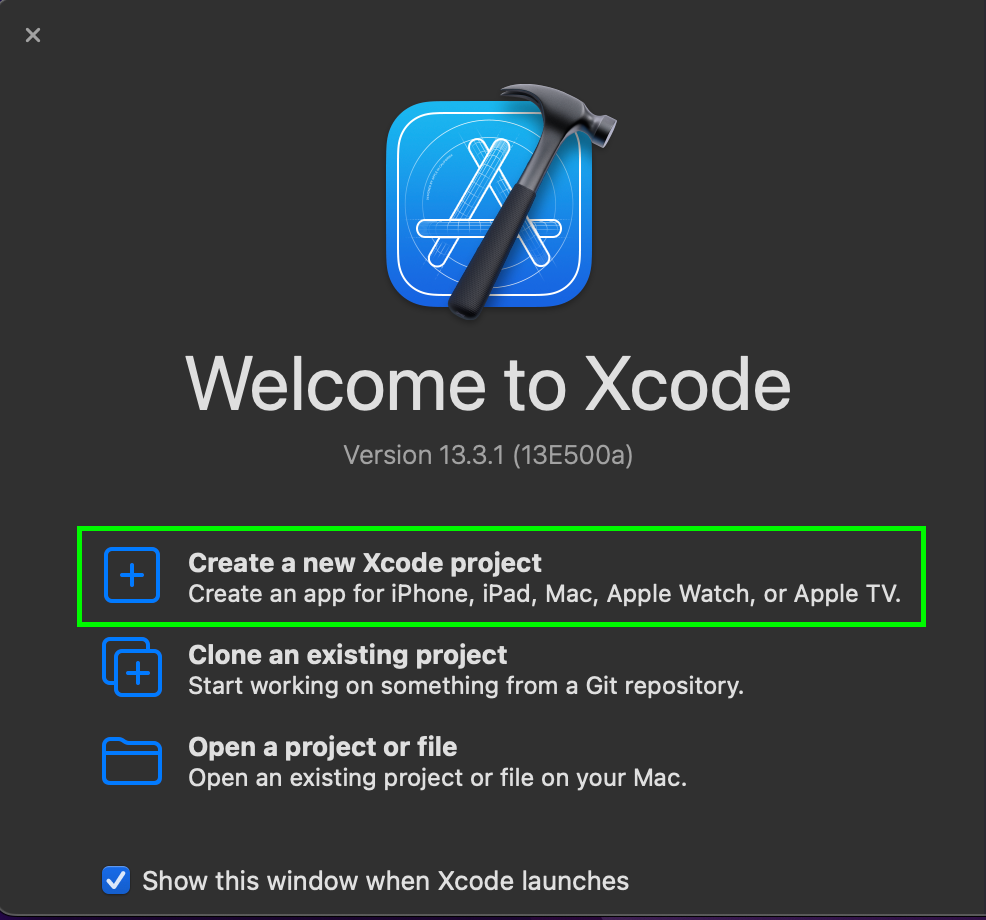

Start by opening Xcode and selecting “Create a new Xcode project”

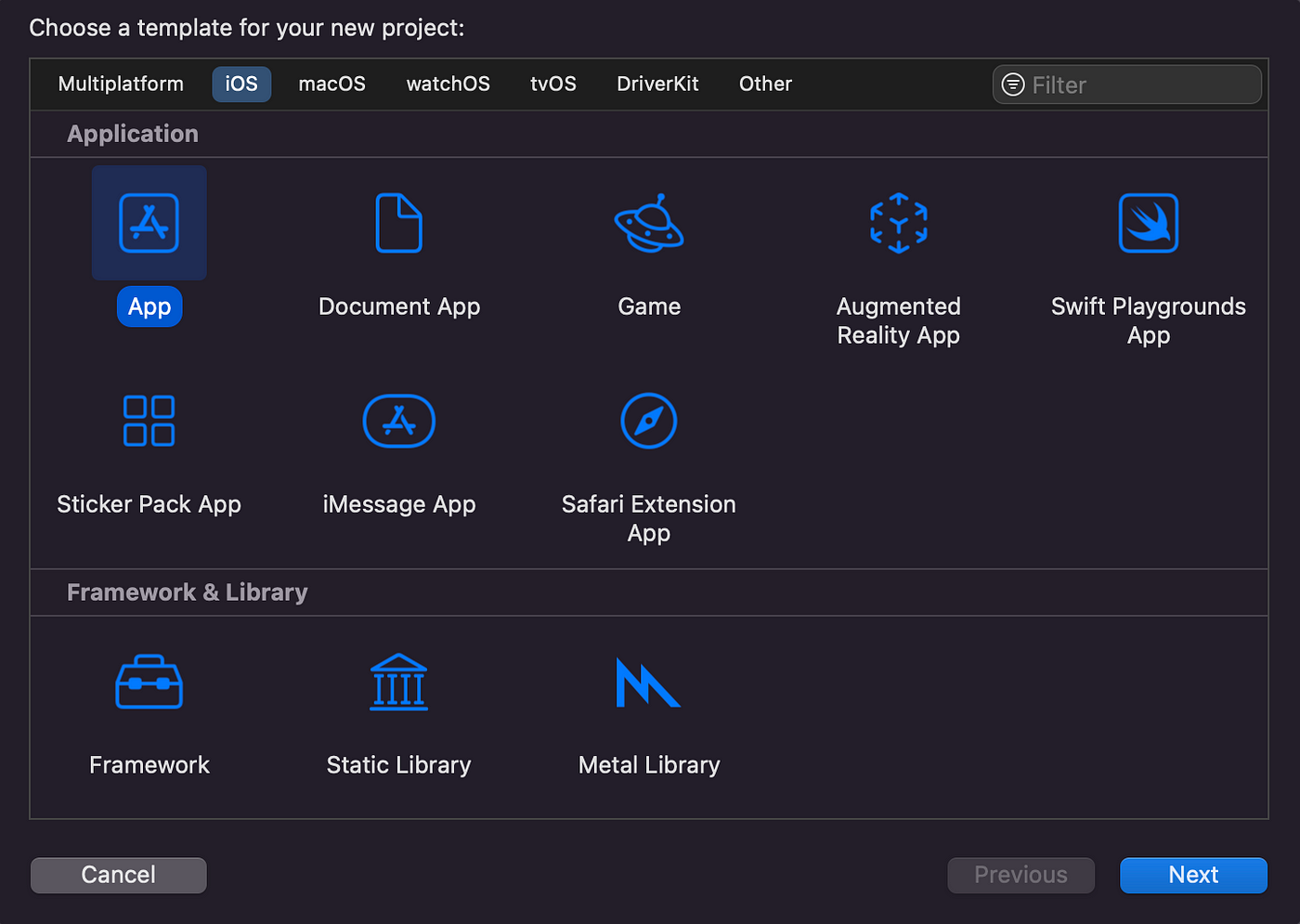

For the template type, select “App”

Make sure to edit the Organization Identifier to, for example, your GitHub username and select a name for the project (Product Name). For the Interface, select “Storyboard” and for Language select “Swift”.

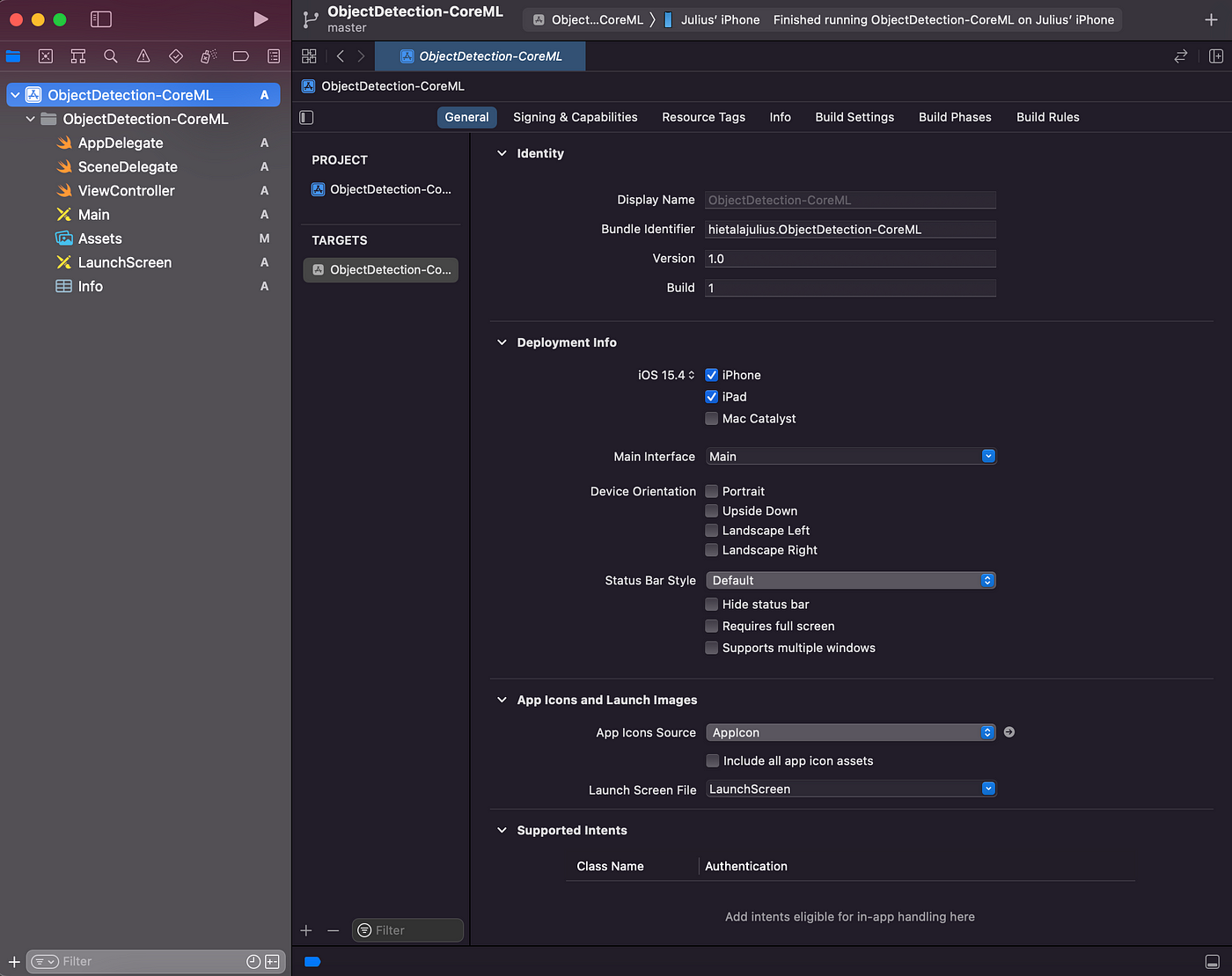

After the above steps are completed, the project structure will look as follows:

The full example source code can be found here, but we’ll walk through the important parts in the following sections.

ViewController

The main logic for the app will be created in the ViewController class. The code below shows the members of the class.

The main items created are the video capture session and the visual layers for displaying the output video stream, the detections, and the inference time. The Vision prediction requests array is also initialized here.
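As a rough sketch of what such a set of members could look like: the names previewView, previewLayer, detectionLayer, and inferenceTimeLayer come from this tutorial, while session, videoOutput, videoQueue, and requests are assumed names chosen for illustration.

```swift
import AVFoundation
import UIKit
import Vision

// Sketch only: session, videoOutput, videoQueue and requests are assumed names.
final class ViewController: UIViewController, AVCaptureVideoDataOutputSampleBufferDelegate {
    // Connected to the main view in Main.storyboard.
    @IBOutlet weak var previewView: UIView!

    // Capture pipeline: camera input -> session -> video frames.
    let session = AVCaptureSession()
    let videoOutput = AVCaptureVideoDataOutput()
    let videoQueue = DispatchQueue(label: "videoQueue") // hypothetical queue label

    // Visual layers stacked on top of previewView.
    var previewLayer: AVCaptureVideoPreviewLayer!
    var detectionLayer: CALayer!
    var inferenceTimeLayer: CATextLayer!

    // Vision requests that are performed on every captured frame.
    var requests = [VNRequest]()
}
```

The layers are declared as implicitly unwrapped optionals because they can only be sized once the view has been loaded.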

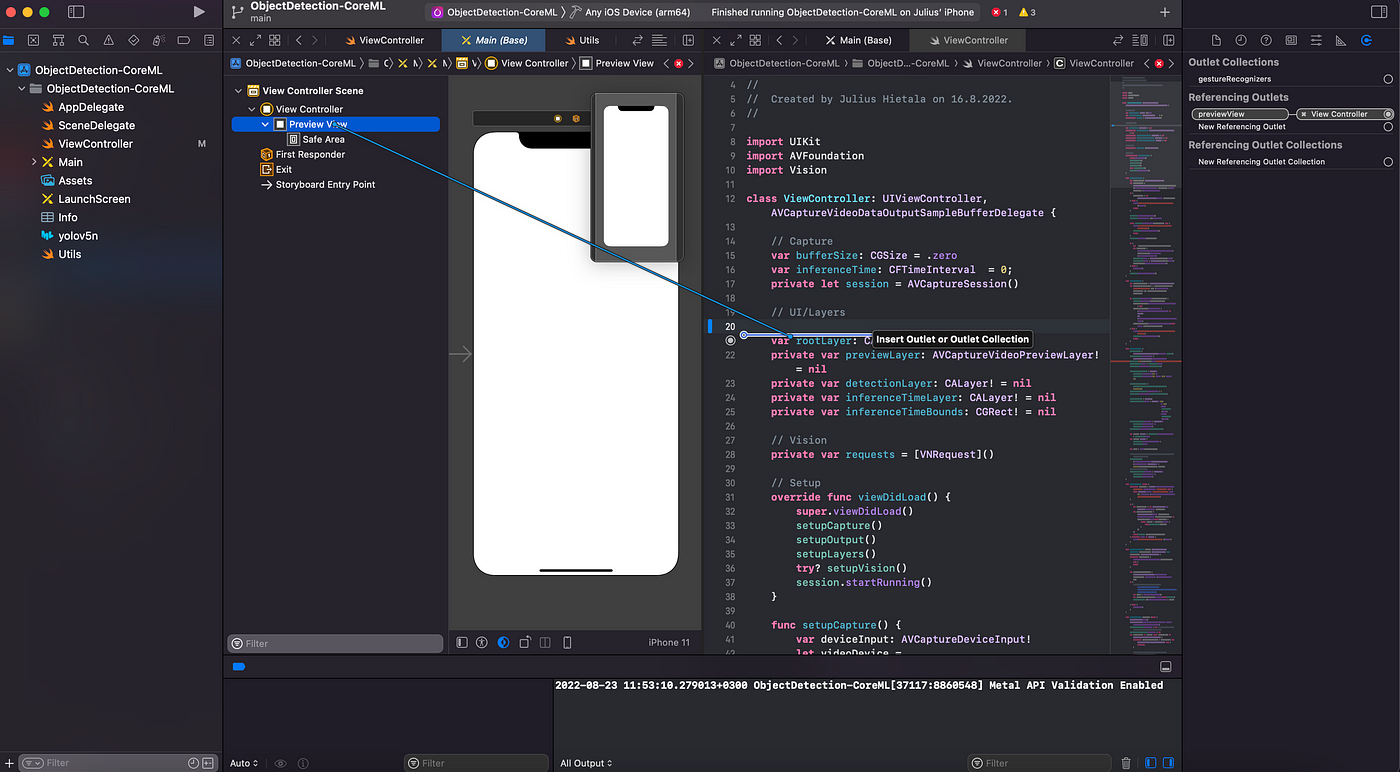

Most importantly, the previewView needs to be connected to the main View in the Main.storyboard file so that the different visual layers can be displayed on the screen. This can be done by selecting the view, pressing control, and dragging it into one of the lines of ViewController:

viewDidLoad

After the class members have been initialized, you should set up the viewDidLoad method, which is called after the view controller has loaded its view hierarchy into memory. The method is split into initializing

- Video capture

- Video preview output

- Visual layers

- Vision predictions.

Here’s how it is laid out in the code:
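A minimal sketch of that ordering, assuming one helper method per step. Only setupOutput is a name used in this tutorial; the class name, the other method names, and the completedSteps array are hypothetical, and the method bodies are stubs that merely record the call order.

```swift
import UIKit

// Sketch only: stub methods that show the order of the four setup steps.
final class SetupOrderViewController: UIViewController {
    // Records which steps ran; exists only to make the sketch observable.
    private(set) var completedSteps = [String]()

    override func viewDidLoad() {
        super.viewDidLoad()
        setupCaptureSession() // video capture
        setupOutput()         // video preview output
        setupLayers()         // visual layers
        setupVision()         // Vision predictions
    }

    private func setupCaptureSession() { completedSteps.append("capture") }
    private func setupOutput() { completedSteps.append("output") }
    private func setupLayers() { completedSteps.append("layers") }
    private func setupVision() { completedSteps.append("vision") }
}
```

Keeping each step in its own method makes viewDidLoad read like a table of contents for the rest of the class.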

Setting up video input and output

Let’s walk through the different components, starting with setting up video capture and output. Here are the implementations:

The gist (no pun intended) of the logic is to find a device (the back camera) and add it to the AVCaptureSession. The setupOutput method also adds an output to the session. To summarize, the AVCaptureSession is responsible for connecting inputs (camera) to outputs (screen).
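The input and output wiring described above could be sketched roughly as follows. This folds both steps into a single hypothetical function, makeCaptureSession, instead of the separate methods the tutorial describes; the error enum, the session preset, and the pixel format are assumptions as well. The app also needs an NSCameraUsageDescription entry in Info.plist before iOS grants camera access.

```swift
import AVFoundation

// Hypothetical error type for this sketch.
enum CaptureSetupError: Error {
    case noBackCamera
    case cannotAddInput
    case cannotAddOutput
}

/// Builds a session that reads the back camera and delivers frames to `delegate`.
func makeCaptureSession(
    delegate: AVCaptureVideoDataOutputSampleBufferDelegate,
    queue: DispatchQueue
) throws -> AVCaptureSession {
    let session = AVCaptureSession()
    session.beginConfiguration()
    defer { session.commitConfiguration() }
    session.sessionPreset = .vga640x480 // assumed preset; small frames keep inference fast

    // Input: the back wide-angle camera.
    guard let camera = AVCaptureDevice.default(.builtInWideAngleCamera,
                                               for: .video,
                                               position: .back) else {
        throw CaptureSetupError.noBackCamera
    }
    let input = try AVCaptureDeviceInput(device: camera)
    guard session.canAddInput(input) else { throw CaptureSetupError.cannotAddInput }
    session.addInput(input)

    // Output: raw video frames handed to the delegate on a background queue.
    let output = AVCaptureVideoDataOutput()
    output.alwaysDiscardsLateVideoFrames = true
    output.videoSettings = [
        kCVPixelBufferPixelFormatTypeKey as String:
            Int(kCVPixelFormatType_420YpCbCr8BiPlanarFullRange)
    ]
    output.setSampleBufferDelegate(delegate, queue: queue)
    guard session.canAddOutput(output) else { throw CaptureSetupError.cannotAddOutput }
    session.addOutput(output)

    return session
}
```

Calling startRunning() on the returned session, preferably off the main thread, starts the flow of frames. Discarding late frames is a deliberate choice here: if inference is slower than the camera, it is better to skip frames than to build up a backlog.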

Setting up the UI layers

The video visible display screen layers are arrange as follows:

There are different layers for displaying the preview video (previewLayer, which takes the AVCaptureSession as an argument), the detections (detectionLayer), and the current inference time (inferenceTimeLayer).
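One way to stack these three layers is sketched below. The layer names follow the tutorial; the OverlayLayers struct, the makeOverlayLayers function, and all sizes, fonts, and colors are assumptions made for illustration.

```swift
import AVFoundation
import UIKit

// Hypothetical container for the three layers.
struct OverlayLayers {
    let previewLayer: AVCaptureVideoPreviewLayer
    let detectionLayer: CALayer
    let inferenceTimeLayer: CATextLayer
}

/// Adds the preview, detection, and inference time layers to `view`, bottom to top.
func makeOverlayLayers(for session: AVCaptureSession, in view: UIView) -> OverlayLayers {
    // Live camera feed; the layer renders whatever the session captures.
    let previewLayer = AVCaptureVideoPreviewLayer(session: session)
    previewLayer.videoGravity = .resizeAspectFill
    previewLayer.frame = view.bounds
    view.layer.addSublayer(previewLayer)

    // Transparent layer on which bounding boxes are drawn.
    let detectionLayer = CALayer()
    detectionLayer.frame = view.bounds
    view.layer.addSublayer(detectionLayer)

    // Text layer near the bottom edge that shows the latest inference time.
    let inferenceTimeLayer = CATextLayer()
    inferenceTimeLayer.frame = CGRect(x: 0,
                                      y: view.bounds.height - 60,
                                      width: view.bounds.width,
                                      height: 40)
    inferenceTimeLayer.fontSize = 24
    inferenceTimeLayer.alignmentMode = .center
    inferenceTimeLayer.foregroundColor = UIColor.white.cgColor
    inferenceTimeLayer.contentsScale = view.contentScaleFactor
    inferenceTimeLayer.string = "Inference time: -"
    view.layer.addSublayer(inferenceTimeLayer)

    return OverlayLayers(previewLayer: previewLayer,
                         detectionLayer: detectionLayer,
                         inferenceTimeLayer: inferenceTimeLayer)
}
```

The order of the addSublayer calls matters: layers added later are drawn on top, so the detections and the text stay visible over the video.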

Setting up Vision

Finally, the Vision setup is accomplished with the following:

We’ll revisit including the actual detection model in the project (lines 2 and 7 in the above gist) in the next section, but the most important part to note here is the task definition, i.e. the request that Vision should perform on line 8, and defining the callback (drawResults) for the results.
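For orientation, here is a minimal sketch of such a request. It does not reproduce the author’s gist line for line: makeDetectionRequest and its onResults callback (standing in for drawResults) are hypothetical, and the model is loaded from a compiled model URL, which is only one of several ways to load it.

```swift
import CoreML
import Vision

/// Creates a Vision request that runs the Core ML model at `modelURL`
/// and passes the detected objects to `onResults` on the main queue.
func makeDetectionRequest(
    modelURL: URL,
    onResults: @escaping ([VNRecognizedObjectObservation]) -> Void
) throws -> VNCoreMLRequest {
    // Load the compiled Core ML model and wrap it for Vision.
    let mlModel = try MLModel(contentsOf: modelURL)
    let visionModel = try VNCoreMLModel(for: mlModel)

    // The task definition: run the model and forward the observations.
    let request = VNCoreMLRequest(model: visionModel) { finished, _ in
        let observations = finished.results as? [VNRecognizedObjectObservation] ?? []
        // UI updates such as drawing boxes belong on the main queue.
        DispatchQueue.main.async { onResults(observations) }
    }
    // Assumed scaling mode: stretch the frame to the model's input size.
    request.imageCropAndScaleOption = .scaleFill
    return request
}
```

Xcode compiles an added .mlmodel file into a .mlmodelc bundle, so in an app the URL would typically come from something like Bundle.main.url(forResource: "yolov5n", withExtension: "mlmodelc").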

To tie everything together, we also define a method (captureOutput) that gets called every time a new frame arrives from the camera and whose job is to send a new object recognition request to Vision with the new image:
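A minimal sketch of such a delegate method is shown below. In the tutorial the method lives in ViewController; here it sits in a hypothetical FrameProcessor class so that the snippet is self-contained. The .right orientation (portrait device, back camera) and the lastInferenceTime property are assumptions.

```swift
import AVFoundation
import Vision

// Sketch only: a stand-in for the ViewController's delegate conformance.
final class FrameProcessor: NSObject, AVCaptureVideoDataOutputSampleBufferDelegate {
    // Vision requests to perform on each frame.
    var requests = [VNRequest]()
    // Duration of the most recent successful Vision call, in seconds.
    private(set) var lastInferenceTime: TimeInterval = 0

    // Called by AVFoundation for every new camera frame.
    func captureOutput(_ output: AVCaptureOutput,
                       didOutput sampleBuffer: CMSampleBuffer,
                       from connection: AVCaptureConnection) {
        // Extract the raw pixel buffer from the sample buffer.
        guard let pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer) else { return }

        let handler = VNImageRequestHandler(cvPixelBuffer: pixelBuffer,
                                            orientation: .right, // assumed orientation
                                            options: [:])
        let start = Date()
        do {
            // Runs synchronously; completion handlers fire before this returns.
            try handler.perform(requests)
            lastInferenceTime = Date().timeIntervalSince(start)
        } catch {
            print("Vision request failed: \(error)")
        }
    }
}
```

Because perform(_:) is synchronous, timing the call gives a simple estimate of the per-frame inference time.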

As mentioned earlier, we will need to create the YOLOv5 Core ML model before we’re able to run the app. To do so, you should clone this repository with:

git clone https://github.com/hietalajulius/yolov5

To create the exported model, you will need Python ≥ 3.7 and the dependencies, which can be installed from the root of the repository with:

pip install -r requirements.txt -r requirements-export.txt

After installing the dependencies successfully, the actual export can be run with:

python export-nms.py --include coreml --weights yolov5n.pt

The script is a modified version of the original YOLOv5 export.py script that includes non-maximum suppression (NMS) at the end of the model to support using Vision. The weights used in this example are from the nano variant of the YOLOv5 model. The script takes care of downloading the trained model, so there is no need to do that manually.
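To illustrate what NMS does conceptually, here is a small greedy, class-agnostic version in plain Swift. It is not the code the export script embeds in the model; the Detection struct, the function names, and the 0.45 IoU threshold are assumptions made for illustration.

```swift
// Hypothetical detection type: top-left corner, size, and confidence.
struct Detection {
    let x: Double
    let y: Double
    let width: Double
    let height: Double
    let confidence: Double
}

/// Intersection over union of two axis-aligned boxes.
func iou(_ a: Detection, _ b: Detection) -> Double {
    let overlapWidth = max(0, min(a.x + a.width, b.x + b.width) - max(a.x, b.x))
    let overlapHeight = max(0, min(a.y + a.height, b.y + b.height) - max(a.y, b.y))
    let intersection = overlapWidth * overlapHeight
    let union = a.width * a.height + b.width * b.height - intersection
    return union > 0 ? intersection / union : 0
}

/// Greedy NMS: keep the most confident box, drop boxes that overlap it too much, repeat.
func nonMaximumSuppression(_ detections: [Detection],
                           iouThreshold: Double = 0.45) -> [Detection] {
    var kept = [Detection]()
    for candidate in detections.sorted(by: { $0.confidence > $1.confidence }) {
        // Keep the candidate only if it does not overlap any already kept box too much.
        if kept.allSatisfy({ iou($0, candidate) <= iouThreshold }) {
            kept.append(candidate)
        }
    }
    return kept
}
```

Without this step the raw model output contains many overlapping boxes for the same object, which is why it helps to have NMS built into the exported model.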

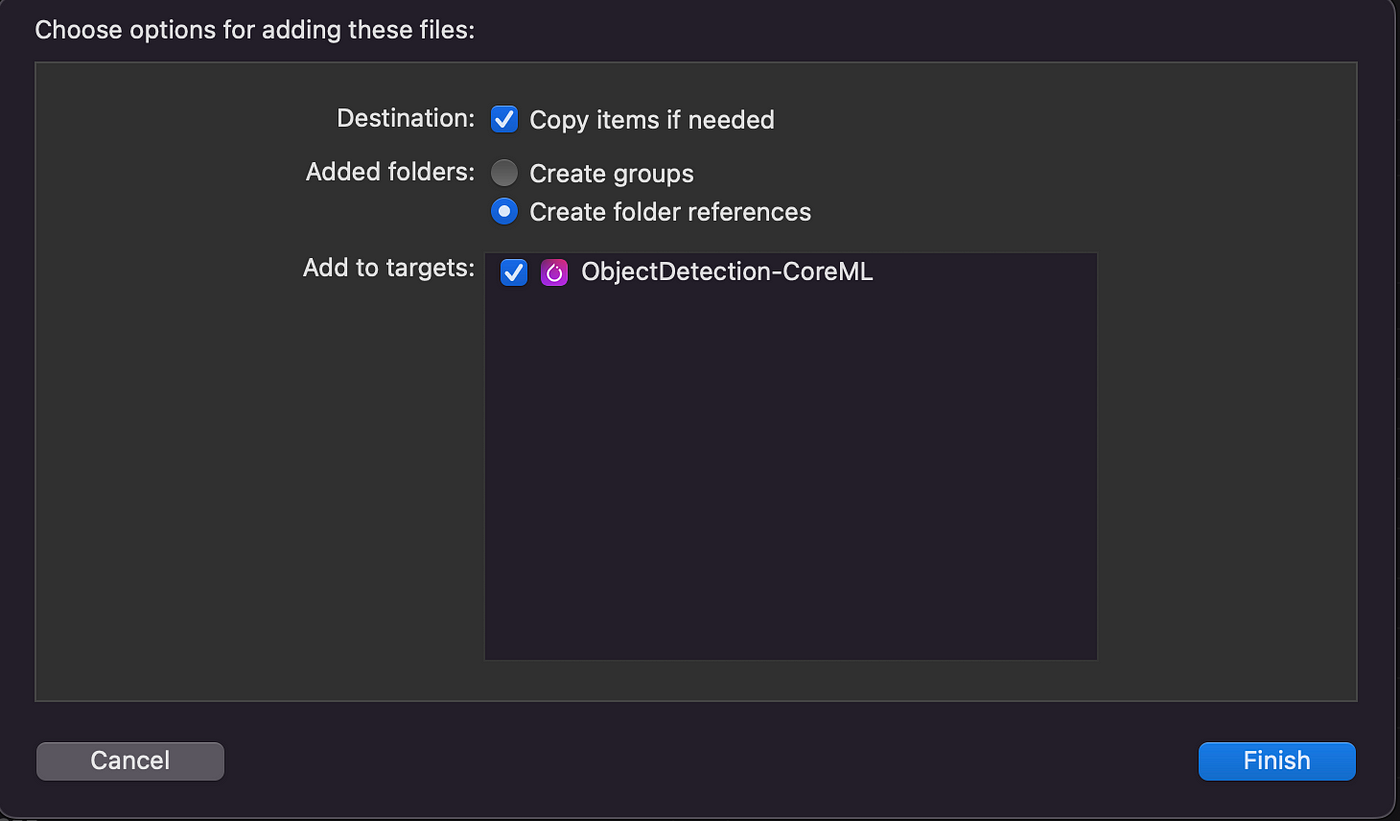

The script outputs a Core ML file called yolov5n.mlmodel, which needs to be dragged and dropped into the Xcode project:

You will be prompted with the following screen, where you should remember to include the model file in the built target (“Add to targets”).

To run the finished app, select an iOS simulator or device in Xcode. The app will start outputting predictions and the current inference time:

Thanks for reading! Feel free to give feedback and improvement ideas!

Want to connect? You can find me on LinkedIn, Twitter, and GitHub. Also check my personal website/blog for topics covering ML and web/mobile apps!