Introduction

- Importing a Tabular Dataset

- Preprocessing Data

- Training and Evaluating a Machine Learning Model

- Making New Predictions and Exporting Predictions

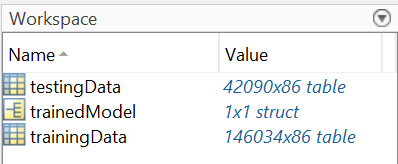

Import Data

- Training data (train.xlsx)

- Testing data (test.xlsx)

Preprocess Data

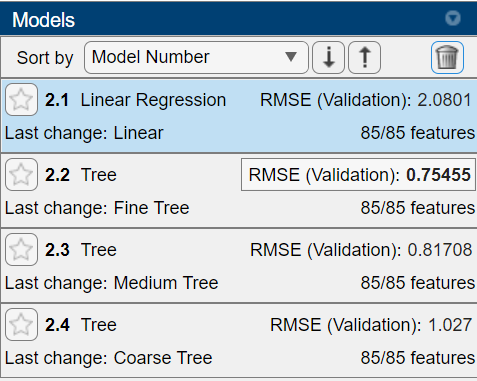
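
The preprocessing itself appears only in the screenshot. As a hedged illustration (a Python sketch rather than MATLAB, and not what the app does internally), the code below shows two common preprocessing steps: filling missing values in a numeric column with the mean of the observed values, and min-max scaling the column to [0, 1]. The function names are hypothetical. In MATLAB itself, functions such as `rmmissing` and `fillmissing` handle the missing-data step.

```python
import math


def fill_missing_with_mean(values):
    """Replace missing entries (None or NaN) with the mean of the observed entries."""
    def is_missing(v):
        return v is None or math.isnan(v)

    observed = [v for v in values if not is_missing(v)]
    if not observed:
        raise ValueError("column has no observed values to average")
    mean = sum(observed) / len(observed)
    return [mean if is_missing(v) else v for v in values]


def min_max_normalize(values):
    """Rescale values linearly to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in values]


column = [4.0, None, 8.0, float("nan"), 6.0]
filled = fill_missing_with_mean(column)   # [4.0, 6.0, 8.0, 6.0, 6.0]
print(min_max_normalize(filled))          # [0.0, 0.5, 1.0, 0.5, 0.5]
```

Mean filling keeps every row but shrinks the column's variance; dropping incomplete rows instead is the simpler alternative when few values are missing.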

Train & Evaluate a Model

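$$E = \frac{1}{n}\sum_{i=1}^{n} \left| A_i - F_i \right|^2$$

where $A_i$ is the actual value of the $i$-th observation, $F_i$ is the model's prediction for it, and $n$ is the number of observations. This is the mean squared error.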
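
To make the error formula concrete, here is a minimal Python sketch (an illustration, not the app's code) that evaluates $E$ for a list of actual values `actual` (the $A_i$) and a list of predictions `predicted` (the $F_i$). The function name is hypothetical.

```python
def mean_squared_error(actual, predicted):
    """E = (1/n) * sum over i of |A_i - F_i|^2, for equal-length sequences."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    if not actual:
        raise ValueError("need at least one observation")
    n = len(actual)
    # Square each absolute residual, sum them, then average over n.
    return sum(abs(a - f) ** 2 for a, f in zip(actual, predicted)) / n


print(mean_squared_error([3.0, 5.0, 2.0], [2.5, 5.0, 4.0]))
```

For these sample values the squared residuals are 0.25, 0 and 4, so the function returns 4.25 / 3, roughly 1.417. Because residuals are squared, one large miss dominates many small ones.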

Save and Export Predictions
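
Exporting predictions usually means writing one row per observation to a file. The Python sketch below uses only the standard `csv` module; the function name and the `id`/`prediction` column names are assumptions, not the tutorial's format. It writes to an in-memory buffer so it runs without touching disk. In MATLAB the analogous step is typically done with `writetable`.

```python
import csv
import io


def export_predictions(ids, predictions, stream):
    """Write a header row, then one (identifier, predicted value) row per observation."""
    if len(ids) != len(predictions):
        raise ValueError("ids and predictions must have the same length")
    writer = csv.writer(stream)
    writer.writerow(["id", "prediction"])
    for row_id, pred in zip(ids, predictions):
        writer.writerow([row_id, pred])


buffer = io.StringIO()
export_predictions([1, 2, 3], [0.8, 1.5, 2.1], buffer)
print(buffer.getvalue())
```

To write a real file, pass `open("predictions.csv", "w", newline="")` as the stream; the `csv` module documentation recommends `newline=""` for files handed to `csv.writer`.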

Experiment!

- Try other preprocessing methods, such as normalizing the data or creating new variables

- Play around with the training options available in the app

- Change the variables that you use to train the model

- Try machine learning and deep learning workflows

- Change the breakdown of training, testing, and validation data
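
Changing the breakdown of training, validation, and testing data amounts to choosing how the rows are partitioned. Here is a minimal Python sketch, assuming hypothetical 70/15/15 default fractions (not the app's settings), that shuffles row indices with a fixed seed and cuts them into three disjoint sets. The function name and defaults are illustrative only; in MATLAB, `cvpartition` provides a comparable hold-out partition.

```python
import random


def split_indices(n, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle row indices 0..n-1 and split them into train, validation, and test lists.

    The test set receives whatever remains after the train and validation cuts.
    """
    if train_frac < 0 or val_frac < 0 or train_frac + val_frac > 1:
        raise ValueError("fractions must be non-negative and sum to at most 1")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)          # fixed seed makes the split reproducible
    n_train = round(train_frac * n)
    n_val = min(round(val_frac * n), n - n_train)  # never cut past the end
    train = idx[:n_train]
    val = idx[n_train:n_train + n_val]
    test = idx[n_train + n_val:]
    return train, val, test


train, val, test = split_indices(100)
print(len(train), len(val), len(test))
```

Shuffling before cutting avoids putting, for example, all of the most recent rows into the test set when the file happens to be sorted; for time-ordered data a chronological split may be the better choice.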

Accomplished!

Additional Resources

- Overview of Supervised Learning (Video)

- Preprocessing Data Documentation

- Missing Data in MATLAB

- Supervised Learning Workflow and Algorithms

- Train Regression Models in Regression Learner App

- Train Classification Models in Classification Learner App

- 8 MATLAB Cheat Sheets for Data Science

- MATLAB Onramp

- Machine Learning Onramp

- Deep Learning Onramp
